Ship Reliable Agents with Agentic Evaluations

Galileo empowers developers to optimize every step of multi-span AI agents with end-to-end evaluation and observability.

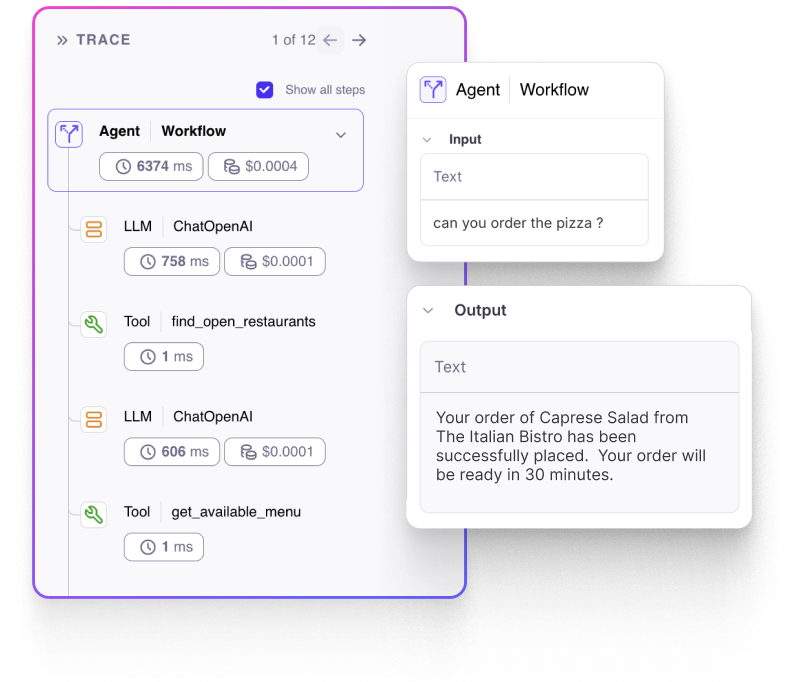

End-to-end observability

Catch every step under the hood, from LLM plan generation to tool calling and final actions.

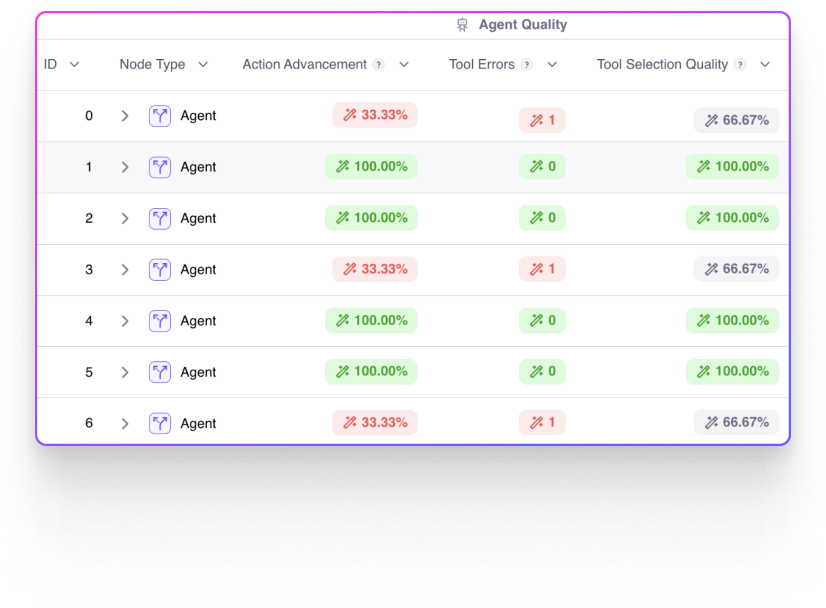

Metrics built for a world of agents

Measure and debug everything from tool selection and instruction adherence to individual tool errors and overall session success.

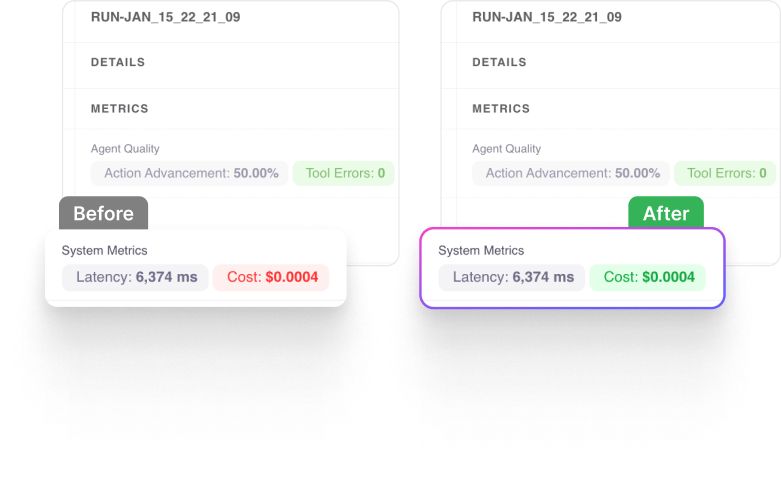

Granular cost and latency tracking

Build cost-effective agentic apps by optimizing cost and latency at every step, using side-by-side run comparisons and granular insights.