Posts tagged AI Evaluation

Best LLMs for RAG: Top Open And Closed Source Models

Top Open And Closed Source LLMs For Short, Medium and Long Context RAG

Survey of Hallucinations in Multimodal Models

An exploration of type of hallucinations in multimodal models and ways to mitigate them.

Mastering RAG: 4 Metrics to Improve Performance

Unlock the potential of RAG analysis with 4 essential metrics to enhance performance and decision-making. Learn how to master RAG methodology for greater effectiveness in project management and strategic planning.

Addressing GenAI Evaluation Challenges: Cost & Accuracy

Learn to do robust evaluation and beat the current SoTA approaches

Galileo Luna: Advancing LLM Evaluation Beyond GPT-3.5

Research backed evaluation foundation models for enterprise scale

A Metrics-First Approach to LLM Evaluation

Learn about different types of LLM evaluation metrics needed for generative applications

Mastering Agents: Build And Evaluate A Deep Research Agent with o3 and 4o

A step-by-step guide for evaluating smart agents

Meet Galileo Luna: Evaluation Foundation Models

Low latency, low cost, high accuracy GenAI evaluation is finally here. No more ask-GPT and painstaking vibe checks.

Announcing LLM Studio: A Smarter Way to Build LLM Applications

LLM Studio helps you develop and evaluate LLM apps in hours instead of days.

Webinar: Announcing Galileo LLM Studio

Webinar - Announcing Galileo LLM Studio: A Smarter Way to Build LLM Applications

Webinar – Evaluation Agents: Exploring the Next Frontier of GenAI Evals

Learn the next evolution of automated AI evaluations – Evaluation Agents.

Introducing Our Agent Leaderboard on Hugging Face

We built this leaderboard to answer one simple question: "How do AI agents perform in real-world agentic scenarios?"

Mastering Agents: Metrics for Evaluating AI Agents

Identify issues quickly and improve agent performance with powerful metrics

Metrics for Evaluating LLM Chatbot Agents - Part 2

A comprehensive guide to metrics for GenAI chatbot agents

Metrics for Evaluating LLM Chatbot Agents - Part 1

A comprehensive guide to metrics for GenAI chatbot agents

Mastering Agents: Evaluating AI Agents

Top research benchmarks for evaluating agent performance for planning, tool calling and persuasion.

Tricks to Improve LLM-as-a-Judge

Unlock the potential of LLM Judges with fundamental techniques

Measuring What Matters: A CTO’s Guide to LLM Chatbot Performance

Learn to bridge the gap between AI capabilities and business outcomes

Best Practices For Creating Your LLM-as-a-Judge

Master the art of building your AI evaluators using LLMs

Introducing ChainPoll: Enhancing LLM Evaluation

ChainPoll: A High Efficacy Method for LLM Hallucination Detection. ChainPoll leverages Chaining and Polling or Ensembling to help teams better detect LLM hallucinations. Read more at rungalileo.io/blog/chainpoll.

Mastering RAG: How To Observe Your RAG Post-Deployment

Learn to setup a robust observability solution for RAG in production

Webinar - The Future of Enterprise GenAI Evaluations

Evaluations are critical for enterprise GenAI development and deployment. Despite this, many teams still rely on 'vibe checks' and manual human evaluation. To productionize trustworthy AI, teams need to rethink how they evaluate their solutions.

LLM Hallucination Index: RAG Special

The LLM Hallucination Index ranks 22 of the leading models based on their performance in real-world scenarios. We hope this index helps AI builders make informed decisions about which LLM is best suited for their particular use case and need.

Mastering Data: Generate Synthetic Data for RAG in Just $10

Learn to create and filter synthetic data with ChainPoll for building evaluation and training dataset

Is Llama 3 better than GPT4?

Llama 3 insights from the leaderboards and experts

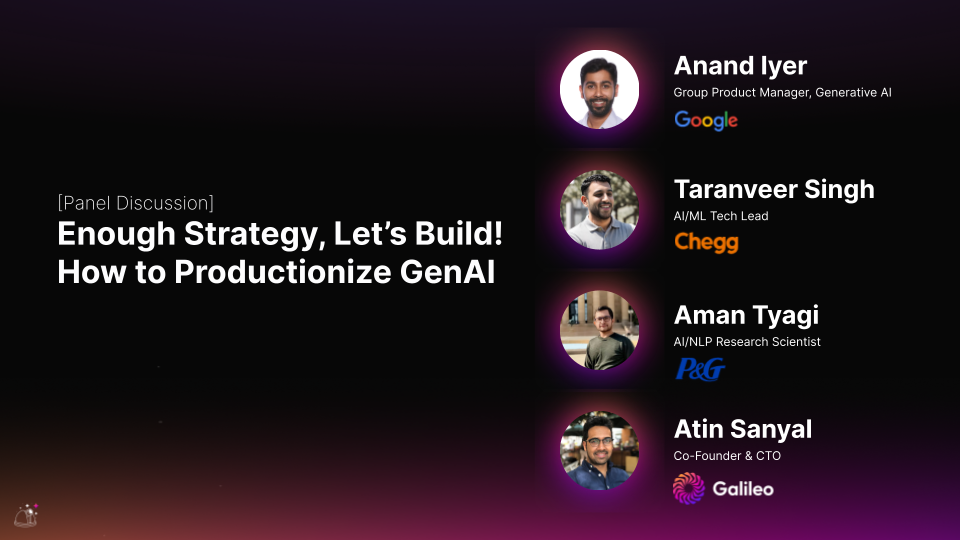

Enough Strategy, Let's Build: How to Productionize GenAI

At GenAI Productionize 2024, expert practitioners shared their own experiences and mistakes to offer tools and techniques for deploying GenAI at enterprise scale. Read key takeaways from the session on how to productionize generative AI.

Mastering RAG: How to Select an Embedding Model

Unsure of which embedding model to choose for your Retrieval-Augmented Generation (RAG) system? This blog post dives into the various options available, helping you select the best fit for your specific needs and maximize RAG performance.

Practical Tips for GenAI System Evaluation

It’s time to put the science back in data science! Craig Wiley, Sr Dir of AI at Databricks, joined us at GenAI Productionize 2024 to share practical tips and frameworks for evaluating and improving generative AI. Read key takeaways from his session.

LLM-as-a-Judge vs Human Evaluation

Understand the tradeoffs between LLMs and humans for generative AI evaluation

Mastering RAG: How To Evaluate LLMs For RAG

Learn the intricacies of evaluating LLMs for RAG - Datasets, Metrics & Benchmarks

Integrate IBM Watsonx with Galileo for LLM Evaluation

See how easy it is to leverage Galileo's platform alongside the IBM watsonx SDK to measure RAG performance, detect hallucinations, and quickly iterate through numerous prompts and LLMs.

Webinar – The Future of AI Agents: How Standards and Evaluation Drive Innovation

Join Galileo and Cisco to explore the infrastructure needed to build reliable, interoperable multi-agent systems, including an open, standardized framework for agent-to-agent collaboration.