Jul 18, 2025

How AI Model Profiling and Benchmarking Prevent Production Failures

The best AI agents are currently failing about 70 percent of the tasks assigned to them, as investments are expected to drop off a cliff. Production AI rarely fails for a single reason. Analysis reveals distinct failure modes—from inconsistent benchmarks to data drift—that can derail a model once it leaves the lab.

You're expected to watch more than a dozen KPIs covering accuracy, latency, cost, and safety, each capable of masking trouble if tracked in isolation.

This complexity reveals why traditional point-in-time testing can't keep pace with fast-moving data, evolving user behavior, or shifting regulatory demands. You need a disciplined approach that continuously profiles model behavior and benchmarks it against meaningful baselines, so issues surface before customers notice.

The playbook that follows gives you that discipline, weaving systematic evals, observability, and cost controls into one cohesive profiling and benchmarking workflow.

We recently explored this topic on our Chain of Thought podcast, where industry experts shared practical insights and real-world implementation strategies:

Understanding AI model profiling and benchmarking

Your production model works beautifully in testing, then crashes spectacularly when real users hit it. Sound familiar? You can't optimize what you don't measure, and you can't trust what you don't compare.

Model profiling reveals how your system actually behaves under load—where it burns through GPU cycles, when memory spikes occur, and why response times suddenly crater. Benchmarking tells you whether that behavior is competitive enough for production.

Together, they prevent the costly surprises that derail AI deployments.

What is AI model benchmarking?

AI model benchmarking is the process of systematically evaluating and comparing the performance of AI models using standardized datasets, tasks, and metrics. You might test your fraud detection system against a standard dataset, compare F1 scores to published baselines, and decide whether it meets your release criteria.

Yet this seemingly straightforward process hides serious pitfalls. Inconsistent datasets and scoring methods make fair comparisons nearly impossible. Many eval frameworks cover only narrow use cases, so your model can dominate leaderboards while failing catastrophically in production scenarios.

Modern enterprises need domain-specific eval frameworks that are refreshed regularly and reflect actual deployment conditions. Generic baselines might validate your research, but they won't predict whether your model survives contact with messy, real-world data.

What is AI model profiling?

AI model profiling is the comprehensive analysis of an AI model's behavior, resource usage, and performance characteristics across various conditions to identify performance bottlenecks, optimize efficiency, and understand system limitations.

Unlike benchmarking's "How do I compare?" question, profiling asks "Where does my system waste resources?"

Trace your inference paths and you'll uncover GPU bottlenecks, inefficient token generation, or memory spikes that hint at deeper architectural problems. Effective profiling feeds real-time dashboards that catch anomalies before customers experience them. Think of it as continuous health monitoring rather than periodic checkups.

Key dimensions of effective model performance

Model quality isn't one-dimensional. Your optimization efforts need a balanced scorecard that prevents tunnel vision on single metrics, especially when monitoring comprehensive KPIs for GenAI model health:

Performance consistency: Tracking accuracy, latency, and throughput variations across different data distributions, user loads, and operating conditions to identify potential failure modes before they impact production systems

Resource efficiency: Monitoring computational requirements, memory utilization, and infrastructure costs to optimize deployment strategies and ensure sustainable scaling as usage grows

Quality assurance: Evaluating output quality, reliability patterns, and edge case handling to maintain user trust and business value throughout the model lifecycle

Competitive positioning: Benchmarking against industry standards, alternative approaches, and evolving capabilities to inform strategic decisions about model architecture and optimization priorities

Operational resilience: Assessing system behavior under stress conditions, failure recovery capabilities, and degradation patterns to build robust production environments that maintain service quality during unexpected scenarios

Balancing these dimensions ensures your optimization translates into dependable, scalable systems that perform well and stay within budget constraints.

Best practices for comprehensive AI model benchmarking and profiling

Seven interlocking strategies let you move beyond one-off tests to a living system of measurement and improvement.

Together they form a closed loop: you start with a trustworthy dataset, compare against industry benchmarks to understand competitive positioning, instrument the model for continuous observation, inject human insight where automation stalls, and squeeze every last token, GPU cycle, and dollar from the pipeline.

Each tactic carries value on its own, but when combined, they create a foundation for confident scaling and optimization.

Build a gold-standard evals dataset

Your assessments are only as good as your data, and generic public datasets rarely mirror your domain's quirks. When distribution drifts even slightly, evaluation frameworks become mirages. Gold-standard datasets counter that risk because every sample is hand-picked, expertly labeled, and version-controlled.

Start by mapping the full spectrum of real traffic—common cases, edge cases, and outright anomalies—then sample to cover it all. Balance class frequencies so one label doesn't drown out the rest; skewed labels mask weaknesses and inflate headline scores.

Multiple annotators with clear rubrics drive consistency, while inter-rater metrics expose ambiguity you still need to fix.

However, prevent data leakage early: split train, dev, and test sets before any modeling, track provenance, and audit overlaps. Treat the dataset like code with version tags, changelogs, and immutable releases.

This guarantees that when you rerun an experiment next quarter, you're comparing apples to apples.

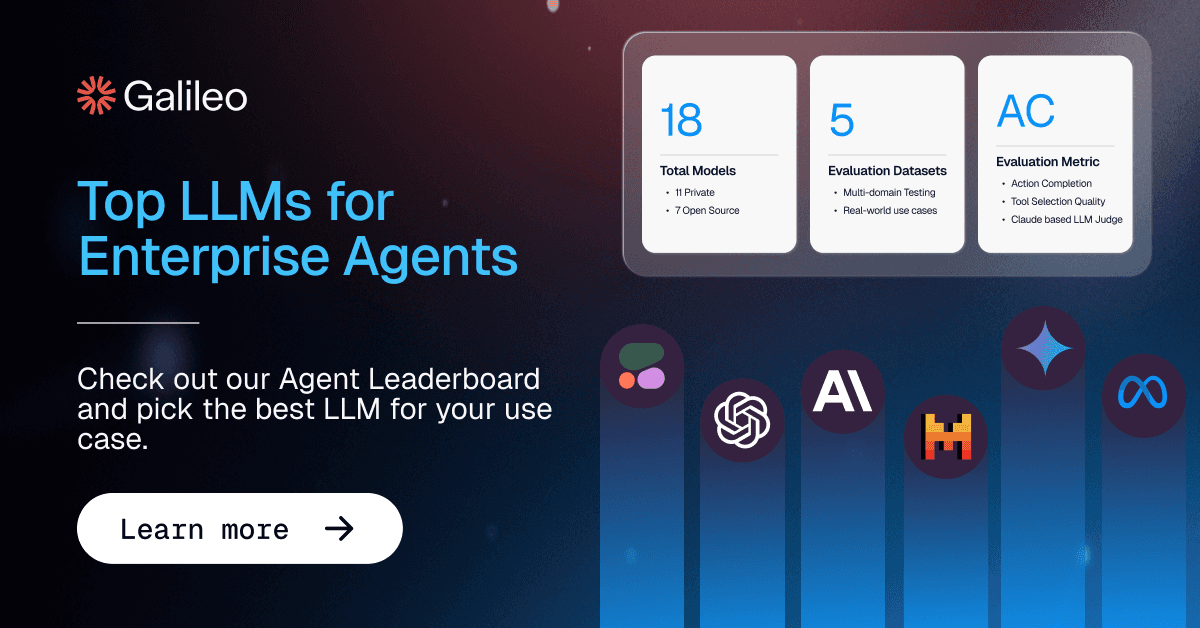

Compare against industry-standard agent leaderboards

Selecting the right AI model for your production workload remains one of the most consequential decisions teams make.

Academic benchmarks tell you how models perform on isolated tasks, but they rarely predict behavior when agents orchestrate complex workflows involving tool selection, multi-turn reasoning, and error recovery under production constraints.

This gap between test performance and real-world reliability creates expensive mistakes. Teams often discover after deployment that their chosen model excels at synthetic benchmarks yet struggles with the specific agent capabilities their application demands—tool coordination, or context retention across extended interactions.

Industry-standard agent leaderboards address this disconnect by evaluating models against multi-dimensional scenarios that mirror actual deployment conditions.

Galileo's Agent Leaderboard synthesizes eval datasets from Berkeley's Function Calling Leaderboard, τ-bench, xLAM, and ToolACE to create comprehensive assessments spanning 14 diverse benchmarks across academic, retail, airline, and enterprise API domains.

Rather than relying on single-score rankings, effective model selection requires examining performance across specific capability dimensions.

Automate performance monitoring and observability

Models seldom fail spectacularly; they erode quietly. Latency creeps, accuracy wobbles, a data pipe drops a column. Most teams discover these issues only after users complain or metrics crater. Automated observability spots those hairline cracks long before users tweet screenshots.

Real-time monitors cut incident resolution time because alerts trigger at the first metric blip. Instrument agent-level traces to capture full decision paths, then configure thresholds for 95th-percentile latency and critical accuracy KPIs.

Store raw traces for months so you can trace regressions back to the moment a schema changed.

More traces can mean more noise. Combat "trace overload" with aggregation layers that surface only statistically significant anomalies. Galileo's Graph Engine visualization clusters similar failure modes, while the Insights Engine auto-suppresses duplicate alerts so you focus on outliers, not dashboards.

Early drift detection here feeds directly into the next strategy—baseline management—closing the gap between observation and action.

Use continuous baseline management

Here's the trap most teams fall into: they confuse evaluation standards with performance baselines. External comparisons gauge you against the outside world; baselines compare you to yesterday's best.

A living CI/CD pipeline should re-evaluate the model whenever code or data shifts, block releases if scores slip, and update the baseline once new gains prove stable.

Teams that skip this rigor discover, too late, that once-stellar performance decayed months ago under new traffic patterns. Wire baseline tests into your deployment gates: on every pull request, kick off evals, calculate core KPIs, and fail the build if any fall below thresholds.

Schedule quarterly audits to ensure those thresholds still reflect market reality; otherwise, you end up guarding an outdated goalpost. Galileo ships with regression gates and visual diff dashboards, letting you promote or roll back models with a click while keeping leadership loops informed through automated alerts.

Leverage human-in-the-loop validation

What happens when your model generates a medical recommendation or explains a policy decision? Automated metrics can't judge nuance, tone, or ethical grey areas. Complex outputs demand contextual judgment that no metric captures.

Human-in-the-loop workflows flag low-confidence or high-impact samples for expert review, apply a consistent rubric, and measure inter-rater agreement to curb subjectivity. Prioritize sampling where automated evaluators disagree, where business risk is highest, or where new data domains appear.

To keep costs sane, reviewers handle tens, not thousands, of examples; their decisions retrain lightweight evaluators that then scale across the full dataset.

Galileo's Continuous Learning via Human Feedback lets you label as few as five examples, retrain the evaluator instantly, and redeploy without pipeline changes—preserving agility while injecting human judgment precisely where it matters.

Optimize resource and cost efficiency

High accuracy loses its shine if every inference drains your budget. You've probably seen the pattern: initial deployments work beautifully in testing, then production costs spiral out of control. Profiling exposes where cycles evaporate—bloated context windows, chatty prompts, and under-utilized GPUs.

Key metrics such as cost per token and hardware utilization help you trace spend down to individual calls. Start by batching similar requests, trimming prompt overhead, and exploring quantization to shrink model weights without gutting quality.

Measure again—never assume savings.

For evaluation itself, Galileo’s Luna-2 model slashes cost by 97% versus GPT-4, proving frugality needn't sacrifice rigor. The rule of thumb: instrument first, optimize second, celebrate third. Continuous profiling keeps the celebration short and the savings permanent.

Develop custom domain-specific metrics

Generic metrics like accuracy and F1 scores fail to capture what matters in specialized domains. For example, financial models might prioritize false-positive avoidance over recall, while medical systems might weight critical condition detection differently than routine observations.

Domain-specific metrics translate business requirements into quantifiable measurements that directly connect model performance to real-world impact. Begin by interviewing subject matter experts about what constitutes success or failure in their workflow—the distinctions often surprise technical teams focused on statistical measures.

Map these insights to custom evaluation functions that weight different error types according to their business cost. For instance, misclassifying a high-risk loan application as low-risk carries greater consequences than the reverse error.

Modern evaluation platforms like Galileo enable creating custom metrics without rebuilding your evals infrastructure. With Continuous Learning via Human Feedback) capability, domain experts provide annotated examples to train specialized evaluators that reflect nuanced business requirements, closing the gap between technical metrics and business outcomes.

Accelerate reliable AI with Galileo

Your AI stack becomes bulletproof when these strategies work in unison. Real-time monitoring catches drifts the moment they surface, cutting investigation time drastically. Human reviewers handle the nuanced cases machines miss, bringing contextual judgment and ethical oversight to automated decisions.

Here’s how Galileo gives you the foundation for trustworthy AI at enterprise scale.

Automated quality guardrails in CI/CD: Galileo integrates directly into your development workflow, running comprehensive eval on every code change and blocking releases that fail quality thresholds

Multi-dimensional response evaluation: With Galileo's Luna-2 evaluation models, you can assess every output across dozens of quality dimensions—correctness, toxicity, bias, adherence—at 97% lower cost than traditional LLM-based evaluation approaches

Real-time runtime protection: Galileo's Agent Protect scans every prompt and response in production, blocking harmful outputs before they reach users while maintaining detailed compliance logs for audit requirements

Intelligent failure detection: Galileo’s Insights Engine automatically clusters similar failures, surfaces root-cause patterns, and recommends fixes, reducing debugging time while building institutional knowledge

Human-in-the-loop optimization: Galileo's Continuous Learning via Human Feedback (CLHF) transforms expert reviews into reusable evaluators, accelerating iteration while maintaining quality standards

Get started with Galileo today and discover how comprehensive observability can elevate your AI models and achieve reliable AI systems that users trust.

Conor Bronsdon