Oct 10, 2025

Galileo vs. LangSmith: The Observability Platform That Doesn’t Just Log Failures, It Stops Them

When BAM Elevate needed to evaluate 1,000 agentic workflows—some retrieving 100+ documents—they hit a wall. Traditional LLM-as-judge evals would cost $50K+ monthly at GPT-4 pricing. Latency would stretch into seconds, and framework lock-in would trap them in LangChain forever.

They needed millisecond feedback at a fraction of the cost, across any orchestration framework, with the ability to block failures before they reach production.

This is where Galileo and LangSmith diverge completely.

LangSmith is built for the "move fast and log everything" phase. It traces LangChain applications beautifully. But when you need synthetic test data, runtime guardrails, or evaluator reusability, you're building those yourself or buying additional tools.

Galileo is built for the "ship safely at scale" phase. Framework-agnostic observability, sub-200-ms inline protection. One platform that doesn't require duct tape and external services to fill gaps.

In this comparison, you’ll see how these two platforms compare across key features, technical depth, integration flexibility, security, and cost so you can select the approach that best fits your production roadmap.

Check out our Agent Leaderboard and pick the best LLM for your use case

Galileo vs. Langsmith compared across key features

When comparing observability platforms, these dimensions separate production-ready solutions from prototyping tools:

Data generation: Can you test edge cases before they appear in production, or are you limited to capturing real user interactions?

Metric reusability: Will your team rebuild the same evaluators for each project, or can you create once and deploy everywhere?

Runtime protection: Does the platform block harmful outputs inline, or only log them for post-incident review?

Framework portability: What happens when you migrate orchestration layers? Does your observability investment carry forward or require rewrites?

Operational maturity: What are the platform's SLAs, incident history, and data handling during outages?

Capability | Galileo | LangSmith |

Core focus | Agent observability + runtime protection | LLM tracing & debugging |

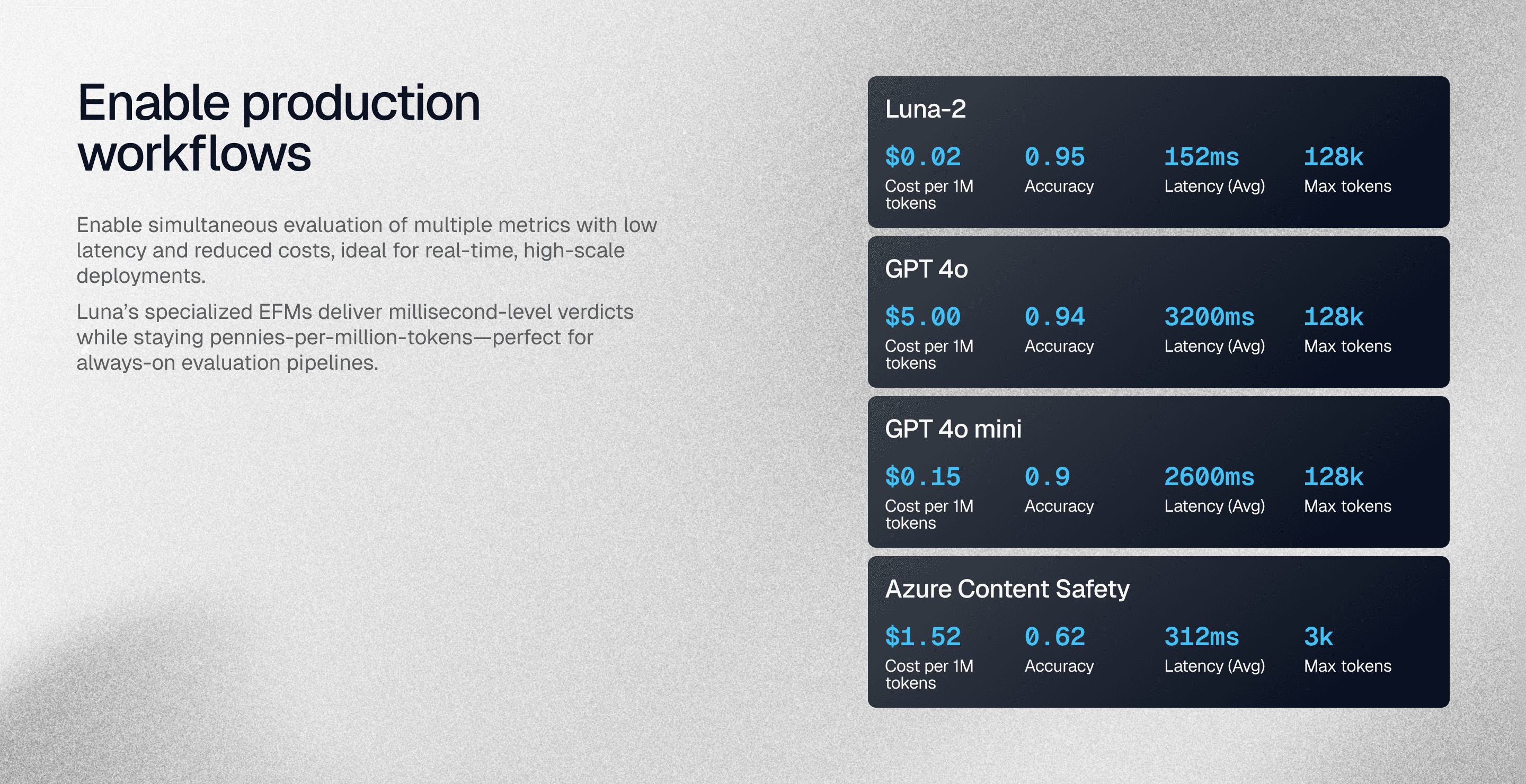

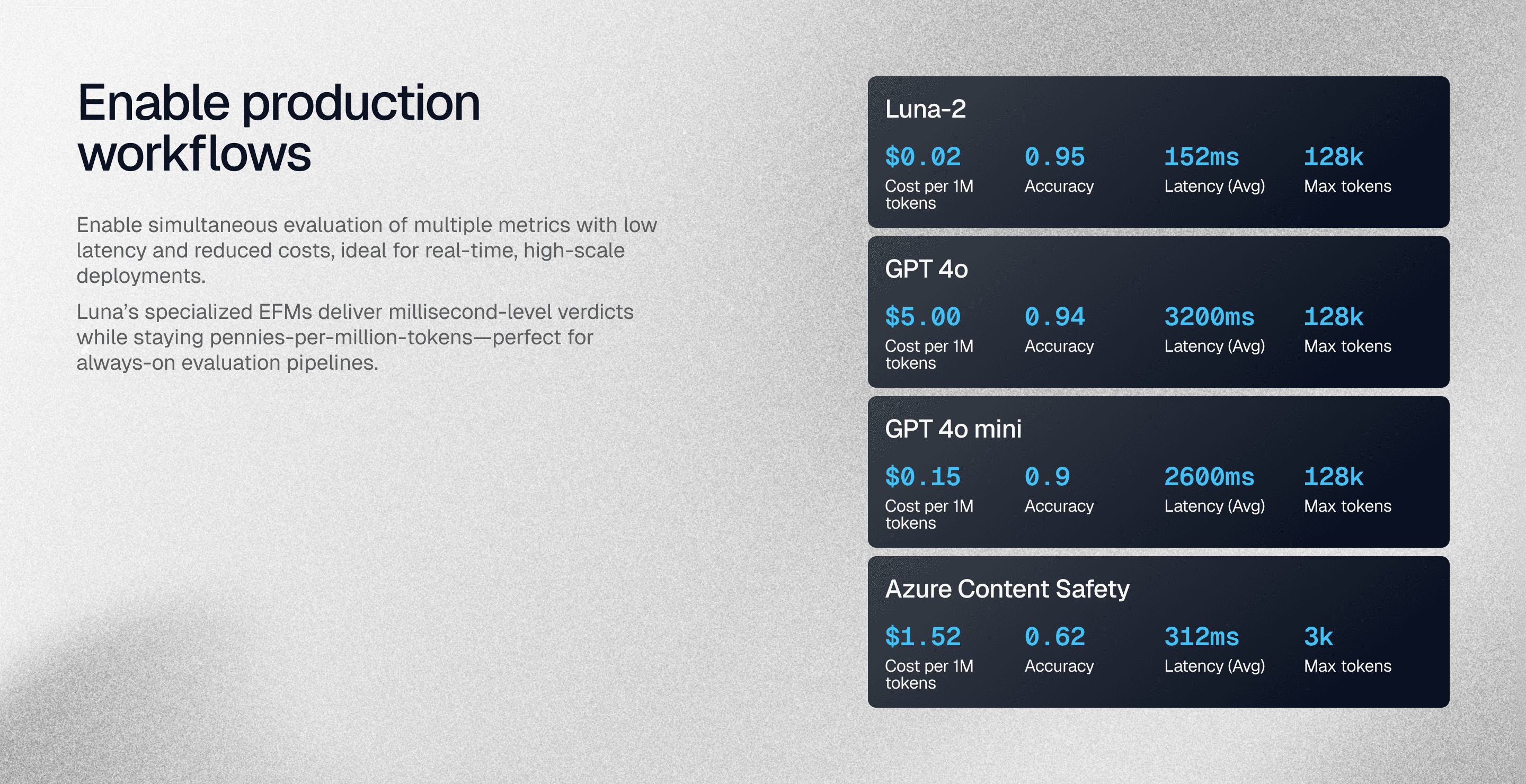

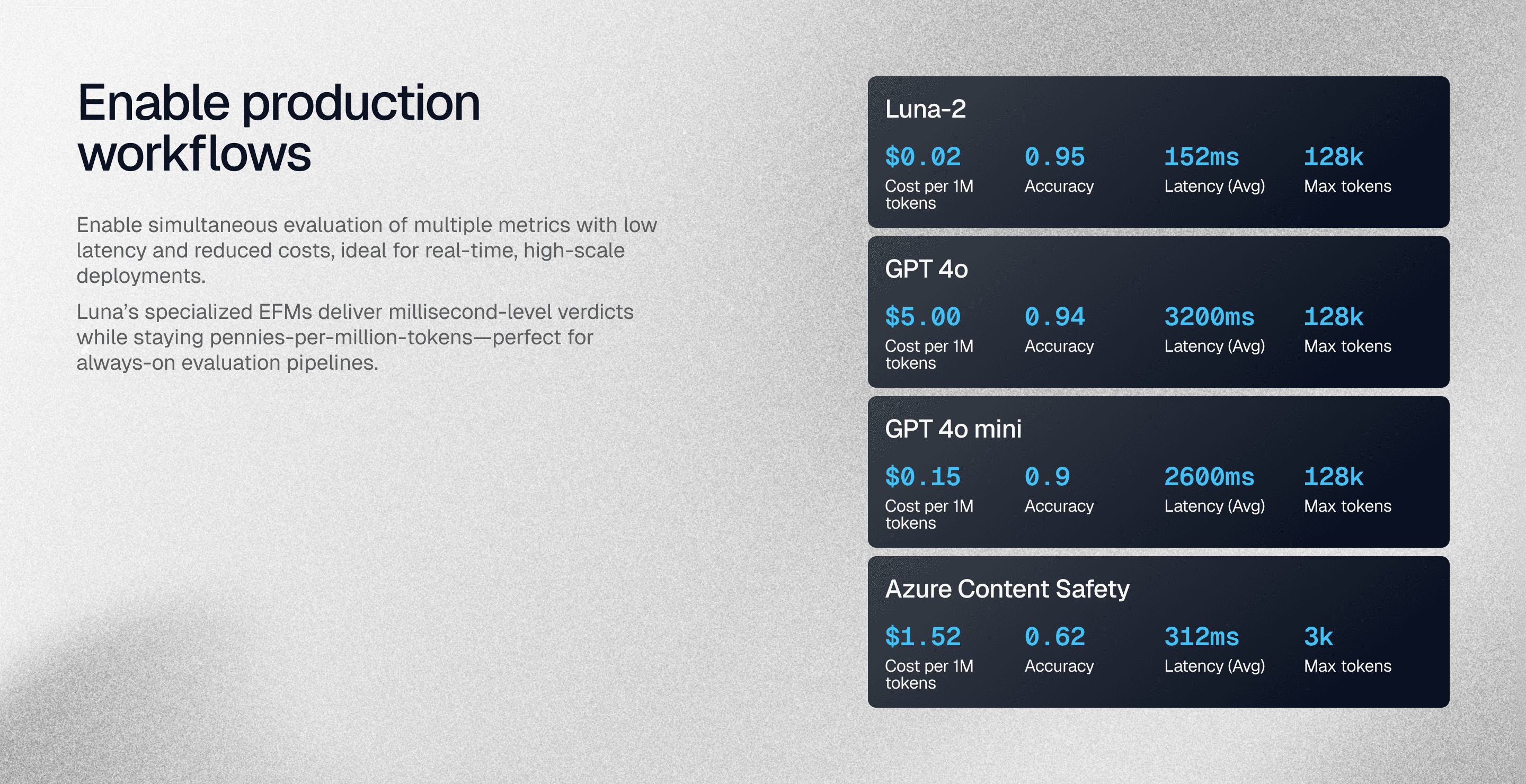

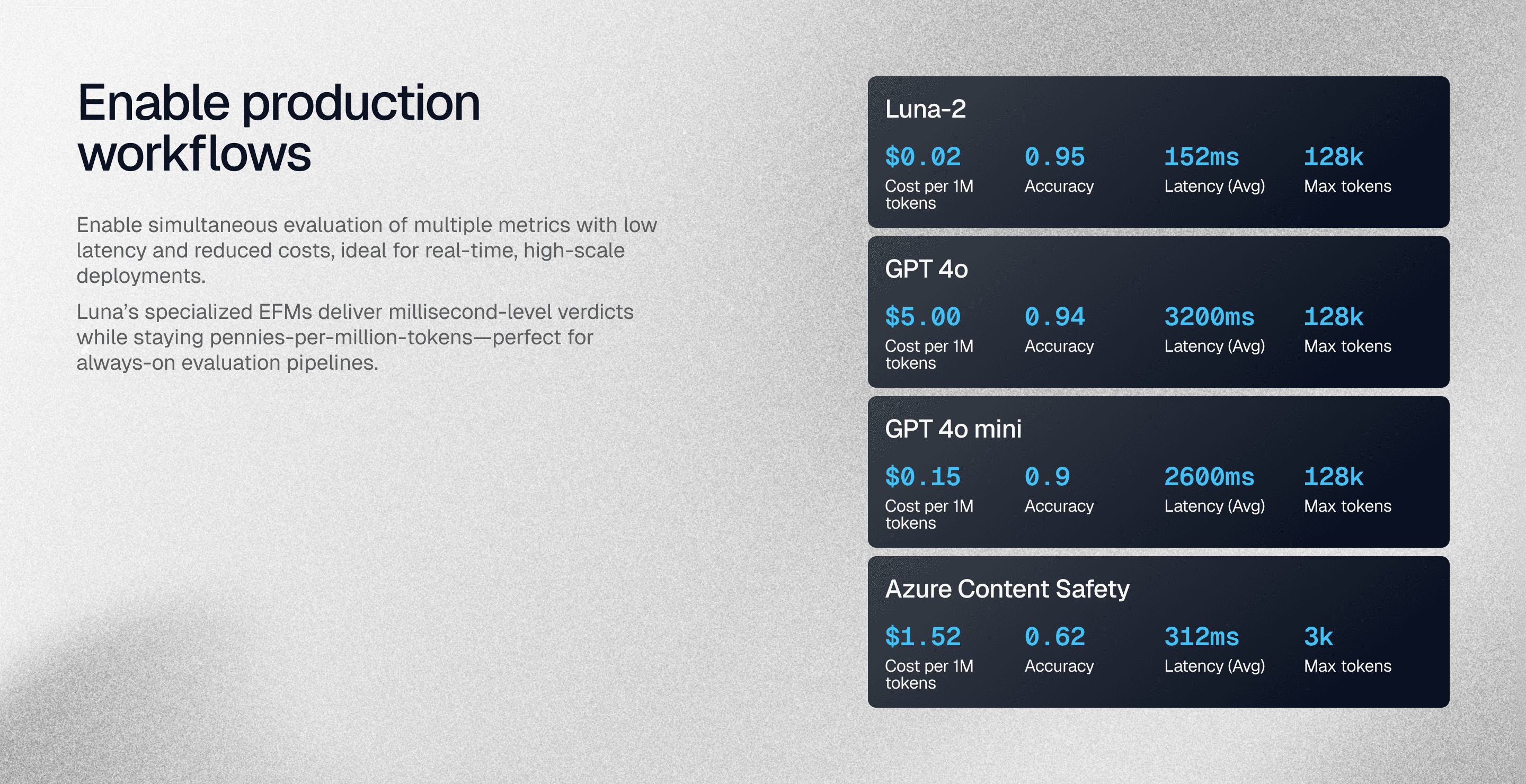

Evaluation engine | Luna-2 SLMs, sub-200ms, 97% cheaper | Generic LLM-as-judge (GPT-4) |

Synthetic data generation | Behavior profiles (injection, toxic, off-topic) | Production logs only |

Evaluator reusability | Metric Store with versioning & CLHF | Recreate prompt per project |

Runtime intervention | Inline blocking & guardrails | Logs only, no prevention |

Framework support | Framework-agnostic (CrewAI, LangGraph, custom) | LangChain-first, others need custom work |

Agent-specific metrics | 8 out-of-the-box (tool selection, flow, efficiency) | 2 basic metrics |

Span-level evaluation | Every step measured independently | Run-level only |

Session-level analysis | Multi-turn conversation tracking | No session grouping |

Scale proven | 20M+ traces/day, 50K+ live agents | 1B+ stored trace logs |

Deployment options | SaaS, hybrid, on-prem; SOC 2, ISO 27001 | Primarily SaaS; SOC 2 |

P0 incident SLA | 4 hours | Not publicly disclosed |

Metric creation time | Minutes (2-5 examples via CLHF) | Weeks (manual prompt engineering) |

Galileo gives you production-grade observability with predictable costs. LangSmith gives you excellent LangChain traces during development—but leaves gaps when you scale.

When LangSmith makes sense

LangSmith wins for specific scenarios:

Pure LangChain shops: If 90%+ of your stack is LangChain and staying that way, the auto-instrumentation is valuable. Chains, agents, and tools stream telemetry with minimal code.

Early prototyping: Pre-production teams iterating on prompts benefit from instant trace visualization. LangGraph Studio's visual editor lets you design flows and export code without leaving the browser.

Small scale: Under 1M traces monthly, LangSmith's SaaS model is plug-and-play. Token costs stay manageable when volumes are low.

Where teams outgrow it:

No runtime blocking (you'll need external guardrails)

No synthetic test data (testing in prod becomes the default)

Framework lock-in (custom orchestrators require heavy lifting)

Evaluator recreation (no metric reusability across projects)

Cost at scale (GPT-4 evals get expensive past 10M traces/month)

LangSmith is a monitoring tool masquerading as a platform. It does one thing exceptionally—trace LangChain apps—because that's table stakes. Everything else requires external tooling.

Where Galileo pulls ahead

When eval costs, framework lock-in, and reactive monitoring become bottlenecks, teams need more than traces—they need a platform that prevents failures before they ship.

Synthetic data generation (test before you ship)

LangSmith's dataset philosophy forces a dangerous catch-22: you need production logs to build evals, but you need evaluations to ship safely. This creates an endless loop of testing in production by default.

Galileo breaks this cycle by generating synthetic datasets with behavior profiles before day one—prompt injection attempts, off-topic queries, toxic content, and adversarial scenarios your users will discover, but you catch first.

Evaluator reusability (build once, deploy everywhere)

LangSmith treats every eval as a bespoke prompt engineering task. Building a "factual accuracy" checker for Project A? You do it again for Project B. And C. And D.

No evaluator library. No version control. No reusability.

However, the Galileo metric store lets you build once, refine with human feedback (CLHF), and deploy across every project. One team's "hallucination detector" becomes the entire organization's standard:

Service Titan's data architecture team runs evals across call transcription, sentiment analysis, and job value prediction—all from the same metric library. No recreating, no busywork.

BAM Elevate created a custom "tool selection quality" metric using five labeled examples. CLHF fine-tuned it to 92% accuracy in 10 minutes. They've reused it across 13 research projects and 60+ evaluation runs.

Runtime guardrails (block failures, don't just watch them)

LangSmith logs failures after they happen. A user jailbreaks your agent? Logged. PII leaks into a prompt? Traced. Toxic output ships? Recorded. You get detailed forensics but no prevention.

Galileo runtime protection scans every prompt and response in under 200ms, blocking policy violations before they reach users or databases. Luna-2 SLMs deliver this evaluation speed at 97% lower cost than GPT-4 judges, making 100% production sampling economically viable.

At GPT-4's $10 per 1M tokens, evaluating 20M daily traces costs $200K monthly. Luna-2 brings that to $6K—same accuracy, millisecond latency.

Framework flexibility (your observability layer shouldn't lock you in)

LangSmith's deep LangChain integration becomes a constraint when you need to migrate. Custom orchestrators, CrewAI, LlamaIndex, and Amazon Bedrock Agents—all require instrumentation workarounds.

Galileo provides framework-agnostic SDKs via OpenTelemetry. Instrument any agent framework—LangGraph, LlamaIndex, CrewAI, or proprietary engines—with the same telemetry layer.

Agent-specific observability (beyond chain traces)

LangSmith debugs individual chains. But multi-turn agents need session-level observability: tool selection patterns, multi-agent coordination, and recovery from failures that span multiple interactions.

Here are Galileo's advantages:

8 agentic evals out of the box vs. LangSmith's 2

Session-level tracking across multi-turn conversations

Span-level metrics on every retrieval, tool call, and generation

Insights Engine auto-clusters failure patterns and recommends fixes

Here’s what this looks like: BAM Elevate's graph-based trace views show every branch, tool call, and decision point. Hover over any node: inputs, outputs, latency, cost. Bottlenecks jump out immediately. They reduced debugging time from hours to minutes.

LangSmith shows you what happened. Galileo shows you why and how to fix it.

Galileo vs. Langsmith: Built-in capabilities and required integrations

The integration story differs dramatically between platforms - not just in complexity, but in what you get versus what you must build separately.

LangSmith

LangSmith's tight LangChain integration saves time during prototyping but creates three dependencies that teams discover at scale:

Testing in production: Without synthetic data generation, you're limited to production logs for test datasets. This forces a dangerous workflow: ship v1 with minimal testing, wait for production traffic, capture logs, build evals from logs, discover gaps, fix and repeat. You're essentially using early users as QA.

Evaluator recreation: No metric store means no version control for evaluators. Every project requires rebuilding the same "factual accuracy" checker from scratch. No way to reuse the "hallucination detector" from Project A in Project B.

Observation without intervention: When a prompt injection hits production, LangSmith provides detailed traces showing exactly what happened - comprehensive forensics, but zero prevention. You're watching failures occur, not stopping them.

A recent example: In May 2025, LangSmith experienced a 28-minute outage due to an expired SSL certificate. Traces sent during that window were dropped—not queued, not retried, just lost. The certificate had expired for 30 days before the cutoff.

Galileo

Galileo inverts these limitations by building prevention and reusability into the platform architecture:

Pre-production testing: Generate synthetic datasets with behavior profiles before day one - prompt injection attempts, off-topic queries, toxic content, and adversarial scenarios your users will discover, but you catch first. Ship with confidence instead of hoping.

Metric reusability: Galileo's metrics with CLHF transform five projects into one build and four deploys. One team's evaluator becomes everyone's standard. Reduce metric creation from weeks to minutes with version control and refinement built in.

Runtime protection: Protect scans every prompt and response in under 200ms, blocking policy violations before they reach users or databases. Prevention versus forensics - the difference between stopped incidents and detailed post-mortems.

Compliance and security

Regulators don't do warnings. They expect audit trails and immediate risk controls. Miss either, and the fine lands on you.

Galileo

Compliance infrastructure comes built-in, not as an afterthought requiring external integrations:

SOC 2 Type II and ISO 27001 certifications standard

Real-time guardrails block policy violations in under 200ms

PII detection and redaction automatic before storage

Immutable audit trails for every agent decision

Data residency controls keep records in specified regions

On-prem deployment for air-gapped environments

Finance and healthcare teams run regulated AI without inviting auditors back every sprint.

LangSmith

Security features require bolted-on tooling and custom implementations to meet compliance standards:

SOC 2 certified for security baseline

Detailed trace logs provide post-hoc visibility

No inline blocking — external guardrails required

No PII redaction — separate tooling needed

Primarily SaaS — limited on-prem options

For debugging prompt logic, LangSmith delivers. For end-to-end compliance, you're building a complete defense separately.

Galileo and Langsmith costs

Pricing isn't just the platform fee. It's the total cost of ownership.

Galileo's TCO advantage

Evaluation costs:

Luna-2 SLMs: $0.20 per 1M tokens

GPT-4: $10 per 1M tokens

97% reduction at the same accuracy

At 20M daily traces:

LangSmith (GPT-4): $200K/month

Galileo (Luna-2): $6K/month

$2.3M annual savings

Plus:

No external guardrail subscriptions

No synthetic data tooling

No metric versioning infrastructure

Drag-and-drop metric builder reduces engineering time

LangSmith's hidden costs

GPT-4 eval multiplies with every metric

External guardrails (e.g., $50K+/year for alternatives)

Synthetic data generation tools

Custom metric storage and versioning

Framework migration rewrites

The convenience of LangChain integration has a backend cost that compounds at scale.

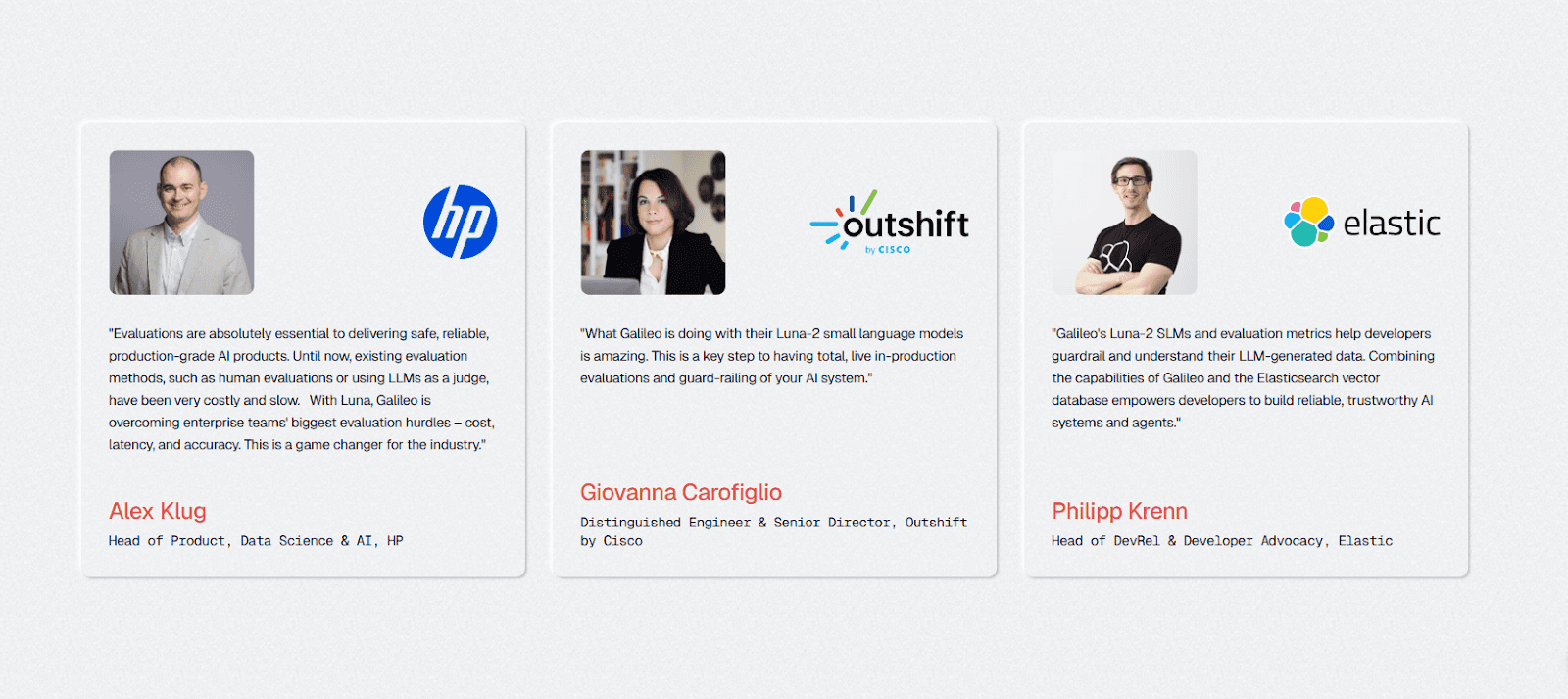

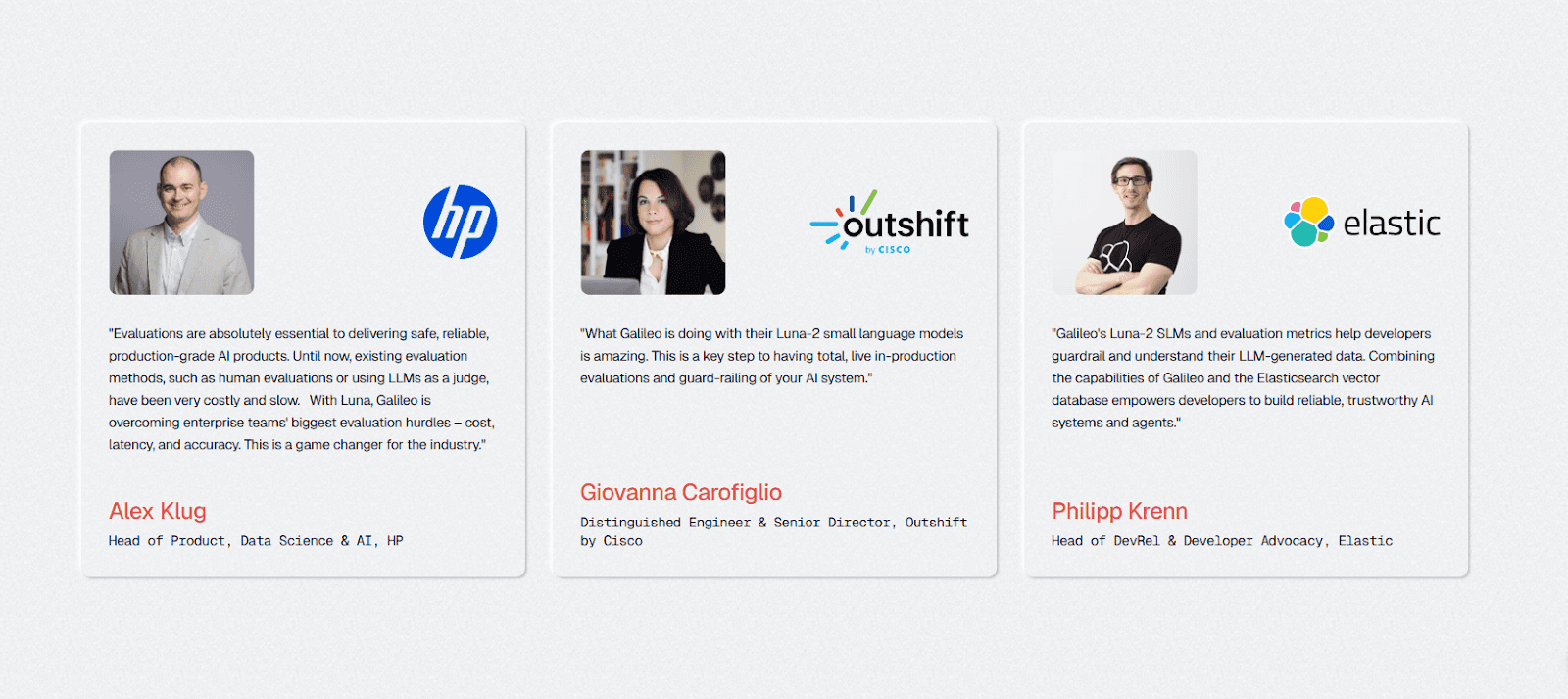

Galileo’s customer impact

Three organizations across financial intelligence, home services, and telecommunications demonstrate how production-grade observability changes not just what you can see, but what you can prevent.

BAM Elevate (Financial data intelligence)

Challenge: Evaluate 1,000 agentic workflows retrieving 100+ documents each. Traditional evals would cost $50K+/month.

Results with Galileo:

13 concurrent projects

60+ evaluation runs

5 different LLMs tested

Sub-200ms eval latency at 97% cost savings

Framework-agnostic: tested Tool Retrieval Models across GPT-4, Firefunction, and custom

"It was quite positive. We were able to get insights... Previously, we had tried other tools for evaluation and Galileo did get us pretty far." — Mano, Research Lead

Service Titan (Home services platform)

Challenge: Evaluate call transcription, sentiment analysis, and job routing across different use cases without switching tools.

Results with Galileo:

Single dashboard for 3 distinct AI systems

Custom "Expectation Adherence" metric via CLHF

Entire research team shares results via Slack integration

o1-preview evaluated within hours of announcement

Service Titan's principal data architect: "The visibility Evaluate provides is the first time this is happening for us. We can't measure what we can't see."

Fortune 50 telco (20M+ daily traces)

Challenge: Process massive scale with real-time guardrails for compliance.

Results with Galileo:

50,000+ live agents monitored

Sub-200ms inline blocking of policy violations

PII detection and redaction before storage

97% eval cost reduction enabling 100% sampling

While outages happen, the operational maturity to prevent certificate expiration—and the architecture to queue data during failures—separates platforms built for production from tools built for prototyping.

Galileo's infrastructure includes automatic failover, data queuing during incidents, and SOC 2 Type II + ISO 27001 certifications with regular audits.

Which platform fits your production roadmap?

Before choosing an observability platform, ask these questions:

"How do you generate test data before production?" Exposes whether synthetic data is built-in or you're testing in prod.

"Can I reuse evaluators across projects?" Reveals metric store capabilities vs. prompt recreation busywork.

"Can you block bad outputs at runtime?" Distinguishes guardrails from forensic logging.

"What happens if I outgrow my current framework?" Tests for framework lock-in vs. portability.

"How much time will my team spend recreating evaluators?" Quantifies the economic cost of no reusability.

"What's your operational maturity and incident history?" Separates production-ready platforms from prototyping tools.

Galileo is purpose-built for production agent reliability. Framework-agnostic. Guardrails included. When you're prototyping with LangChain, LangSmith's integration saves time. When you're shipping at scale, Galileo's platform prevents outages.

Choose Galileo when you need:

Real-time protection for regulated workloads (financial services, healthcare, PII-sensitive)

Framework flexibility to avoid orchestration lock-in

Cost-efficient scale with 20M+ traces daily

Metric reusability across teams and projects

On-prem or hybrid deployment for data residency

Sub-200ms guardrails that block failures inline

Agent-specific observability with session-level tracking

LangSmith is a feature masquerading as a platform. It traces LangChain applications beautifully. But production demands synthetic data, runtime protection, metric reusability, and framework portability.

Pick LangSmith If:

Your entire pipeline lives inside LangChain

You're in rapid prototyping (pre-production)

You prefer lightweight SaaS and can accept framework dependency

Token costs aren't a concern (<1M traces/month)

You're willing to build external guardrails separately

Evaluate your LLMs and agents with Galileo

Moving from reactive debugging to proactive quality assurance requires a platform built for production complexity.

Galileo's unified observability platform delivers:

Automated CI/CD guardrails: Block releases failing quality thresholds

Multi-dimensional evals: Luna-2 models assess correctness, toxicity, bias, and adherence at 97% lower cost

Real-time runtime protection: Scan every prompt/response, block harmful outputs before users see them

Intelligent failure detection: Insights Engine clusters failures, surfaces root causes, and recommends fixes

CLHF optimization: Transform expert reviews into reusable evaluators in minutes

Start building reliable AI agents that users trust—and stop treating production like your testing environment.

When BAM Elevate needed to evaluate 1,000 agentic workflows—some retrieving 100+ documents—they hit a wall. Traditional LLM-as-judge evals would cost $50K+ monthly at GPT-4 pricing. Latency would stretch into seconds, and framework lock-in would trap them in LangChain forever.

They needed millisecond feedback at a fraction of the cost, across any orchestration framework, with the ability to block failures before they reach production.

This is where Galileo and LangSmith diverge completely.

LangSmith is built for the "move fast and log everything" phase. It traces LangChain applications beautifully. But when you need synthetic test data, runtime guardrails, or evaluator reusability, you're building those yourself or buying additional tools.

Galileo is built for the "ship safely at scale" phase. Framework-agnostic observability, sub-200-ms inline protection. One platform that doesn't require duct tape and external services to fill gaps.

In this comparison, you’ll see how these two platforms compare across key features, technical depth, integration flexibility, security, and cost so you can select the approach that best fits your production roadmap.

Check out our Agent Leaderboard and pick the best LLM for your use case

Galileo vs. Langsmith compared across key features

When comparing observability platforms, these dimensions separate production-ready solutions from prototyping tools:

Data generation: Can you test edge cases before they appear in production, or are you limited to capturing real user interactions?

Metric reusability: Will your team rebuild the same evaluators for each project, or can you create once and deploy everywhere?

Runtime protection: Does the platform block harmful outputs inline, or only log them for post-incident review?

Framework portability: What happens when you migrate orchestration layers? Does your observability investment carry forward or require rewrites?

Operational maturity: What are the platform's SLAs, incident history, and data handling during outages?

Capability | Galileo | LangSmith |

Core focus | Agent observability + runtime protection | LLM tracing & debugging |

Evaluation engine | Luna-2 SLMs, sub-200ms, 97% cheaper | Generic LLM-as-judge (GPT-4) |

Synthetic data generation | Behavior profiles (injection, toxic, off-topic) | Production logs only |

Evaluator reusability | Metric Store with versioning & CLHF | Recreate prompt per project |

Runtime intervention | Inline blocking & guardrails | Logs only, no prevention |

Framework support | Framework-agnostic (CrewAI, LangGraph, custom) | LangChain-first, others need custom work |

Agent-specific metrics | 8 out-of-the-box (tool selection, flow, efficiency) | 2 basic metrics |

Span-level evaluation | Every step measured independently | Run-level only |

Session-level analysis | Multi-turn conversation tracking | No session grouping |

Scale proven | 20M+ traces/day, 50K+ live agents | 1B+ stored trace logs |

Deployment options | SaaS, hybrid, on-prem; SOC 2, ISO 27001 | Primarily SaaS; SOC 2 |

P0 incident SLA | 4 hours | Not publicly disclosed |

Metric creation time | Minutes (2-5 examples via CLHF) | Weeks (manual prompt engineering) |

Galileo gives you production-grade observability with predictable costs. LangSmith gives you excellent LangChain traces during development—but leaves gaps when you scale.

When LangSmith makes sense

LangSmith wins for specific scenarios:

Pure LangChain shops: If 90%+ of your stack is LangChain and staying that way, the auto-instrumentation is valuable. Chains, agents, and tools stream telemetry with minimal code.

Early prototyping: Pre-production teams iterating on prompts benefit from instant trace visualization. LangGraph Studio's visual editor lets you design flows and export code without leaving the browser.

Small scale: Under 1M traces monthly, LangSmith's SaaS model is plug-and-play. Token costs stay manageable when volumes are low.

Where teams outgrow it:

No runtime blocking (you'll need external guardrails)

No synthetic test data (testing in prod becomes the default)

Framework lock-in (custom orchestrators require heavy lifting)

Evaluator recreation (no metric reusability across projects)

Cost at scale (GPT-4 evals get expensive past 10M traces/month)

LangSmith is a monitoring tool masquerading as a platform. It does one thing exceptionally—trace LangChain apps—because that's table stakes. Everything else requires external tooling.

Where Galileo pulls ahead

When eval costs, framework lock-in, and reactive monitoring become bottlenecks, teams need more than traces—they need a platform that prevents failures before they ship.

Synthetic data generation (test before you ship)

LangSmith's dataset philosophy forces a dangerous catch-22: you need production logs to build evals, but you need evaluations to ship safely. This creates an endless loop of testing in production by default.

Galileo breaks this cycle by generating synthetic datasets with behavior profiles before day one—prompt injection attempts, off-topic queries, toxic content, and adversarial scenarios your users will discover, but you catch first.

Evaluator reusability (build once, deploy everywhere)

LangSmith treats every eval as a bespoke prompt engineering task. Building a "factual accuracy" checker for Project A? You do it again for Project B. And C. And D.

No evaluator library. No version control. No reusability.

However, the Galileo metric store lets you build once, refine with human feedback (CLHF), and deploy across every project. One team's "hallucination detector" becomes the entire organization's standard:

Service Titan's data architecture team runs evals across call transcription, sentiment analysis, and job value prediction—all from the same metric library. No recreating, no busywork.

BAM Elevate created a custom "tool selection quality" metric using five labeled examples. CLHF fine-tuned it to 92% accuracy in 10 minutes. They've reused it across 13 research projects and 60+ evaluation runs.

Runtime guardrails (block failures, don't just watch them)

LangSmith logs failures after they happen. A user jailbreaks your agent? Logged. PII leaks into a prompt? Traced. Toxic output ships? Recorded. You get detailed forensics but no prevention.

Galileo runtime protection scans every prompt and response in under 200ms, blocking policy violations before they reach users or databases. Luna-2 SLMs deliver this evaluation speed at 97% lower cost than GPT-4 judges, making 100% production sampling economically viable.

At GPT-4's $10 per 1M tokens, evaluating 20M daily traces costs $200K monthly. Luna-2 brings that to $6K—same accuracy, millisecond latency.

Framework flexibility (your observability layer shouldn't lock you in)

LangSmith's deep LangChain integration becomes a constraint when you need to migrate. Custom orchestrators, CrewAI, LlamaIndex, and Amazon Bedrock Agents—all require instrumentation workarounds.

Galileo provides framework-agnostic SDKs via OpenTelemetry. Instrument any agent framework—LangGraph, LlamaIndex, CrewAI, or proprietary engines—with the same telemetry layer.

Agent-specific observability (beyond chain traces)

LangSmith debugs individual chains. But multi-turn agents need session-level observability: tool selection patterns, multi-agent coordination, and recovery from failures that span multiple interactions.

Here are Galileo's advantages:

8 agentic evals out of the box vs. LangSmith's 2

Session-level tracking across multi-turn conversations

Span-level metrics on every retrieval, tool call, and generation

Insights Engine auto-clusters failure patterns and recommends fixes

Here’s what this looks like: BAM Elevate's graph-based trace views show every branch, tool call, and decision point. Hover over any node: inputs, outputs, latency, cost. Bottlenecks jump out immediately. They reduced debugging time from hours to minutes.

LangSmith shows you what happened. Galileo shows you why and how to fix it.

Galileo vs. Langsmith: Built-in capabilities and required integrations

The integration story differs dramatically between platforms - not just in complexity, but in what you get versus what you must build separately.

LangSmith

LangSmith's tight LangChain integration saves time during prototyping but creates three dependencies that teams discover at scale:

Testing in production: Without synthetic data generation, you're limited to production logs for test datasets. This forces a dangerous workflow: ship v1 with minimal testing, wait for production traffic, capture logs, build evals from logs, discover gaps, fix and repeat. You're essentially using early users as QA.

Evaluator recreation: No metric store means no version control for evaluators. Every project requires rebuilding the same "factual accuracy" checker from scratch. No way to reuse the "hallucination detector" from Project A in Project B.

Observation without intervention: When a prompt injection hits production, LangSmith provides detailed traces showing exactly what happened - comprehensive forensics, but zero prevention. You're watching failures occur, not stopping them.

A recent example: In May 2025, LangSmith experienced a 28-minute outage due to an expired SSL certificate. Traces sent during that window were dropped—not queued, not retried, just lost. The certificate had expired for 30 days before the cutoff.

Galileo

Galileo inverts these limitations by building prevention and reusability into the platform architecture:

Pre-production testing: Generate synthetic datasets with behavior profiles before day one - prompt injection attempts, off-topic queries, toxic content, and adversarial scenarios your users will discover, but you catch first. Ship with confidence instead of hoping.

Metric reusability: Galileo's metrics with CLHF transform five projects into one build and four deploys. One team's evaluator becomes everyone's standard. Reduce metric creation from weeks to minutes with version control and refinement built in.

Runtime protection: Protect scans every prompt and response in under 200ms, blocking policy violations before they reach users or databases. Prevention versus forensics - the difference between stopped incidents and detailed post-mortems.

Compliance and security

Regulators don't do warnings. They expect audit trails and immediate risk controls. Miss either, and the fine lands on you.

Galileo

Compliance infrastructure comes built-in, not as an afterthought requiring external integrations:

SOC 2 Type II and ISO 27001 certifications standard

Real-time guardrails block policy violations in under 200ms

PII detection and redaction automatic before storage

Immutable audit trails for every agent decision

Data residency controls keep records in specified regions

On-prem deployment for air-gapped environments

Finance and healthcare teams run regulated AI without inviting auditors back every sprint.

LangSmith

Security features require bolted-on tooling and custom implementations to meet compliance standards:

SOC 2 certified for security baseline

Detailed trace logs provide post-hoc visibility

No inline blocking — external guardrails required

No PII redaction — separate tooling needed

Primarily SaaS — limited on-prem options

For debugging prompt logic, LangSmith delivers. For end-to-end compliance, you're building a complete defense separately.

Galileo and Langsmith costs

Pricing isn't just the platform fee. It's the total cost of ownership.

Galileo's TCO advantage

Evaluation costs:

Luna-2 SLMs: $0.20 per 1M tokens

GPT-4: $10 per 1M tokens

97% reduction at the same accuracy

At 20M daily traces:

LangSmith (GPT-4): $200K/month

Galileo (Luna-2): $6K/month

$2.3M annual savings

Plus:

No external guardrail subscriptions

No synthetic data tooling

No metric versioning infrastructure

Drag-and-drop metric builder reduces engineering time

LangSmith's hidden costs

GPT-4 eval multiplies with every metric

External guardrails (e.g., $50K+/year for alternatives)

Synthetic data generation tools

Custom metric storage and versioning

Framework migration rewrites

The convenience of LangChain integration has a backend cost that compounds at scale.

Galileo’s customer impact

Three organizations across financial intelligence, home services, and telecommunications demonstrate how production-grade observability changes not just what you can see, but what you can prevent.

BAM Elevate (Financial data intelligence)

Challenge: Evaluate 1,000 agentic workflows retrieving 100+ documents each. Traditional evals would cost $50K+/month.

Results with Galileo:

13 concurrent projects

60+ evaluation runs

5 different LLMs tested

Sub-200ms eval latency at 97% cost savings

Framework-agnostic: tested Tool Retrieval Models across GPT-4, Firefunction, and custom

"It was quite positive. We were able to get insights... Previously, we had tried other tools for evaluation and Galileo did get us pretty far." — Mano, Research Lead

Service Titan (Home services platform)

Challenge: Evaluate call transcription, sentiment analysis, and job routing across different use cases without switching tools.

Results with Galileo:

Single dashboard for 3 distinct AI systems

Custom "Expectation Adherence" metric via CLHF

Entire research team shares results via Slack integration

o1-preview evaluated within hours of announcement

Service Titan's principal data architect: "The visibility Evaluate provides is the first time this is happening for us. We can't measure what we can't see."

Fortune 50 telco (20M+ daily traces)

Challenge: Process massive scale with real-time guardrails for compliance.

Results with Galileo:

50,000+ live agents monitored

Sub-200ms inline blocking of policy violations

PII detection and redaction before storage

97% eval cost reduction enabling 100% sampling

While outages happen, the operational maturity to prevent certificate expiration—and the architecture to queue data during failures—separates platforms built for production from tools built for prototyping.

Galileo's infrastructure includes automatic failover, data queuing during incidents, and SOC 2 Type II + ISO 27001 certifications with regular audits.

Which platform fits your production roadmap?

Before choosing an observability platform, ask these questions:

"How do you generate test data before production?" Exposes whether synthetic data is built-in or you're testing in prod.

"Can I reuse evaluators across projects?" Reveals metric store capabilities vs. prompt recreation busywork.

"Can you block bad outputs at runtime?" Distinguishes guardrails from forensic logging.

"What happens if I outgrow my current framework?" Tests for framework lock-in vs. portability.

"How much time will my team spend recreating evaluators?" Quantifies the economic cost of no reusability.

"What's your operational maturity and incident history?" Separates production-ready platforms from prototyping tools.

Galileo is purpose-built for production agent reliability. Framework-agnostic. Guardrails included. When you're prototyping with LangChain, LangSmith's integration saves time. When you're shipping at scale, Galileo's platform prevents outages.

Choose Galileo when you need:

Real-time protection for regulated workloads (financial services, healthcare, PII-sensitive)

Framework flexibility to avoid orchestration lock-in

Cost-efficient scale with 20M+ traces daily

Metric reusability across teams and projects

On-prem or hybrid deployment for data residency

Sub-200ms guardrails that block failures inline

Agent-specific observability with session-level tracking

LangSmith is a feature masquerading as a platform. It traces LangChain applications beautifully. But production demands synthetic data, runtime protection, metric reusability, and framework portability.

Pick LangSmith If:

Your entire pipeline lives inside LangChain

You're in rapid prototyping (pre-production)

You prefer lightweight SaaS and can accept framework dependency

Token costs aren't a concern (<1M traces/month)

You're willing to build external guardrails separately

Evaluate your LLMs and agents with Galileo

Moving from reactive debugging to proactive quality assurance requires a platform built for production complexity.

Galileo's unified observability platform delivers:

Automated CI/CD guardrails: Block releases failing quality thresholds

Multi-dimensional evals: Luna-2 models assess correctness, toxicity, bias, and adherence at 97% lower cost

Real-time runtime protection: Scan every prompt/response, block harmful outputs before users see them

Intelligent failure detection: Insights Engine clusters failures, surfaces root causes, and recommends fixes

CLHF optimization: Transform expert reviews into reusable evaluators in minutes

Start building reliable AI agents that users trust—and stop treating production like your testing environment.

When BAM Elevate needed to evaluate 1,000 agentic workflows—some retrieving 100+ documents—they hit a wall. Traditional LLM-as-judge evals would cost $50K+ monthly at GPT-4 pricing. Latency would stretch into seconds, and framework lock-in would trap them in LangChain forever.

They needed millisecond feedback at a fraction of the cost, across any orchestration framework, with the ability to block failures before they reach production.

This is where Galileo and LangSmith diverge completely.

LangSmith is built for the "move fast and log everything" phase. It traces LangChain applications beautifully. But when you need synthetic test data, runtime guardrails, or evaluator reusability, you're building those yourself or buying additional tools.

Galileo is built for the "ship safely at scale" phase. Framework-agnostic observability, sub-200-ms inline protection. One platform that doesn't require duct tape and external services to fill gaps.

In this comparison, you’ll see how these two platforms compare across key features, technical depth, integration flexibility, security, and cost so you can select the approach that best fits your production roadmap.

Check out our Agent Leaderboard and pick the best LLM for your use case

Galileo vs. Langsmith compared across key features

When comparing observability platforms, these dimensions separate production-ready solutions from prototyping tools:

Data generation: Can you test edge cases before they appear in production, or are you limited to capturing real user interactions?

Metric reusability: Will your team rebuild the same evaluators for each project, or can you create once and deploy everywhere?

Runtime protection: Does the platform block harmful outputs inline, or only log them for post-incident review?

Framework portability: What happens when you migrate orchestration layers? Does your observability investment carry forward or require rewrites?

Operational maturity: What are the platform's SLAs, incident history, and data handling during outages?

Capability | Galileo | LangSmith |

Core focus | Agent observability + runtime protection | LLM tracing & debugging |

Evaluation engine | Luna-2 SLMs, sub-200ms, 97% cheaper | Generic LLM-as-judge (GPT-4) |

Synthetic data generation | Behavior profiles (injection, toxic, off-topic) | Production logs only |

Evaluator reusability | Metric Store with versioning & CLHF | Recreate prompt per project |

Runtime intervention | Inline blocking & guardrails | Logs only, no prevention |

Framework support | Framework-agnostic (CrewAI, LangGraph, custom) | LangChain-first, others need custom work |

Agent-specific metrics | 8 out-of-the-box (tool selection, flow, efficiency) | 2 basic metrics |

Span-level evaluation | Every step measured independently | Run-level only |

Session-level analysis | Multi-turn conversation tracking | No session grouping |

Scale proven | 20M+ traces/day, 50K+ live agents | 1B+ stored trace logs |

Deployment options | SaaS, hybrid, on-prem; SOC 2, ISO 27001 | Primarily SaaS; SOC 2 |

P0 incident SLA | 4 hours | Not publicly disclosed |

Metric creation time | Minutes (2-5 examples via CLHF) | Weeks (manual prompt engineering) |

Galileo gives you production-grade observability with predictable costs. LangSmith gives you excellent LangChain traces during development—but leaves gaps when you scale.

When LangSmith makes sense

LangSmith wins for specific scenarios:

Pure LangChain shops: If 90%+ of your stack is LangChain and staying that way, the auto-instrumentation is valuable. Chains, agents, and tools stream telemetry with minimal code.

Early prototyping: Pre-production teams iterating on prompts benefit from instant trace visualization. LangGraph Studio's visual editor lets you design flows and export code without leaving the browser.

Small scale: Under 1M traces monthly, LangSmith's SaaS model is plug-and-play. Token costs stay manageable when volumes are low.

Where teams outgrow it:

No runtime blocking (you'll need external guardrails)

No synthetic test data (testing in prod becomes the default)

Framework lock-in (custom orchestrators require heavy lifting)

Evaluator recreation (no metric reusability across projects)

Cost at scale (GPT-4 evals get expensive past 10M traces/month)

LangSmith is a monitoring tool masquerading as a platform. It does one thing exceptionally—trace LangChain apps—because that's table stakes. Everything else requires external tooling.

Where Galileo pulls ahead

When eval costs, framework lock-in, and reactive monitoring become bottlenecks, teams need more than traces—they need a platform that prevents failures before they ship.

Synthetic data generation (test before you ship)

LangSmith's dataset philosophy forces a dangerous catch-22: you need production logs to build evals, but you need evaluations to ship safely. This creates an endless loop of testing in production by default.

Galileo breaks this cycle by generating synthetic datasets with behavior profiles before day one—prompt injection attempts, off-topic queries, toxic content, and adversarial scenarios your users will discover, but you catch first.

Evaluator reusability (build once, deploy everywhere)

LangSmith treats every eval as a bespoke prompt engineering task. Building a "factual accuracy" checker for Project A? You do it again for Project B. And C. And D.

No evaluator library. No version control. No reusability.

However, the Galileo metric store lets you build once, refine with human feedback (CLHF), and deploy across every project. One team's "hallucination detector" becomes the entire organization's standard:

Service Titan's data architecture team runs evals across call transcription, sentiment analysis, and job value prediction—all from the same metric library. No recreating, no busywork.

BAM Elevate created a custom "tool selection quality" metric using five labeled examples. CLHF fine-tuned it to 92% accuracy in 10 minutes. They've reused it across 13 research projects and 60+ evaluation runs.

Runtime guardrails (block failures, don't just watch them)

LangSmith logs failures after they happen. A user jailbreaks your agent? Logged. PII leaks into a prompt? Traced. Toxic output ships? Recorded. You get detailed forensics but no prevention.

Galileo runtime protection scans every prompt and response in under 200ms, blocking policy violations before they reach users or databases. Luna-2 SLMs deliver this evaluation speed at 97% lower cost than GPT-4 judges, making 100% production sampling economically viable.

At GPT-4's $10 per 1M tokens, evaluating 20M daily traces costs $200K monthly. Luna-2 brings that to $6K—same accuracy, millisecond latency.

Framework flexibility (your observability layer shouldn't lock you in)

LangSmith's deep LangChain integration becomes a constraint when you need to migrate. Custom orchestrators, CrewAI, LlamaIndex, and Amazon Bedrock Agents—all require instrumentation workarounds.

Galileo provides framework-agnostic SDKs via OpenTelemetry. Instrument any agent framework—LangGraph, LlamaIndex, CrewAI, or proprietary engines—with the same telemetry layer.

Agent-specific observability (beyond chain traces)

LangSmith debugs individual chains. But multi-turn agents need session-level observability: tool selection patterns, multi-agent coordination, and recovery from failures that span multiple interactions.

Here are Galileo's advantages:

8 agentic evals out of the box vs. LangSmith's 2

Session-level tracking across multi-turn conversations

Span-level metrics on every retrieval, tool call, and generation

Insights Engine auto-clusters failure patterns and recommends fixes

Here’s what this looks like: BAM Elevate's graph-based trace views show every branch, tool call, and decision point. Hover over any node: inputs, outputs, latency, cost. Bottlenecks jump out immediately. They reduced debugging time from hours to minutes.

LangSmith shows you what happened. Galileo shows you why and how to fix it.

Galileo vs. Langsmith: Built-in capabilities and required integrations

The integration story differs dramatically between platforms - not just in complexity, but in what you get versus what you must build separately.

LangSmith

LangSmith's tight LangChain integration saves time during prototyping but creates three dependencies that teams discover at scale:

Testing in production: Without synthetic data generation, you're limited to production logs for test datasets. This forces a dangerous workflow: ship v1 with minimal testing, wait for production traffic, capture logs, build evals from logs, discover gaps, fix and repeat. You're essentially using early users as QA.

Evaluator recreation: No metric store means no version control for evaluators. Every project requires rebuilding the same "factual accuracy" checker from scratch. No way to reuse the "hallucination detector" from Project A in Project B.

Observation without intervention: When a prompt injection hits production, LangSmith provides detailed traces showing exactly what happened - comprehensive forensics, but zero prevention. You're watching failures occur, not stopping them.

A recent example: In May 2025, LangSmith experienced a 28-minute outage due to an expired SSL certificate. Traces sent during that window were dropped—not queued, not retried, just lost. The certificate had expired for 30 days before the cutoff.

Galileo

Galileo inverts these limitations by building prevention and reusability into the platform architecture:

Pre-production testing: Generate synthetic datasets with behavior profiles before day one - prompt injection attempts, off-topic queries, toxic content, and adversarial scenarios your users will discover, but you catch first. Ship with confidence instead of hoping.

Metric reusability: Galileo's metrics with CLHF transform five projects into one build and four deploys. One team's evaluator becomes everyone's standard. Reduce metric creation from weeks to minutes with version control and refinement built in.

Runtime protection: Protect scans every prompt and response in under 200ms, blocking policy violations before they reach users or databases. Prevention versus forensics - the difference between stopped incidents and detailed post-mortems.

Compliance and security

Regulators don't do warnings. They expect audit trails and immediate risk controls. Miss either, and the fine lands on you.

Galileo

Compliance infrastructure comes built-in, not as an afterthought requiring external integrations:

SOC 2 Type II and ISO 27001 certifications standard

Real-time guardrails block policy violations in under 200ms

PII detection and redaction automatic before storage

Immutable audit trails for every agent decision

Data residency controls keep records in specified regions

On-prem deployment for air-gapped environments

Finance and healthcare teams run regulated AI without inviting auditors back every sprint.

LangSmith

Security features require bolted-on tooling and custom implementations to meet compliance standards:

SOC 2 certified for security baseline

Detailed trace logs provide post-hoc visibility

No inline blocking — external guardrails required

No PII redaction — separate tooling needed

Primarily SaaS — limited on-prem options

For debugging prompt logic, LangSmith delivers. For end-to-end compliance, you're building a complete defense separately.

Galileo and Langsmith costs

Pricing isn't just the platform fee. It's the total cost of ownership.

Galileo's TCO advantage

Evaluation costs:

Luna-2 SLMs: $0.20 per 1M tokens

GPT-4: $10 per 1M tokens

97% reduction at the same accuracy

At 20M daily traces:

LangSmith (GPT-4): $200K/month

Galileo (Luna-2): $6K/month

$2.3M annual savings

Plus:

No external guardrail subscriptions

No synthetic data tooling

No metric versioning infrastructure

Drag-and-drop metric builder reduces engineering time

LangSmith's hidden costs

GPT-4 eval multiplies with every metric

External guardrails (e.g., $50K+/year for alternatives)

Synthetic data generation tools

Custom metric storage and versioning

Framework migration rewrites

The convenience of LangChain integration has a backend cost that compounds at scale.

Galileo’s customer impact

Three organizations across financial intelligence, home services, and telecommunications demonstrate how production-grade observability changes not just what you can see, but what you can prevent.

BAM Elevate (Financial data intelligence)

Challenge: Evaluate 1,000 agentic workflows retrieving 100+ documents each. Traditional evals would cost $50K+/month.

Results with Galileo:

13 concurrent projects

60+ evaluation runs

5 different LLMs tested

Sub-200ms eval latency at 97% cost savings

Framework-agnostic: tested Tool Retrieval Models across GPT-4, Firefunction, and custom

"It was quite positive. We were able to get insights... Previously, we had tried other tools for evaluation and Galileo did get us pretty far." — Mano, Research Lead

Service Titan (Home services platform)

Challenge: Evaluate call transcription, sentiment analysis, and job routing across different use cases without switching tools.

Results with Galileo:

Single dashboard for 3 distinct AI systems

Custom "Expectation Adherence" metric via CLHF

Entire research team shares results via Slack integration

o1-preview evaluated within hours of announcement

Service Titan's principal data architect: "The visibility Evaluate provides is the first time this is happening for us. We can't measure what we can't see."

Fortune 50 telco (20M+ daily traces)

Challenge: Process massive scale with real-time guardrails for compliance.

Results with Galileo:

50,000+ live agents monitored

Sub-200ms inline blocking of policy violations

PII detection and redaction before storage

97% eval cost reduction enabling 100% sampling

While outages happen, the operational maturity to prevent certificate expiration—and the architecture to queue data during failures—separates platforms built for production from tools built for prototyping.

Galileo's infrastructure includes automatic failover, data queuing during incidents, and SOC 2 Type II + ISO 27001 certifications with regular audits.

Which platform fits your production roadmap?

Before choosing an observability platform, ask these questions:

"How do you generate test data before production?" Exposes whether synthetic data is built-in or you're testing in prod.

"Can I reuse evaluators across projects?" Reveals metric store capabilities vs. prompt recreation busywork.

"Can you block bad outputs at runtime?" Distinguishes guardrails from forensic logging.

"What happens if I outgrow my current framework?" Tests for framework lock-in vs. portability.

"How much time will my team spend recreating evaluators?" Quantifies the economic cost of no reusability.

"What's your operational maturity and incident history?" Separates production-ready platforms from prototyping tools.

Galileo is purpose-built for production agent reliability. Framework-agnostic. Guardrails included. When you're prototyping with LangChain, LangSmith's integration saves time. When you're shipping at scale, Galileo's platform prevents outages.

Choose Galileo when you need:

Real-time protection for regulated workloads (financial services, healthcare, PII-sensitive)

Framework flexibility to avoid orchestration lock-in

Cost-efficient scale with 20M+ traces daily

Metric reusability across teams and projects

On-prem or hybrid deployment for data residency

Sub-200ms guardrails that block failures inline

Agent-specific observability with session-level tracking

LangSmith is a feature masquerading as a platform. It traces LangChain applications beautifully. But production demands synthetic data, runtime protection, metric reusability, and framework portability.

Pick LangSmith If:

Your entire pipeline lives inside LangChain

You're in rapid prototyping (pre-production)

You prefer lightweight SaaS and can accept framework dependency

Token costs aren't a concern (<1M traces/month)

You're willing to build external guardrails separately

Evaluate your LLMs and agents with Galileo

Moving from reactive debugging to proactive quality assurance requires a platform built for production complexity.

Galileo's unified observability platform delivers:

Automated CI/CD guardrails: Block releases failing quality thresholds

Multi-dimensional evals: Luna-2 models assess correctness, toxicity, bias, and adherence at 97% lower cost

Real-time runtime protection: Scan every prompt/response, block harmful outputs before users see them

Intelligent failure detection: Insights Engine clusters failures, surfaces root causes, and recommends fixes

CLHF optimization: Transform expert reviews into reusable evaluators in minutes

Start building reliable AI agents that users trust—and stop treating production like your testing environment.

When BAM Elevate needed to evaluate 1,000 agentic workflows—some retrieving 100+ documents—they hit a wall. Traditional LLM-as-judge evals would cost $50K+ monthly at GPT-4 pricing. Latency would stretch into seconds, and framework lock-in would trap them in LangChain forever.

They needed millisecond feedback at a fraction of the cost, across any orchestration framework, with the ability to block failures before they reach production.

This is where Galileo and LangSmith diverge completely.

LangSmith is built for the "move fast and log everything" phase. It traces LangChain applications beautifully. But when you need synthetic test data, runtime guardrails, or evaluator reusability, you're building those yourself or buying additional tools.

Galileo is built for the "ship safely at scale" phase. Framework-agnostic observability, sub-200-ms inline protection. One platform that doesn't require duct tape and external services to fill gaps.

In this comparison, you’ll see how these two platforms compare across key features, technical depth, integration flexibility, security, and cost so you can select the approach that best fits your production roadmap.

Check out our Agent Leaderboard and pick the best LLM for your use case

Galileo vs. Langsmith compared across key features

When comparing observability platforms, these dimensions separate production-ready solutions from prototyping tools:

Data generation: Can you test edge cases before they appear in production, or are you limited to capturing real user interactions?

Metric reusability: Will your team rebuild the same evaluators for each project, or can you create once and deploy everywhere?

Runtime protection: Does the platform block harmful outputs inline, or only log them for post-incident review?

Framework portability: What happens when you migrate orchestration layers? Does your observability investment carry forward or require rewrites?

Operational maturity: What are the platform's SLAs, incident history, and data handling during outages?

Capability | Galileo | LangSmith |

Core focus | Agent observability + runtime protection | LLM tracing & debugging |

Evaluation engine | Luna-2 SLMs, sub-200ms, 97% cheaper | Generic LLM-as-judge (GPT-4) |

Synthetic data generation | Behavior profiles (injection, toxic, off-topic) | Production logs only |

Evaluator reusability | Metric Store with versioning & CLHF | Recreate prompt per project |

Runtime intervention | Inline blocking & guardrails | Logs only, no prevention |

Framework support | Framework-agnostic (CrewAI, LangGraph, custom) | LangChain-first, others need custom work |

Agent-specific metrics | 8 out-of-the-box (tool selection, flow, efficiency) | 2 basic metrics |

Span-level evaluation | Every step measured independently | Run-level only |

Session-level analysis | Multi-turn conversation tracking | No session grouping |

Scale proven | 20M+ traces/day, 50K+ live agents | 1B+ stored trace logs |

Deployment options | SaaS, hybrid, on-prem; SOC 2, ISO 27001 | Primarily SaaS; SOC 2 |

P0 incident SLA | 4 hours | Not publicly disclosed |

Metric creation time | Minutes (2-5 examples via CLHF) | Weeks (manual prompt engineering) |

Galileo gives you production-grade observability with predictable costs. LangSmith gives you excellent LangChain traces during development—but leaves gaps when you scale.

When LangSmith makes sense

LangSmith wins for specific scenarios:

Pure LangChain shops: If 90%+ of your stack is LangChain and staying that way, the auto-instrumentation is valuable. Chains, agents, and tools stream telemetry with minimal code.

Early prototyping: Pre-production teams iterating on prompts benefit from instant trace visualization. LangGraph Studio's visual editor lets you design flows and export code without leaving the browser.

Small scale: Under 1M traces monthly, LangSmith's SaaS model is plug-and-play. Token costs stay manageable when volumes are low.

Where teams outgrow it:

No runtime blocking (you'll need external guardrails)

No synthetic test data (testing in prod becomes the default)

Framework lock-in (custom orchestrators require heavy lifting)

Evaluator recreation (no metric reusability across projects)

Cost at scale (GPT-4 evals get expensive past 10M traces/month)

LangSmith is a monitoring tool masquerading as a platform. It does one thing exceptionally—trace LangChain apps—because that's table stakes. Everything else requires external tooling.

Where Galileo pulls ahead

When eval costs, framework lock-in, and reactive monitoring become bottlenecks, teams need more than traces—they need a platform that prevents failures before they ship.

Synthetic data generation (test before you ship)

LangSmith's dataset philosophy forces a dangerous catch-22: you need production logs to build evals, but you need evaluations to ship safely. This creates an endless loop of testing in production by default.

Galileo breaks this cycle by generating synthetic datasets with behavior profiles before day one—prompt injection attempts, off-topic queries, toxic content, and adversarial scenarios your users will discover, but you catch first.

Evaluator reusability (build once, deploy everywhere)

LangSmith treats every eval as a bespoke prompt engineering task. Building a "factual accuracy" checker for Project A? You do it again for Project B. And C. And D.

No evaluator library. No version control. No reusability.

However, the Galileo metric store lets you build once, refine with human feedback (CLHF), and deploy across every project. One team's "hallucination detector" becomes the entire organization's standard:

Service Titan's data architecture team runs evals across call transcription, sentiment analysis, and job value prediction—all from the same metric library. No recreating, no busywork.

BAM Elevate created a custom "tool selection quality" metric using five labeled examples. CLHF fine-tuned it to 92% accuracy in 10 minutes. They've reused it across 13 research projects and 60+ evaluation runs.

Runtime guardrails (block failures, don't just watch them)

LangSmith logs failures after they happen. A user jailbreaks your agent? Logged. PII leaks into a prompt? Traced. Toxic output ships? Recorded. You get detailed forensics but no prevention.

Galileo runtime protection scans every prompt and response in under 200ms, blocking policy violations before they reach users or databases. Luna-2 SLMs deliver this evaluation speed at 97% lower cost than GPT-4 judges, making 100% production sampling economically viable.

At GPT-4's $10 per 1M tokens, evaluating 20M daily traces costs $200K monthly. Luna-2 brings that to $6K—same accuracy, millisecond latency.

Framework flexibility (your observability layer shouldn't lock you in)

LangSmith's deep LangChain integration becomes a constraint when you need to migrate. Custom orchestrators, CrewAI, LlamaIndex, and Amazon Bedrock Agents—all require instrumentation workarounds.

Galileo provides framework-agnostic SDKs via OpenTelemetry. Instrument any agent framework—LangGraph, LlamaIndex, CrewAI, or proprietary engines—with the same telemetry layer.

Agent-specific observability (beyond chain traces)

LangSmith debugs individual chains. But multi-turn agents need session-level observability: tool selection patterns, multi-agent coordination, and recovery from failures that span multiple interactions.

Here are Galileo's advantages:

8 agentic evals out of the box vs. LangSmith's 2

Session-level tracking across multi-turn conversations

Span-level metrics on every retrieval, tool call, and generation

Insights Engine auto-clusters failure patterns and recommends fixes

Here’s what this looks like: BAM Elevate's graph-based trace views show every branch, tool call, and decision point. Hover over any node: inputs, outputs, latency, cost. Bottlenecks jump out immediately. They reduced debugging time from hours to minutes.

LangSmith shows you what happened. Galileo shows you why and how to fix it.

Galileo vs. Langsmith: Built-in capabilities and required integrations

The integration story differs dramatically between platforms - not just in complexity, but in what you get versus what you must build separately.

LangSmith

LangSmith's tight LangChain integration saves time during prototyping but creates three dependencies that teams discover at scale:

Testing in production: Without synthetic data generation, you're limited to production logs for test datasets. This forces a dangerous workflow: ship v1 with minimal testing, wait for production traffic, capture logs, build evals from logs, discover gaps, fix and repeat. You're essentially using early users as QA.

Evaluator recreation: No metric store means no version control for evaluators. Every project requires rebuilding the same "factual accuracy" checker from scratch. No way to reuse the "hallucination detector" from Project A in Project B.

Observation without intervention: When a prompt injection hits production, LangSmith provides detailed traces showing exactly what happened - comprehensive forensics, but zero prevention. You're watching failures occur, not stopping them.

A recent example: In May 2025, LangSmith experienced a 28-minute outage due to an expired SSL certificate. Traces sent during that window were dropped—not queued, not retried, just lost. The certificate had expired for 30 days before the cutoff.

Galileo

Galileo inverts these limitations by building prevention and reusability into the platform architecture:

Pre-production testing: Generate synthetic datasets with behavior profiles before day one - prompt injection attempts, off-topic queries, toxic content, and adversarial scenarios your users will discover, but you catch first. Ship with confidence instead of hoping.

Metric reusability: Galileo's metrics with CLHF transform five projects into one build and four deploys. One team's evaluator becomes everyone's standard. Reduce metric creation from weeks to minutes with version control and refinement built in.

Runtime protection: Protect scans every prompt and response in under 200ms, blocking policy violations before they reach users or databases. Prevention versus forensics - the difference between stopped incidents and detailed post-mortems.

Compliance and security

Regulators don't do warnings. They expect audit trails and immediate risk controls. Miss either, and the fine lands on you.

Galileo

Compliance infrastructure comes built-in, not as an afterthought requiring external integrations:

SOC 2 Type II and ISO 27001 certifications standard

Real-time guardrails block policy violations in under 200ms

PII detection and redaction automatic before storage

Immutable audit trails for every agent decision

Data residency controls keep records in specified regions

On-prem deployment for air-gapped environments

Finance and healthcare teams run regulated AI without inviting auditors back every sprint.

LangSmith

Security features require bolted-on tooling and custom implementations to meet compliance standards:

SOC 2 certified for security baseline

Detailed trace logs provide post-hoc visibility

No inline blocking — external guardrails required

No PII redaction — separate tooling needed

Primarily SaaS — limited on-prem options

For debugging prompt logic, LangSmith delivers. For end-to-end compliance, you're building a complete defense separately.

Galileo and Langsmith costs

Pricing isn't just the platform fee. It's the total cost of ownership.

Galileo's TCO advantage

Evaluation costs:

Luna-2 SLMs: $0.20 per 1M tokens

GPT-4: $10 per 1M tokens

97% reduction at the same accuracy

At 20M daily traces:

LangSmith (GPT-4): $200K/month

Galileo (Luna-2): $6K/month

$2.3M annual savings

Plus:

No external guardrail subscriptions

No synthetic data tooling

No metric versioning infrastructure

Drag-and-drop metric builder reduces engineering time

LangSmith's hidden costs

GPT-4 eval multiplies with every metric

External guardrails (e.g., $50K+/year for alternatives)

Synthetic data generation tools

Custom metric storage and versioning

Framework migration rewrites

The convenience of LangChain integration has a backend cost that compounds at scale.

Galileo’s customer impact

Three organizations across financial intelligence, home services, and telecommunications demonstrate how production-grade observability changes not just what you can see, but what you can prevent.

BAM Elevate (Financial data intelligence)

Challenge: Evaluate 1,000 agentic workflows retrieving 100+ documents each. Traditional evals would cost $50K+/month.

Results with Galileo:

13 concurrent projects

60+ evaluation runs

5 different LLMs tested

Sub-200ms eval latency at 97% cost savings

Framework-agnostic: tested Tool Retrieval Models across GPT-4, Firefunction, and custom

"It was quite positive. We were able to get insights... Previously, we had tried other tools for evaluation and Galileo did get us pretty far." — Mano, Research Lead

Service Titan (Home services platform)

Challenge: Evaluate call transcription, sentiment analysis, and job routing across different use cases without switching tools.

Results with Galileo:

Single dashboard for 3 distinct AI systems

Custom "Expectation Adherence" metric via CLHF

Entire research team shares results via Slack integration

o1-preview evaluated within hours of announcement

Service Titan's principal data architect: "The visibility Evaluate provides is the first time this is happening for us. We can't measure what we can't see."

Fortune 50 telco (20M+ daily traces)

Challenge: Process massive scale with real-time guardrails for compliance.

Results with Galileo:

50,000+ live agents monitored

Sub-200ms inline blocking of policy violations

PII detection and redaction before storage

97% eval cost reduction enabling 100% sampling

While outages happen, the operational maturity to prevent certificate expiration—and the architecture to queue data during failures—separates platforms built for production from tools built for prototyping.

Galileo's infrastructure includes automatic failover, data queuing during incidents, and SOC 2 Type II + ISO 27001 certifications with regular audits.

Which platform fits your production roadmap?

Before choosing an observability platform, ask these questions:

"How do you generate test data before production?" Exposes whether synthetic data is built-in or you're testing in prod.

"Can I reuse evaluators across projects?" Reveals metric store capabilities vs. prompt recreation busywork.

"Can you block bad outputs at runtime?" Distinguishes guardrails from forensic logging.

"What happens if I outgrow my current framework?" Tests for framework lock-in vs. portability.

"How much time will my team spend recreating evaluators?" Quantifies the economic cost of no reusability.

"What's your operational maturity and incident history?" Separates production-ready platforms from prototyping tools.

Galileo is purpose-built for production agent reliability. Framework-agnostic. Guardrails included. When you're prototyping with LangChain, LangSmith's integration saves time. When you're shipping at scale, Galileo's platform prevents outages.

Choose Galileo when you need:

Real-time protection for regulated workloads (financial services, healthcare, PII-sensitive)

Framework flexibility to avoid orchestration lock-in

Cost-efficient scale with 20M+ traces daily

Metric reusability across teams and projects

On-prem or hybrid deployment for data residency

Sub-200ms guardrails that block failures inline

Agent-specific observability with session-level tracking

LangSmith is a feature masquerading as a platform. It traces LangChain applications beautifully. But production demands synthetic data, runtime protection, metric reusability, and framework portability.

Pick LangSmith If:

Your entire pipeline lives inside LangChain

You're in rapid prototyping (pre-production)

You prefer lightweight SaaS and can accept framework dependency

Token costs aren't a concern (<1M traces/month)

You're willing to build external guardrails separately

Evaluate your LLMs and agents with Galileo

Moving from reactive debugging to proactive quality assurance requires a platform built for production complexity.

Galileo's unified observability platform delivers:

Automated CI/CD guardrails: Block releases failing quality thresholds

Multi-dimensional evals: Luna-2 models assess correctness, toxicity, bias, and adherence at 97% lower cost

Real-time runtime protection: Scan every prompt/response, block harmful outputs before users see them

Intelligent failure detection: Insights Engine clusters failures, surfaces root causes, and recommends fixes

CLHF optimization: Transform expert reviews into reusable evaluators in minutes

Start building reliable AI agents that users trust—and stop treating production like your testing environment.

Conor Bronsdon