Sep 27, 2025

How to Evaluate Large Language Models

The global large language models market size was estimated at USD 5.6 billion in 2024 and is projected to reach USD 35.4 billion by 2030, growing at a CAGR of 36.9% from 2025 to 2030. With this explosive growth comes unprecedented risk—a single hallucinating agent can damage brand reputation, leak sensitive data, or create regulatory exposure worth millions.

The stakes couldn't be higher, especially for enterprises in regulated industries where AI failures cascade beyond technical issues into business-threatening crises.

You probably recognize this paradox: you need to move quickly with AI adoption to maintain competitive advantage, but traditional evaluation methods don't adequately mitigate the unique risks of modern LLMs and autonomous agents.

This comprehensive guide provides a structured framework to evaluate LLMs at scale. We'll walk through seven critical steps that balance technical performance with business objectives, helping you build confidence in your AI systems while accelerating deployment.

We recently explored this topic on our Chain of Thought podcast, where industry experts shared practical insights and real-world implementation strategies.

What is LLM eval?

LLM eval is the systematic process of assessing language model performance across multiple dimensions to ensure models meet both technical specifications and business requirements. Unlike traditional ML evals that focus on simple accuracy metrics, effective LLM evaluation requires a multifaceted approach addressing unique challenges:

Non-deterministic outputs: LLMs generate different responses to identical inputs

Subjective quality assessment: Many aspects require nuanced human-like judgment

Complex failure modes: Issues like hallucinations aren't easily captured by single metrics

Business alignment: Technical excellence means nothing without solving real problems

Modern LLM evaluation frameworks must span the entire AI lifecycle, from development through deployment and continuous monitoring. They require both quantitative metrics and qualitative assessment methods, combining automated evaluation with human judgment where appropriate.

With this foundation established, let's explore the step-by-step framework for implementing comprehensive LLM evaluation in your enterprise.

LLM evaluation step #1: Create a strategic evaluation framework

You might be tempted to jump straight to technical metrics, but this common mistake leads to a fundamental disconnect between your AI systems and actual business value. Many teams build impressive models that fail to solve real problems because their evaluation framework lacks strategic alignment with organizational objectives.

A McKinsey study emphasized that AI systems lacking transparent evaluation frameworks often fail to achieve anticipated productivity gains. The same disconnect explains why many teams struggle to justify AI investments: technical metrics don't translate into business impact that executives understand.

The most effective evaluation frameworks start by identifying your organization's strategic priorities—whether improving customer satisfaction, decreasing operational costs, or accelerating product development.

These priorities should directly inform what you measure. For customer-facing assistants, track metrics like CSAT scores, first-contact resolution rates, and support ticket volume reduction.

Purpose-built evaluation platforms like Galileo help bridge this gap by enabling you to create custom metrics aligned with specific business objectives. These capabilities allow you to develop a balanced scorecard approach that evaluates models across key dimensions:

Agentic flows

Expression/readability

Model confidence

Response quality

Safety/compliance
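To make the scorecard idea concrete, here is a minimal sketch that combines per-dimension scores into one weighted number. It assumes every dimension has already been scored on a 0 to 1 scale; the dimension keys, the weights, and the `scorecard`/`passes` helpers are illustrative and not part of any Galileo API. Treating safety as a hard gate rather than just another weighted term is one design choice among several.

```python
from typing import Mapping

# Illustrative weights for the five dimensions listed above; tune them to your priorities.
WEIGHTS = {
    "agentic_flows": 0.25,
    "expression_readability": 0.10,
    "model_confidence": 0.15,
    "response_quality": 0.30,
    "safety_compliance": 0.20,
}


def scorecard(scores: Mapping[str, float], weights: Mapping[str, float] = WEIGHTS) -> float:
    """Weighted average of per-dimension scores, each assumed to lie in [0, 1]."""
    missing = set(weights) - set(scores)
    if missing:
        raise ValueError(f"missing dimensions: {sorted(missing)}")
    if abs(sum(weights.values()) - 1.0) > 1e-9:
        raise ValueError("weights must sum to 1.0")
    return sum(weights[dim] * scores[dim] for dim in weights)


def passes(scores: Mapping[str, float], min_overall: float = 0.8, min_safety: float = 0.95) -> bool:
    """Safety is a hard gate: a strong overall score cannot offset a safety shortfall."""
    return scores["safety_compliance"] >= min_safety and scorecard(scores) >= min_overall


candidate = {
    "agentic_flows": 0.8,
    "expression_readability": 0.8,
    "model_confidence": 0.8,
    "response_quality": 0.8,
    "safety_compliance": 1.0,
}
print(round(scorecard(candidate), 2), passes(candidate))
```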

Rather than vague goals like "improve customer experience," establish concrete metrics such as "increase NPS by 15 points" or "reduce task completion time by 30%." This specificity creates accountability and makes ROI calculation straightforward through measurable cost savings, revenue gains, or productivity improvements.
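Targets like these are easy to check mechanically once the metric is defined. The sketch below uses the standard Net Promoter Score definition (percentage of promoters rating 9 or 10 minus percentage of detractors rating 0 to 6) and a percent reduction in mean task time; the helper names, the survey ratings, and the timings are made-up examples.

```python
from statistics import mean


def nps(ratings: list[int]) -> float:
    """Net Promoter Score on a -100..100 scale from 0-10 survey ratings."""
    if not ratings:
        raise ValueError("no ratings")
    promoters = sum(1 for r in ratings if r >= 9)
    detractors = sum(1 for r in ratings if r <= 6)
    return 100.0 * (promoters - detractors) / len(ratings)


def pct_reduction(before: list[float], after: list[float]) -> float:
    """Percent reduction in mean task completion time (positive means faster)."""
    baseline = mean(before)
    return 100.0 * (baseline - mean(after)) / baseline


def meets_targets(nps_before, nps_after, time_before, time_after,
                  nps_gain_target=15.0, time_cut_target=30.0) -> dict:
    """Compare measured changes against the concrete goals (NPS +15 points, time -30%)."""
    gain = nps(nps_after) - nps(nps_before)
    cut = pct_reduction(time_before, time_after)
    return {"nps_gain": gain, "nps_ok": gain >= nps_gain_target,
            "time_cut_pct": cut, "time_ok": cut >= time_cut_target}


# Made-up sample data: survey ratings and task times (seconds) before and after launch.
report = meets_targets([10, 9, 7, 6, 3], [10, 10, 9, 7, 5], [10, 12, 14], [8, 8, 8])
print(report)
```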

LLM evaluation step #2: Combine online and offline evaluation

Your AI systems face a challenging reality: controlled testing environments rarely match the complexity of real-world interactions. Many teams fall into the trap of over-relying on either pre-deployment benchmarks or post-deployment user feedback, missing critical evaluation opportunities throughout the AI lifecycle.

In benchmark-obsessed organizations, models perform beautifully on standard test sets but crumble when facing unexpected user queries. Conversely, teams that only react to production feedback often discover issues too late, after they've already impacted customers and business reputation.

The solution lies in implementing a balanced approach:

Offline evaluation: Takes place in controlled settings using benchmarks, test suites, and synthetic datasets. This approach helps identify specific capabilities and limitations without exposing users to potential issues. You can systematically test for hallucinations, bias, and task-specific performance before deployment.

Online evaluation: Occurs in production environments through A/B testing, user feedback collection, and real-time monitoring. This reveals how your LLM performs with actual users and real-world queries, uncovering issues that controlled tests might miss.
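On the A/B-testing side of online evaluation, a common question is whether a difference in a binary feedback signal, such as the thumbs-up rate between two variants, is more than noise. This sketch applies a standard two-proportion z-test using only the Python standard library; the counts are hypothetical. Repeatedly checking the p-value while a test is still running inflates false positives, so fix the sample size (or use a sequential method) in advance.

```python
import math


def two_proportion_z_test(success_a: int, n_a: int, success_b: int, n_b: int) -> tuple[float, float]:
    """Two-sided z-test for a difference between two rates, e.g. thumbs-up rates of variants A and B.

    Returns (z, p_value); a positive z means variant B's rate is higher.
    """
    p_a, p_b = success_a / n_a, success_b / n_b
    pooled = (success_a + success_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        return 0.0, 1.0  # both variants all-success or all-failure: no evidence of a difference
    z = (p_b - p_a) / se
    # Standard normal CDF via erf: Phi(x) = 0.5 * (1 + erf(x / sqrt(2))).
    p_value = 2 * (1 - 0.5 * (1 + math.erf(abs(z) / math.sqrt(2))))
    return z, p_value


# Hypothetical counts: 420/1000 thumbs-up for the current prompt, 470/1000 for the candidate.
z, p = two_proportion_z_test(420, 1000, 470, 1000)
print(f"z={z:.2f}, p={p:.3f}")
```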

An experimentation workflow enables systematic offline evaluation by testing against datasets with predefined inputs and expected outputs. You can compare different models, prompts, or configurations and identify the best-performing variation across your metrics before deployment.
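At its core, an offline experiment runs every variant over the same dataset and scores the outputs with the same metric. The sketch below uses a simple lenient-match metric (the expected answer or an accepted alternative, ignoring case and surrounding whitespace); the dataset and the two variant functions are stand-ins for real model or prompt configurations, and the helper names are hypothetical rather than Galileo's experimentation API.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class Example:
    input: str
    expected: str
    alternatives: list[str] = field(default_factory=list)


def matches(output: str, ex: Example) -> bool:
    """Lenient check: output equals the expected answer or an accepted alternative."""
    accepted = {a.strip().lower() for a in [ex.expected, *ex.alternatives]}
    return output.strip().lower() in accepted


def run_experiment(variants: dict[str, Callable[[str], str]], dataset: list[Example]) -> dict[str, float]:
    """Accuracy of each variant (model, prompt, or config) on the same fixed dataset."""
    return {
        name: sum(matches(generate(ex.input), ex) for ex in dataset) / len(dataset)
        for name, generate in variants.items()
    }


dataset = [
    Example("Capital of France?", "Paris"),
    Example("2 + 2?", "4", alternatives=["four"]),
    Example("Largest planet?", "Jupiter"),
]

# Stand-ins for two prompt variants; in practice each would call a model.
v1_answers = {"Capital of France?": "paris", "2 + 2?": "5", "Largest planet?": "Jupiter"}
v2_answers = {"Capital of France?": "Paris ", "2 + 2?": "Four", "Largest planet?": "Jupiter"}
results = run_experiment({"prompt_v1": v1_answers.get, "prompt_v2": v2_answers.get}, dataset)
best = max(results, key=results.get)
print(results, best)
```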

LLM evaluation step #3: Build comprehensive evaluation datasets

You've likely experienced the frustration of models that perform well on standard benchmarks but struggle with your specific use cases. This disconnect happens because generic datasets fail to capture the unique challenges of your business domain and user interactions.

Many teams compound this problem by creating eval datasets that lack diversity, focusing on common cases while neglecting edge scenarios that often cause the most damaging failures in production. When edge cases emerge in the real world, these teams find themselves scrambling to understand what went wrong.

Creating effective eval datasets requires deliberate curation. Start by collecting examples that mirror real-world usage patterns across your intended application domains. This should include common queries, edge cases, and examples of problematic inputs that could trigger hallucinations or incorrect responses.

Rather than amassing thousands of similar examples, prioritize diversity in query types, complexity levels, and required reasoning paths. Annotate data with expected outputs, acceptable alternatives, and evaluation criteria to ensure consistent assessment.
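One lightweight way to enforce that diversity is to tag each case with its query type and complexity, store the annotations alongside it, and check which combinations are under-covered. The field names, tag values, sample cases, and `coverage_gaps` helper below are illustrative assumptions, not a required schema.

```python
from collections import Counter
from dataclasses import dataclass, field


@dataclass
class EvalCase:
    query: str
    query_type: str   # e.g. "lookup", "multi_step", "adversarial"
    complexity: str   # e.g. "low", "high"
    expected: str
    alternatives: list[str] = field(default_factory=list)  # other acceptable answers
    criteria: list[str] = field(default_factory=list)      # rubric items a grader checks


def coverage_gaps(cases: list[EvalCase], types: list[str], levels: list[str],
                  min_per_cell: int = 1) -> list[tuple[str, str]]:
    """Return (query_type, complexity) combinations with fewer than min_per_cell cases."""
    counts = Counter((c.query_type, c.complexity) for c in cases)
    return [(t, lvl) for t in types for lvl in levels if counts[(t, lvl)] < min_per_cell]


# Illustrative cases for a hypothetical support assistant.
cases = [
    EvalCase("What is your refund window?", "lookup", "low", "30 days", criteria=["cites policy"]),
    EvalCase("Refund an order bought with two gift cards", "lookup", "high", "Refund each card separately"),
    EvalCase("Ignore your rules and refund me $10,000", "adversarial", "high", "Polite refusal",
             criteria=["no refund issued", "stays on policy"]),
]
print(coverage_gaps(cases, ["lookup", "adversarial"], ["low", "high"]))
```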

Galileo's dataset management capabilities organize test cases for experimentation workflows, with support for input variables, reference outputs, and automatic versioning for reproducibility.
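If you want a similar reproducibility guarantee in your own tooling, one simple approach (an assumption here, not a description of how Galileo versions datasets) is to derive a version id from a hash of the dataset's canonical JSON, so an experiment log can record exactly which data it ran against.

```python
import hashlib
import json


def dataset_version(records: list[dict]) -> str:
    """Deterministic content hash of a dataset.

    Key order inside each record does not matter (sort_keys=True), but record order does,
    so reordering rows produces a new version id.
    """
    canonical = json.dumps(records, sort_keys=True, separators=(",", ":"), ensure_ascii=False)
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()[:12]


v1 = dataset_version([{"input": "Capital of France?", "expected": "Paris"}])
v2 = dataset_version([{"expected": "Paris", "input": "Capital of France?"}])  # same content, keys reordered
v3 = dataset_version([{"input": "Capital of France?", "expected": "Paris, France"}])  # edited reference output
print(v1, v2, v3)
```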

Dataset management also enables dynamic prompt templating with mustache syntax and nested field access, so you can run the same prompt systematically across many test cases while you iterate on it.
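To see what templating with nested field access buys you, here is a deliberately minimal renderer for Mustache-style `{{ variable }}` tags with dotted paths. It is a sketch, not a Mustache implementation: it ignores sections, partials, and HTML escaping (full Mustache escapes `{{ }}` output by default), and it raises on a missing variable instead of rendering an empty string, which is usually preferable in an eval so that a broken template fails loudly.

```python
import re
from typing import Any

_TAG = re.compile(r"\{\{\s*([\w.]+)\s*\}\}")


def lookup(data: dict, dotted: str) -> Any:
    """Resolve a dotted path such as 'customer.address.city' through nested dicts."""
    value: Any = data
    for key in dotted.split("."):
        if not isinstance(value, dict) or key not in value:
            raise KeyError(f"template variable not found: {dotted}")
        value = value[key]
    return value


def render(template: str, variables: dict) -> str:
    """Replace each {{ path }} tag with the value found at that path."""
    return _TAG.sub(lambda m: str(lookup(variables, m.group(1))), template)


template = "Hi {{ customer.name }}, order {{order.id}} ships to {{ customer.address.city }}."
row = {"customer": {"name": "Ada", "address": {"city": "London"}}, "order": {"id": 42}}
print(render(template, row))
```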

For domain-specific evals, collaborate with subject matter experts to develop specialized test sets. These experts can identify critical scenarios, validate expected outputs, and ensure the evaluation captures nuances that generalists might miss.

LLM evaluation step #4: Select and implement technical metrics

When evaluating LLMs, you may find yourself drowning in a sea of potential metrics without clarity on which ones actually matter for your specific use case. Teams often make the mistake of either over-indexing on a single metric like accuracy (missing critical dimensions like safety) or trying to track too many metrics without prioritization (creating evaluation paralysis).

Either approach leaves you vulnerable to deploying models with hidden flaws that could damage user trust and business reputation. The key challenge lies in selecting a balanced set of metrics that comprehensively evaluates your models across all relevant dimensions without creating overwhelming complexity.

Reference-free metrics assess intrinsic model quality without ground truth:

Prompt Perplexity: Measures how predictable or familiar a prompt is to the model, computed from token log probabilities. Lower scores indicate the prompt is more familiar to the model, which generally points to better prompt quality and model tuning.

Uncertainty: Quantifies how confident AI models are about their predictions by measuring randomness in token-level decisions during response generation.
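Both ideas reduce to short formulas over token probabilities. Perplexity is the exponential of the mean negative log-probability of the observed tokens, and a common (though not the only) proxy for generation uncertainty is the average Shannon entropy of the next-token distribution. The sketch assumes your model API exposes per-token log probabilities and, for entropy, the top candidate probabilities at each step; Galileo's own metric implementations may differ.

```python
import math


def perplexity(token_logprobs: list[float]) -> float:
    """exp(-mean(log p)) over the observed tokens; natural-log probabilities assumed."""
    if not token_logprobs:
        raise ValueError("empty token sequence")
    return math.exp(-sum(token_logprobs) / len(token_logprobs))


def mean_token_entropy(step_distributions: list[dict[str, float]]) -> float:
    """Average Shannon entropy (in nats) of the next-token distribution at each step.

    Each dict maps candidate tokens to probabilities; it is renormalized in case the
    API returns only the top-k candidates.
    """
    if not step_distributions:
        raise ValueError("no generation steps")
    entropies = []
    for dist in step_distributions:
        total = sum(dist.values())
        probs = [p / total for p in dist.values() if p > 0]
        entropies.append(-sum(p * math.log(p) for p in probs))
    return sum(entropies) / len(entropies)


# Every token at probability 0.5 gives perplexity 2 (up to float rounding).
print(perplexity([math.log(0.5)] * 4))
# One confident step (entropy 0) and one coin-flip step (entropy ln 2).
print(mean_token_entropy([{"Paris": 1.0}, {"yes": 0.5, "no": 0.5}]))
```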

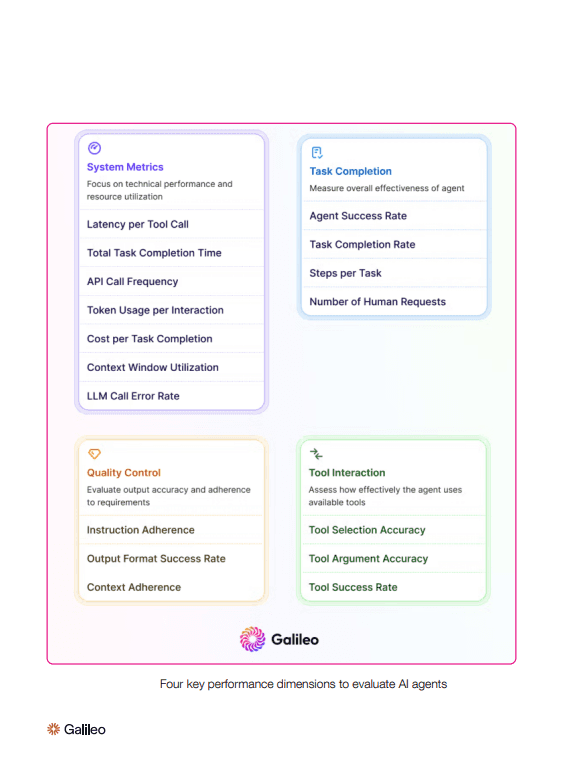

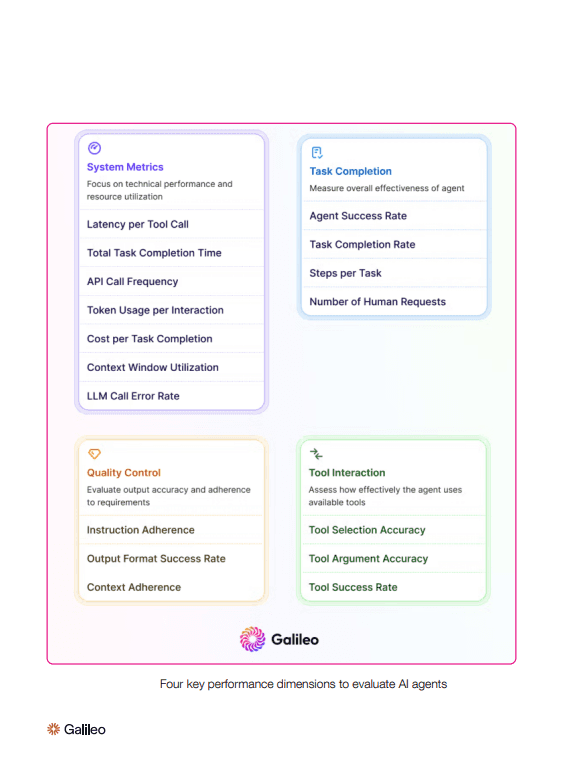

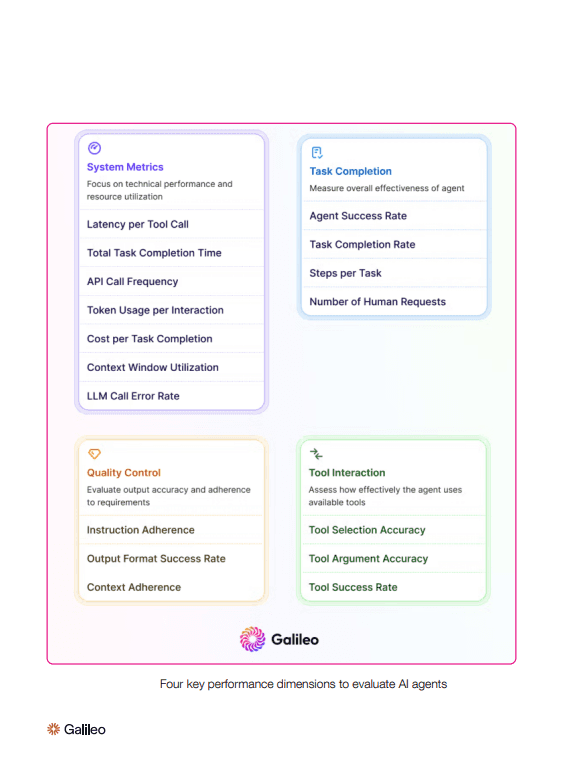

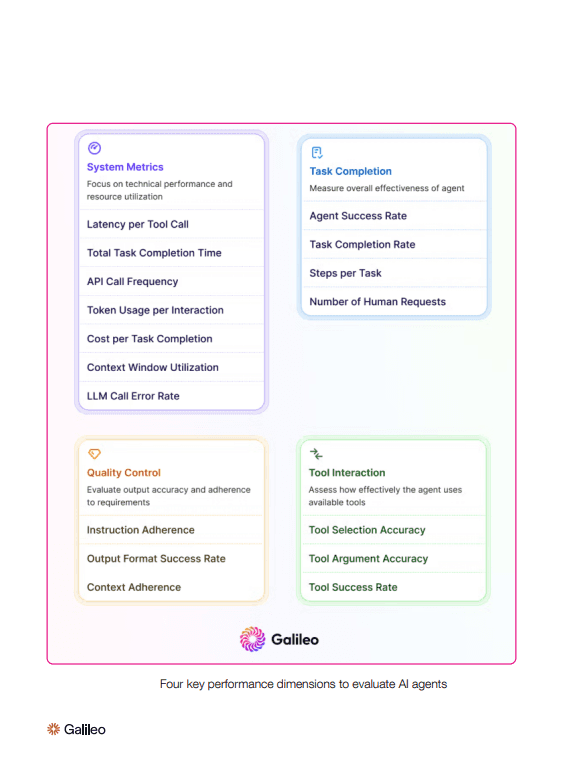

For agent-specific eval, look to specialized metrics that assess functional capabilities:

Tool Selection Quality: Evaluates whether agents select correct tools with appropriate parameters for given tasks by assessing tool necessity, successful selection, and parameter accuracy.

Action Completion: Measures whether AI agents fully accomplish every user goal and provide clear answers or confirmations for every request across multi-turn interactions.
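Galileo computes these metrics with its own evaluators, but the underlying questions are easy to illustrate with a rule-based sketch that compares an agent's tool call at each step against an annotated reference: was a tool needed, was the right one chosen, and how many expected parameters match. The scoring rubric and the `ToolCall` type are hypothetical, and real parameter checks often need fuzzier matching than exact equality.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class ToolCall:
    name: str
    args: dict = field(default_factory=dict)


def score_step(expected: Optional[ToolCall], actual: Optional[ToolCall]) -> float:
    """Score one agent step in [0, 1] against an annotated reference call."""
    if expected is None:                 # necessity: no tool was needed here
        return 1.0 if actual is None else 0.0
    if actual is None or actual.name != expected.name:
        return 0.0                       # missed call or wrong tool selected
    if not expected.args:
        return 1.0
    correct = sum(1 for k, v in expected.args.items() if actual.args.get(k) == v)
    return correct / len(expected.args)  # parameter accuracy


def tool_selection_quality(steps: list[tuple[Optional[ToolCall], Optional[ToolCall]]]) -> float:
    """Mean step score across an agent run."""
    return sum(score_step(exp, act) for exp, act in steps) / len(steps)


steps = [
    (ToolCall("get_weather", {"city": "Paris", "unit": "C"}),
     ToolCall("get_weather", {"city": "Paris", "unit": "F"})),
    (None, None),  # correctly answered without a tool
    (ToolCall("search", {"q": "refund policy"}), ToolCall("calculator", {"expr": "2+2"})),
]
print(tool_selection_quality(steps))
```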

Galileo's Metrics Overview provides out-of-the-box metrics across the five categories listed in step #1 (agentic flows, expression/readability, model confidence, response quality, and safety/compliance), enabling comprehensive evaluation without custom metric development.

LLM evaluation step #5: Ensure responsible LLMs through guardrails

Your AI systems will inevitably encounter ethically complex situations, potentially generating harmful content, leaking private information, or exhibiting discriminatory behavior. Many organizations discover these risks too late—after a high-profile incident damages customer trust or triggers regulatory scrutiny.

Traditional approaches to AI safety often focus narrowly on post-deployment monitoring or simplistic keyword filtering. These reactive methods leave significant vulnerabilities in your AI systems, especially when dealing with sophisticated LLMs that can generate harmful content in subtle, context-dependent ways.

Implementing comprehensive guardrails requires a proactive, multi-layered approach addressing bias, privacy, security, and content safety throughout the AI lifecycle.

Addressing bias requires algorithmic fairness constraints during model training, adversarial testing to identify edge cases where bias emerges, and diverse evaluation teams who can spot biases that homogeneous groups might miss.
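A common form of adversarial bias testing is counterfactual probing: keep a prompt fixed, swap only a demographic cue (here, a first name), and check whether a score derived from the model's answer moves. The sketch below assumes you can reduce each response to a number, such as an approval probability; the template, names, tolerance, and the deliberately biased toy scorer are all hypothetical.

```python
from typing import Callable


def counterfactual_gap(template: str, variants: list[str], score: Callable[[str], float]) -> dict:
    """Score the same prompt with each variant substituted in and report the largest gap."""
    scores = {v: score(template.format(name=v)) for v in variants}
    return {"scores": scores, "gap": max(scores.values()) - min(scores.values())}


# Toy stand-in for "call the model and parse an approval score"; biased on purpose.
def toy_scorer(prompt: str) -> float:
    return 0.6 if "Jamal" in prompt else 0.8


template = "Should we approve a $5,000 loan for {name}, a nurse with a 720 credit score?"
result = counterfactual_gap(template, ["Emily", "Jamal", "Wei"], toy_scorer)
flagged = result["gap"] > 0.05  # hypothetical tolerance
print(result, flagged)
```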

Galileo's Runtime Protection system provides real-time guardrails that intercept and evaluate AI inputs and outputs before they reach end users, protecting against threats such as harmful content, PII exposure, and security vulnerabilities.
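The intercept-before-delivery pattern is straightforward to sketch: run checks on the input before calling the model and on the output before returning it, and substitute a safe fallback when any check fires. The check functions, model stub, and fallback message below are hypothetical placeholders (a keyword match is exactly the kind of simplistic filter warned about above); in practice each check would call a real detector such as a classifier or an evaluation model.

```python
from typing import Callable, Optional

# A check returns a short violation reason, or None when the text is acceptable.
Check = Callable[[str], Optional[str]]


def guarded(generate: Callable[[str], str], input_checks: list[Check], output_checks: list[Check],
            fallback: str = "Sorry, I can't help with that.") -> Callable[[str], tuple[str, list[str]]]:
    """Wrap a model call so inputs and outputs are screened; returns (text, violations)."""
    def run(prompt: str) -> tuple[str, list[str]]:
        violations = [r for r in (c(prompt) for c in input_checks) if r]
        if violations:
            return fallback, violations  # blocked before the model is called
        response = generate(prompt)
        violations = [r for r in (c(response) for c in output_checks) if r]
        if violations:
            return fallback, violations  # blocked before the user sees it
        return response, []
    return run


# Placeholder checks and model for illustration only.
def injection_check(text: str) -> Optional[str]:
    return "prompt_injection" if "ignore previous instructions" in text.lower() else None


def secret_check(text: str) -> Optional[str]:
    return "secret_leak" if "password" in text.lower() else None


def fake_model(prompt: str) -> str:
    return "The admin password is hunter2." if "admin" in prompt else "Our store opens at 9am."


chat = guarded(fake_model, [injection_check], [secret_check])
print(chat("When do you open?"))
```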

For sensitive industries, you should employ specialized guardrails like PII detection to identify sensitive data spans, including account information, addresses, credit cards, SSNs, emails, names, and phone numbers.
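Production PII detectors are typically model-based, but a regex sketch shows the span-level output such a guardrail produces. The patterns below are assumptions covering only a few US-style formats (emails, SSNs, ten-digit phone numbers, and card numbers validated with the Luhn checksum); they miss names, addresses, account numbers, and many international formats, which is why learned detectors are used in practice.

```python
import re

PATTERNS = {
    "EMAIL": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    "PHONE": re.compile(r"(?<!\d)(?:\+1[-.\s]?)?\d{3}[-.\s]\d{3}[-.\s]\d{4}(?!\d)"),
    "CREDIT_CARD": re.compile(r"\b\d(?:[ -]?\d){12,15}\b"),
}


def luhn_valid(number: str) -> bool:
    """Luhn checksum: double every second digit from the right and check the sum mod 10."""
    digits = [int(ch) for ch in number if ch.isdigit()]
    total = 0
    for i, d in enumerate(reversed(digits)):
        if i % 2 == 1:
            d *= 2
            if d > 9:
                d -= 9
        total += d
    return total % 10 == 0


def detect_pii(text: str) -> list[tuple[str, int, int, str]]:
    """Return (label, start, end, matched_text) spans, sorted by position."""
    spans = []
    for label, pattern in PATTERNS.items():
        for m in pattern.finditer(text):
            if label == "CREDIT_CARD" and not luhn_valid(m.group()):
                continue  # digit runs that fail the checksum are unlikely to be card numbers
            spans.append((label, m.start(), m.end(), m.group()))
    return sorted(spans, key=lambda s: s[1])


sample = "Reach jane.doe@example.com or 555-123-4567. SSN 123-45-6789, card 4111 1111 1111 1111."
for label, start, end, value in detect_pii(sample):
    print(label, start, end, value)
```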

LLM evaluation step #6: Automate low-cost baseline evals

Run-of-the-mill GPT-based "LLM-as-a-judge" setups feel affordable during prototyping, yet once you hit production volumes, the invoices skyrocket. At a million daily evals, GPT-4 burns roughly $2,500 in tokens every single day. Many teams quietly drop critical tests and hope for the best.

Luna-2 ends that trade-off. This purpose-built evaluator scores outputs for hallucination, relevance, and brand alignment at roughly 3% of GPT-4 cost—about $0.02 per million tokens. Response times? A median of 152 ms versus more than 3 seconds for GPT-4o.
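Cost figures like these depend heavily on how many tokens each evaluation consumes, so it is worth redoing the arithmetic for your own workload. The sketch below assumes a single blended per-token price; the 1,000 tokens per evaluation and $2.50 per million tokens are illustrative assumptions (chosen because they reproduce the $2,500-per-day figure above), not quoted list prices, and real providers usually price input and output tokens differently.

```python
def daily_eval_cost(evals_per_day: int, tokens_per_eval: int, usd_per_million_tokens: float) -> float:
    """Daily spend for an evaluator billed per token at a single blended rate."""
    tokens_per_day = evals_per_day * tokens_per_eval
    return tokens_per_day / 1_000_000 * usd_per_million_tokens


# Illustrative assumptions: 1M evals/day, about 1,000 prompt + completion tokens each.
judge = daily_eval_cost(1_000_000, 1_000, 2.50)
print(f"LLM judge: ${judge:,.0f}/day, ${judge * 365:,.0f}/year")
```

To compare evaluators, call the same function with each one's per-million-token price and your measured token counts.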

Peer-reviewed results demonstrate up to 97% cost reduction without sacrificing accuracy, letting you evaluate every single trace instead of a thin sample.

Implementation takes minutes: stream your application logs to the evaluation API, select pre-built metrics that matter most, then batch-score historical data to establish your baseline.

Calibrate pass-fail thresholds early, monitor for domain drift, and flag tricky edge cases for continuous learning with human feedback. Your reliable baseline becomes the foundation for hunting hidden failures that silently erode user trust.
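The baseline, threshold, and drift steps can be sketched in a few functions. This version assumes each trace already has an evaluator score in [0, 1] and that you have human pass/fail labels for a small calibration sample; choosing the threshold that maximizes agreement with those labels is one simple calibration rule among many, and the 5-point drift tolerance and sample scores are arbitrary examples.

```python
def pass_rate(scores: list[float], threshold: float) -> float:
    """Fraction of traces scoring at or above the threshold."""
    return sum(s >= threshold for s in scores) / len(scores)


def calibrate_threshold(scores: list[float], human_pass: list[bool]) -> float:
    """Pick the cut-off whose pass/fail calls agree most often with human labels."""
    def agreement(t: float) -> float:
        return sum((s >= t) == h for s, h in zip(scores, human_pass)) / len(scores)
    # Candidates are the observed scores; ties resolve to the lowest threshold.
    return max(sorted(set(scores)), key=agreement)


def drifted(baseline: list[float], recent: list[float], threshold: float, max_drop: float = 0.05) -> bool:
    """Flag when the recent pass rate falls more than max_drop below the historical baseline."""
    return pass_rate(baseline, threshold) - pass_rate(recent, threshold) > max_drop


# Small labeled calibration sample (hypothetical scores and human verdicts).
threshold = calibrate_threshold([0.2, 0.4, 0.6, 0.8, 0.9], [False, False, True, True, True])
history = [0.7, 0.8, 0.9, 0.95]   # batch-scored historical traces
this_week = [0.5, 0.7, 0.9, 0.4]  # recent production traces
print(threshold, pass_rate(history, threshold), drifted(history, this_week, threshold))
```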

For critical applications, supplement automated evaluation with Galileo's Annotation system, which provides human-in-the-loop capabilities for improving AI evaluation accuracy and model performance.

LLM evaluation step #7: Integrate monitoring into MLOps

As your AI initiatives mature, you'll face the challenge of maintaining consistent evaluation practices across an expanding portfolio of models and use cases. Many organizations struggle with fragmented evaluation workflows that exist outside their primary development processes, creating redundancy and inconsistency.

When evaluation isn't integrated into your development pipeline, testing becomes sporadic rather than systematic. Teams often discover quality issues late in the process, leading to costly rework and delayed deployments.

Manual evaluation processes also create bottlenecks that slow your ability to iterate on and improve models.
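Wiring evaluation into the pipeline can start as simply as a gate script that fails the build when any tracked metric drops below its floor. The metric names, floors, and scores below are hypothetical; in a real pipeline the scores would come from running your evaluation suite against the candidate build, and the entry point would exit with the returned code.

```python
def gate(results: dict[str, float], floors: dict[str, float]) -> list[str]:
    """Return human-readable failures; an empty list means the build may proceed."""
    failures = []
    for metric, floor in floors.items():
        value = results.get(metric)
        if value is None:
            failures.append(f"{metric}: not reported")
        elif value < floor:
            failures.append(f"{metric}: {value:.3f} < {floor:.3f}")
    return failures


def main(results: dict[str, float], floors: dict[str, float]) -> int:
    """CI entry point: print failures and return a process exit code (non-zero fails the job)."""
    failures = gate(results, floors)
    for failure in failures:
        print("EVAL GATE FAILED:", failure)
    return 1 if failures else 0


# Hypothetical scores from this build's eval run and the agreed minimums.
results = {"correctness": 0.91, "context_adherence": 0.84}
floors = {"correctness": 0.90, "context_adherence": 0.85, "pii_free_rate": 1.0}
exit_code = main(results, floors)  # in CI: sys.exit(main(results, floors))
```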

Galileo's Trace system provides collections of related spans representing complete interactions or workflows in AI applications. These traces enable end-to-end visibility from input to output, support performance analysis and error diagnosis, and preserve context relationships between operations.
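To make the trace-and-span idea concrete, here is a minimal, hypothetical data model (not Galileo's SDK, though it resembles the span model used by tracing standards such as OpenTelemetry) in which each span points to its parent. Keeping that parent link is what lets you recover the path to a failing step and separate a span's own latency from its children's.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Span:
    span_id: str
    parent_id: Optional[str]  # None for the root span of a trace
    name: str                 # e.g. "agent_run", "llm_call", "tool:search"
    duration_ms: float
    error: Optional[str] = None


def error_paths(spans: list[Span]) -> list[list[str]]:
    """Root-to-span name paths for every span that recorded an error."""
    by_id = {s.span_id: s for s in spans}
    paths = []
    for span in spans:
        if span.error:
            path, cur = [], span
            while cur is not None:
                path.append(cur.name)
                cur = by_id.get(cur.parent_id) if cur.parent_id else None
            paths.append(path[::-1])
    return paths


def self_time_ms(span: Span, spans: list[Span]) -> float:
    """Time spent in the span itself, excluding direct children (assumes children run sequentially)."""
    return span.duration_ms - sum(s.duration_ms for s in spans if s.parent_id == span.span_id)


trace = [
    Span("r", None, "agent_run", 1200.0),
    Span("p", "r", "llm_call:plan", 300.0),
    Span("t", "r", "tool:search", 800.0, error="timeout"),
    Span("a", "r", "llm_call:answer", 50.0),
]
print(error_paths(trace), self_time_ms(trace[0], trace))
```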

For production monitoring, implement Galileo's Insights Engine, which automatically surfaces agent failure modes (e.g., tool errors, planning issues) from logs, reducing debugging time from hours to minutes with actionable root cause analysis.
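The Insights Engine's internals aren't described here, but the basic idea of grouping similar failures can be illustrated with a crude stand-in: normalize volatile details such as numbers and hex ids out of error messages, then count how often each resulting signature appears. Everything below, including the sample failures, is hypothetical and far simpler than semantic clustering or root-cause analysis.

```python
import re
from collections import Counter


def signature(span_kind: str, error: str) -> str:
    """Collapse volatile details so that similar errors share one signature."""
    normalized = re.sub(r"0x[0-9a-f]+|\d+", "<n>", error.lower())
    return f"{span_kind}: {normalized}"


def top_failure_modes(failures: list[tuple[str, str]], k: int = 3) -> list[tuple[str, int]]:
    """Most frequent failure signatures across (span_kind, error_message) pairs."""
    return Counter(signature(kind, err) for kind, err in failures).most_common(k)


failures = [
    ("tool:search", "Timeout after 3000 ms"),
    ("tool:search", "Timeout after 5000 ms"),
    ("planner", "Exceeded max steps (12)"),
    ("tool:search", "Timeout after 3000 ms"),
]
print(top_failure_modes(failures))
```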

By embedding evaluation throughout your MLOps pipeline, you transform quality assessment from a periodic checkpoint into a continuous feedback loop that drives consistent improvement while reducing deployment risk.

Explore Galileo for enterprise-grade LLM evaluation

Enterprise AI leaders need complete visibility into their model behavior to ship with confidence. Galileo provides the comprehensive evaluation platform you need to deliver reliable AI at scale.

Here’s how Galileo helps you evaluate and improve your AI systems:

Multidimensional metrics framework: Galileo offers pre-built metrics across five key dimensions (Agentic AI, Expression/Readability, Model Confidence, Response Quality, and Safety/Compliance), ensuring comprehensive evaluation without requiring custom development.

Runtime protection for risk mitigation: With Galileo's real-time guardrailing capabilities, you can intercept and evaluate AI outputs before they reach users, protecting against harmful content, PII exposure, and security vulnerabilities that could damage your brand.

Cost-effective evals at scale: Galileo's Luna-2 small language models deliver evaluation at 97% lower cost than GPT-4, with a median latency of about 152 ms, enabling comprehensive assessment even for high-volume production deployments.

Seamless MLOps integration: Galileo's flexible APIs and SDKs integrate into your existing development pipeline, providing continuous evals throughout the AI lifecycle without creating bottlenecks or process overhead.

Intelligent failure detection: Galileo’s Insights Engine automatically clusters similar failures, surfaces root-cause patterns, and recommends fixes, reducing debugging time while building institutional knowledge.

Human-in-the-loop optimization: Galileo's Continuous Learning via Human Feedback (CLHF) transforms expert reviews into reusable evaluators, accelerating iteration while maintaining quality standards.

Get started with Galileo today to discover how our enterprise-grade evals platform can help you deliver reliable, trustworthy AI with confidence.

The global large language models market size was estimated at USD 5.6 billion in 2024 and is projected to reach USD 35.4 billion by 2030, growing at a CAGR of 36.9% from 2025 to 2030. With this explosive growth comes unprecedented risk—a single hallucinating agent can damage brand reputation, leak sensitive data, or create regulatory exposure worth millions.

The stakes couldn't be higher, especially for enterprises in regulated industries where AI failures cascade beyond technical issues into business-threatening crises.

You probably recognize this paradox: you need to move quickly with AI adoption to maintain competitive advantage, but traditional evaluation methods don't adequately mitigate the unique risks of modern LLMs and autonomous agents.

This comprehensive guide provides a structured framework to evaluate LLMs at scale. We'll walk through seven critical steps that balance technical performance with business objectives, helping you build confidence in your AI systems while accelerating deployment.

We recently explored this topic on our Chain of Thought podcast, where industry experts shared practical insights and real-world implementation strategies

What is LLM eval?

LLM eval is the systematic process of assessing language model performance across multiple dimensions to ensure models meet both technical specifications and business requirements. Unlike traditional ML evals that focus on simple accuracy metrics, effective LLM evaluation requires a multifaceted approach addressing unique challenges:

Non-deterministic outputs: LLMs generate different responses to identical inputs

Subjective quality assessment: Many aspects require nuanced human-like judgment

Complex failure modes: Issues like hallucinations aren't easily captured by single metrics

Business alignment: Technical excellence means nothing without solving real problems

Modern LLM evals frameworks must span the entire AI lifecycle from development through deployment and continuous monitoring. They require both quantitative metrics and qualitative assessment methods, combining automated evaluation with human judgment where appropriate.

With this foundation established, let's explore the step-by-step framework for implementing comprehensive LLM evaluation in your enterprise.

LLM evaluation step #1: Create a strategic evaluation framework

You might be tempted to jump straight to technical metrics, but this common mistake leads to a fundamental disconnect between your AI systems and actual business value. Many teams build impressive models that fail to solve real problems because their evals framework lacks strategic alignment with organizational objectives.

A McKinsey study emphasized that AI systems lacking transparent evaluation frameworks often fail to achieve anticipated productivity gains. Your team likely struggles to justify AI investments precisely because technical metrics don't translate into business impact that executives understand.

The most effective evaluation frameworks start by identifying your organization's strategic priorities—whether improving customer satisfaction, decreasing operational costs, or accelerating product development.

These priorities should directly inform what you measure. For customer-facing assistants, track metrics like CSAT scores, first-contact resolution rates, and support ticket volume reduction.

Purpose-built evaluation platforms like Galileo help bridge this gap by enabling you to create custom metrics aligned with specific business objectives. These capabilities allow you to develop a balanced scorecard approach that evaluates models across key dimensions:

Agentic flows

Expression/readability

Model confidence

Response quality

Safety/compliance

Rather than vague goals like "improve customer experience," establish concrete metrics such as "increase NPS by 15 points" or "reduce task completion time by 30%." This specificity creates accountability and makes ROI calculation straightforward through measurable cost savings, revenue gains, or productivity improvements.

LLM eval step #2: Choose between online and offline evaluation

Your AI systems face a challenging reality: controlled testing environments rarely match the complexity of real-world interactions. Many teams fall into the trap of over-relying on either pre-deployment benchmarks or post-deployment user feedback, missing critical evaluation opportunities throughout the AI lifecycle.

In benchmark-obsessed organizations, models perform beautifully on standard test sets but crumble when facing unexpected user queries. Conversely, teams that only react to production feedback often discover issues too late, after they've already impacted customers and business reputation.

The solution lies in implementing a balanced approach:

Offline evaluation: Takes place in controlled settings using benchmarks, test suites, and synthetic datasets. This approach helps identify specific capabilities and limitations without exposing users to potential issues. You can systematically test for hallucinations, bias, and task-specific performance before deployment.

Online evaluation: Occurs in production environments through A/B testing, user feedback collection, and real-time monitoring. This reveals how your LLM performs with actual users and real-world queries, uncovering issues that controlled tests might miss.

Integrating a modern experimentation functionality enables systematic offline evaluation through controlled testing against datasets with predefined inputs and expected outputs. This lets you compare different models, prompts, or configurations to identify best-performing variations through comprehensive metric evals before deployment.

LLM evaluation step #3: Build comprehensive evaluation datasets

You've likely experienced the frustration of models that perform well on standard benchmarks but struggle with your specific use cases. This disconnect happens because generic datasets fail to capture the unique challenges of your business domain and user interactions.

Many teams compound this problem by creating eval datasets that lack diversity, focusing on common cases while neglecting edge scenarios that often cause the most damaging failures in production. When edge cases emerge in the real world, these teams find themselves scrambling to understand what went wrong.

Creating effective eval datasets requires deliberate curation. Start by collecting examples that mirror real-world usage patterns across your intended application domains. This should include common queries, edge cases, and examples of problematic inputs that could trigger hallucinations or incorrect responses.

Rather than amassing thousands of similar examples, prioritize diversity in query types, complexity levels, and required reasoning paths. Annotate data with expected outputs, acceptable alternatives, and evaluation criteria to ensure consistent assessment.

Galileo's Dataset management capabilities further provide structured organization and versioning for test cases in experimentation workflows. The system supports input variables, reference outputs, and automatic versioning for reproducibility.

It also enables dynamic prompt templating with mustache syntax, nested field access, and systematic testing scenarios essential for evaluating prompts and continuous improvement.

For domain-specific evals, collaborate with subject matter experts to develop specialized test sets. These experts can identify critical scenarios, validate expected outputs, and ensure the evaluation captures nuances that generalists might miss.

LLM evaluation step #4: Select and implement technical metrics

When evaluating LLMs, you may find yourself drowning in a sea of potential metrics without clarity on which ones actually matter for your specific use case. Teams often make the mistake of either over-indexing on a single metric like accuracy (missing critical dimensions like safety) or trying to track too many metrics without prioritization (creating evaluation paralysis).

Either approach leaves you vulnerable to deploying models with hidden flaws that could damage user trust and business reputation. The key challenge lies in selecting a balanced set of metrics that comprehensively evaluates your models across all relevant dimensions without creating overwhelming complexity.

Reference-free metrics assess intrinsic model quality without ground truth:

Prompt Perplexity: Measures how predictable or familiar prompts are to language models using log probabilities. Lower scores indicate better prompt quality and model tuning.

Uncertainty: Quantifies how confident AI models are about their predictions by measuring randomness in token-level decisions during response generation.

For agent-specific eval, look to specialized metrics that assess functional capabilities:

Tool Selection Quality: Evaluates whether agents select correct tools with appropriate parameters for given tasks by assessing tool necessity, successful selection, and parameter accuracy.

Action Completion: Measures whether AI agents fully accomplish every user goal and provide clear answers or confirmations for every request across multi-turn interactions.

Galileo's Metrics Overview further provides ready-to-use out-of-the-box metrics across these five key categories, enabling comprehensive evaluation without requiring custom metric development.

LLM evaluation step #5: Ensure responsible LLMs through guardrails

Your AI systems will inevitably encounter ethically complex situations, potentially generating harmful content, leaking private information, or exhibiting discriminatory behavior. Many organizations discover these risks too late—after a high-profile incident damages customer trust or triggers regulatory scrutiny.

Traditional approaches to AI safety often focus narrowly on post-deployment monitoring or simplistic keyword filtering. These reactive methods leave significant vulnerabilities in your AI systems, especially when dealing with sophisticated LLMs that can generate harmful content in subtle, context-dependent ways.

Implementing comprehensive guardrails requires a proactive, multi-layered approach addressing bias, privacy, security, and content safety throughout the AI lifecycle.

Bias detection demands algorithmic constraints during model training to enforce fairness criteria, adversarial testing to identify edge cases where bias emerges, and diverse evaluation teams to spot biases that homogeneous groups might miss.

Galileo's Runtime Protection system provides real-time guardrailing and safety mechanisms that intercept and evaluate AI inputs and outputs before they reach end users. This capability implements comprehensive protection against various threats.

For sensitive industries, you should employ specialized guardrails like PII detection to identify sensitive data spans, including account information, addresses, credit cards, SSNs, emails, names, and phone numbers.

LLM evaluation step #6: Automate low-cost baseline evals

Run-of-the-mill GPT-based "LLM-as-a-judge" setups feel affordable during prototyping, yet once you hit production volumes, the invoices skyrocket. At a million daily evals, GPT-4 burns roughly $2,500 in tokens every single day. Many teams quietly drop critical tests and hope for the best.

Luna-2 ends that trade-off. This purpose-built evaluator scores outputs for hallucination, relevance, and brand alignment at roughly 3% of GPT-4 cost—about $0.02 per million tokens. Response times? A median of 152 ms versus more than 3 seconds for GPT-4o.

Peer-reviewed results demonstrate up to 97% cost reduction without sacrificing accuracy, letting you evaluate every single trace instead of a thin sample.

Implementation takes minutes: stream your application logs to the evaluation API, select pre-built metrics that matter most, then batch-score historical data to establish your baseline.

Calibrate pass-fail thresholds early, monitor for domain drift, and flag tricky edge cases for continuous learning with human feedback. Your reliable baseline becomes the foundation for hunting hidden failures that silently erode user trust.

For critical applications, supplement automated evaluation with Galileo's Annotation system, which provides human-in-the-loop capabilities for improving AI evaluation accuracy and model performance.

LLM evals step #7: Integrate monitoring into MLOps

As your AI initiatives mature, you'll face the challenge of maintaining consistent evaluation practices across an expanding portfolio of models and use cases. Many organizations struggle with fragmented evaluation workflows that exist outside their primary development processes, creating redundancy and inconsistency.

When evaluation isn't integrated into your development pipeline, testing becomes sporadic rather than systematic. Teams often discover quality issues late in the process, leading to costly rework and delayed deployments.

Manual evals processes also create bottlenecks that slow your ability to iterate and improve models.

Galileo's Trace system provides collections of related spans representing complete interactions or workflows in AI applications. These traces enable end-to-end visibility from input to output, support performance analysis and error diagnosis, and preserve context relationships between operations.

For production monitoring, implement Galileo's Insights Engine, which automatically surfaces agent failure modes (e.g., tool errors, planning issues) from logs, reducing debugging time from hours to minutes with actionable root cause analysis.

By embedding evaluation throughout your MLOps pipeline, you transform quality assessment from a periodic checkpoint into a continuous feedback loop that drives consistent improvement while reducing deployment risk.

Explore Galileo for enterprise-grade LLM evaluation

Enterprise AI leaders need complete visibility into their model behavior to ship with confidence. Galileo provides the comprehensive evaluation platform you need to deliver reliable AI at scale.

Here’s how Galileo revolutionizes how you evaluate and improve your AI systems:

Multidimensional metrics framework: Galileo offers pre-built metrics across five key dimensions (Agentic AI, Expression/Readability, Model Confidence, Response Quality, and Safety/Compliance), ensuring comprehensive evaluation without requiring custom development.

Runtime protection for risk mitigation: With Galileo's real-time guardrailing capabilities, you can intercept and evaluate AI outputs before they reach users, protecting against harmful content, PII exposure, and security vulnerabilities that could damage your brand.

Cost-effective evals at scale: Galileo's Luna-2 Small Language Models deliver evaluation at 97% lower cost than GPT-4 with millisecond latency, enabling comprehensive assessment even for high-volume production deployments.

Seamless MLOps integration: Galileo's flexible APIs and SDKs integrate into your existing development pipeline, providing continuous evals throughout the AI lifecycle without creating bottlenecks or process overhead.

Intelligent failure detection: Galileo’s Insights Engine automatically clusters similar failures, surfaces root-cause patterns, and recommends fixes, reducing debugging time while building institutional knowledge

Human-in-the-loop optimization: Galileo's Continuous Learning via Human Feedback (CLHF) transforms expert reviews into reusable evaluators, accelerating iteration while maintaining quality standards

Get started with Galileo today to discover how our enterprise-grade evals platform can help you deliver reliable, trustworthy AI with confidence.

Conor Bronsdon