Introduction

What is Eval Engineering?

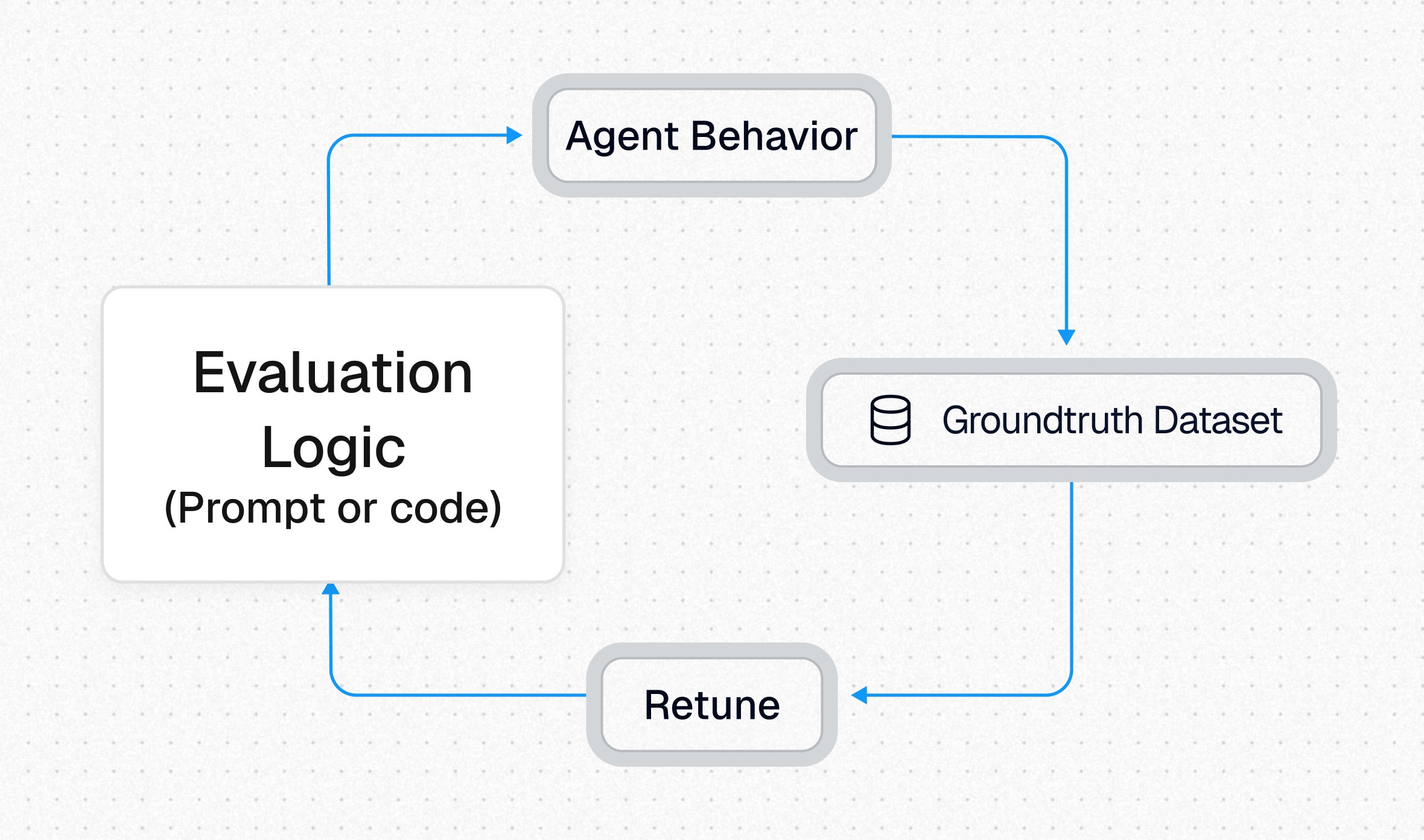

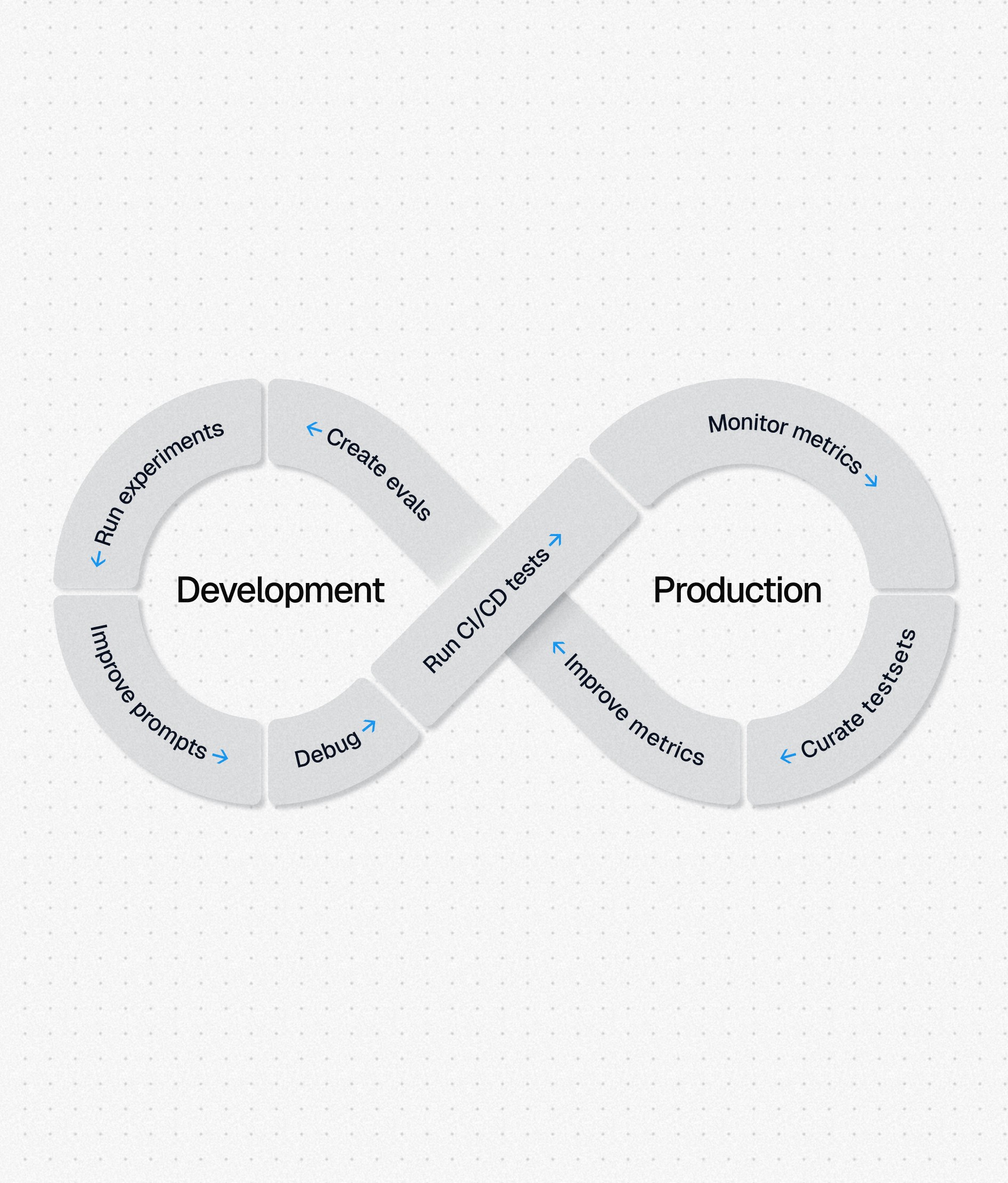

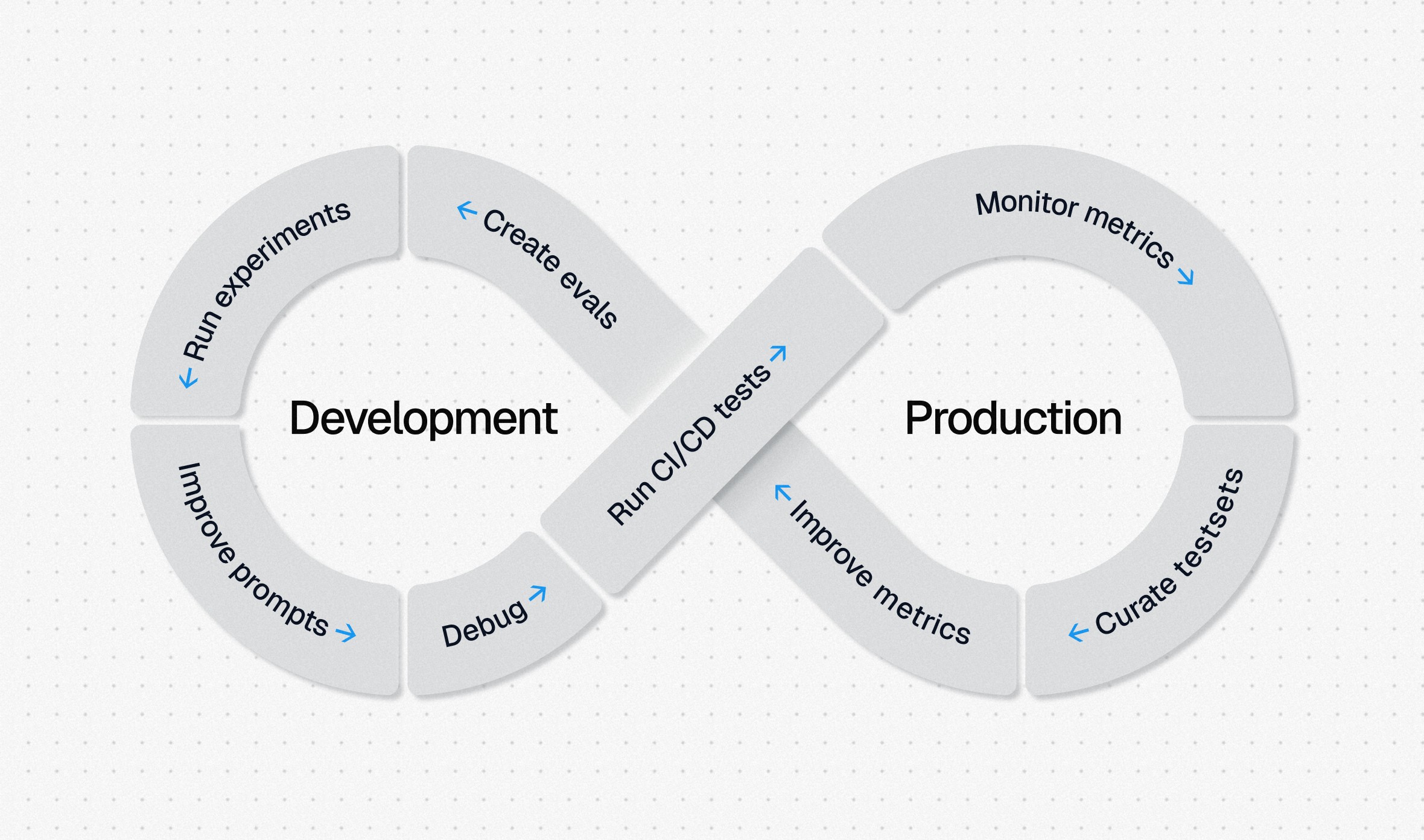

The Workflow

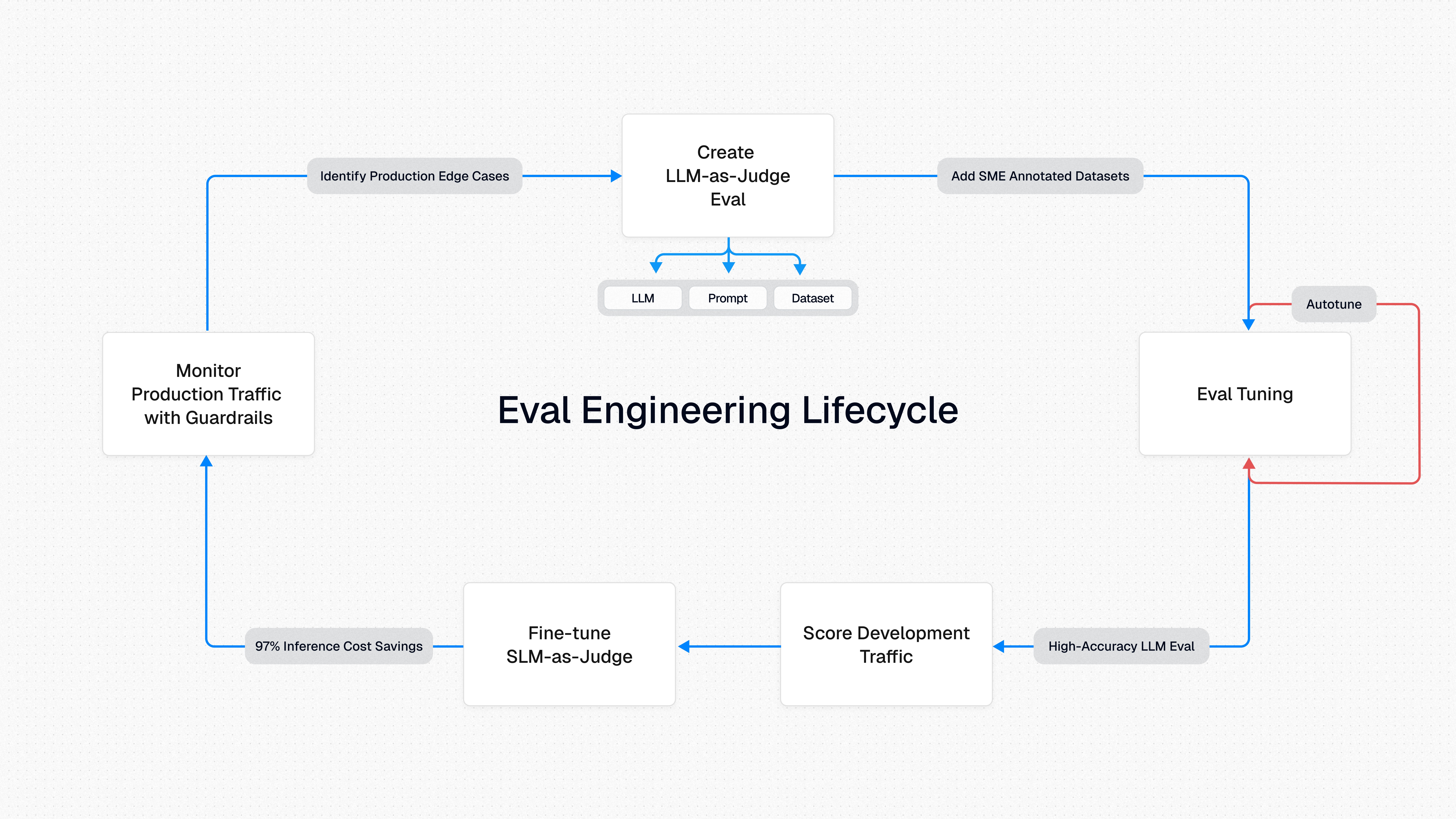

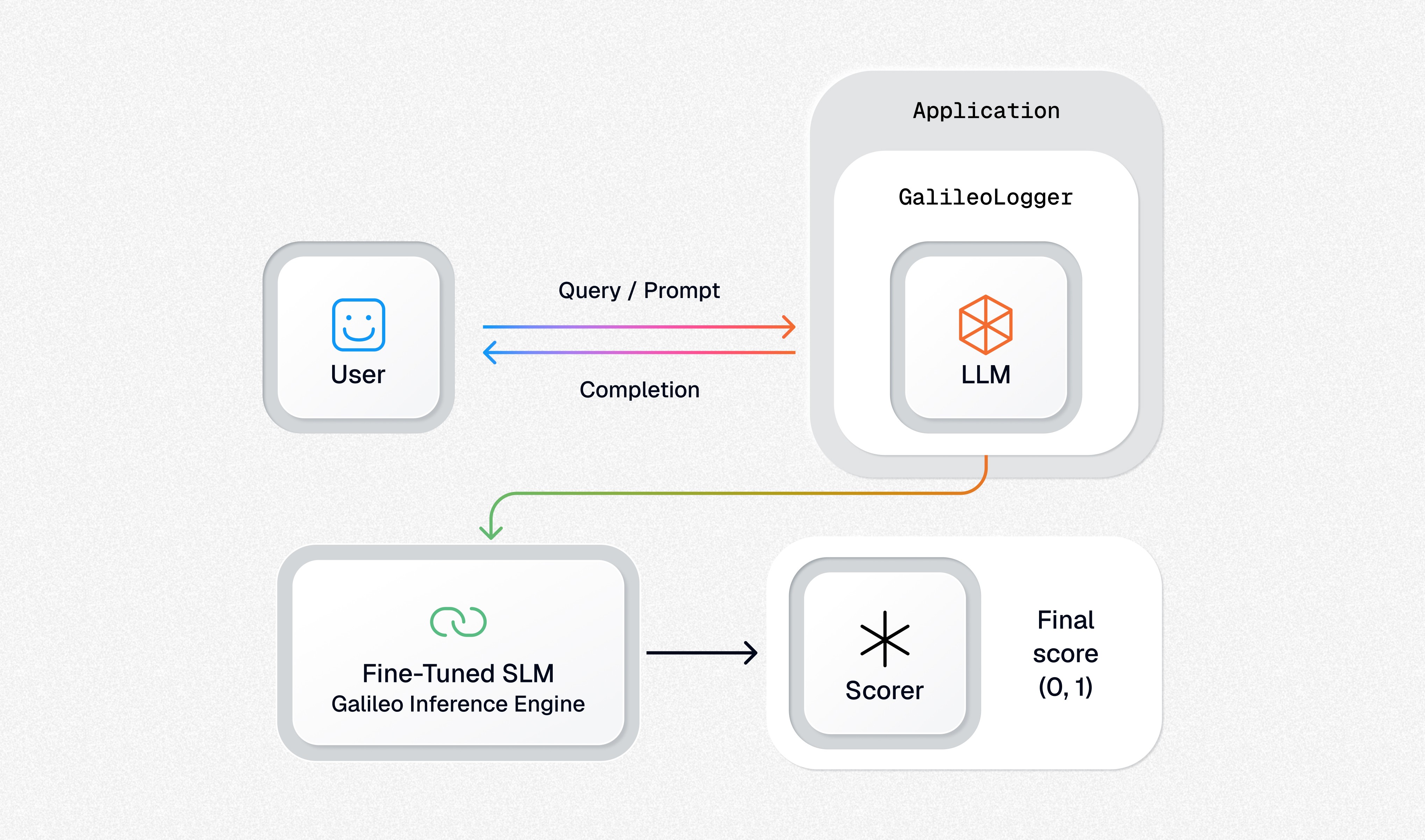

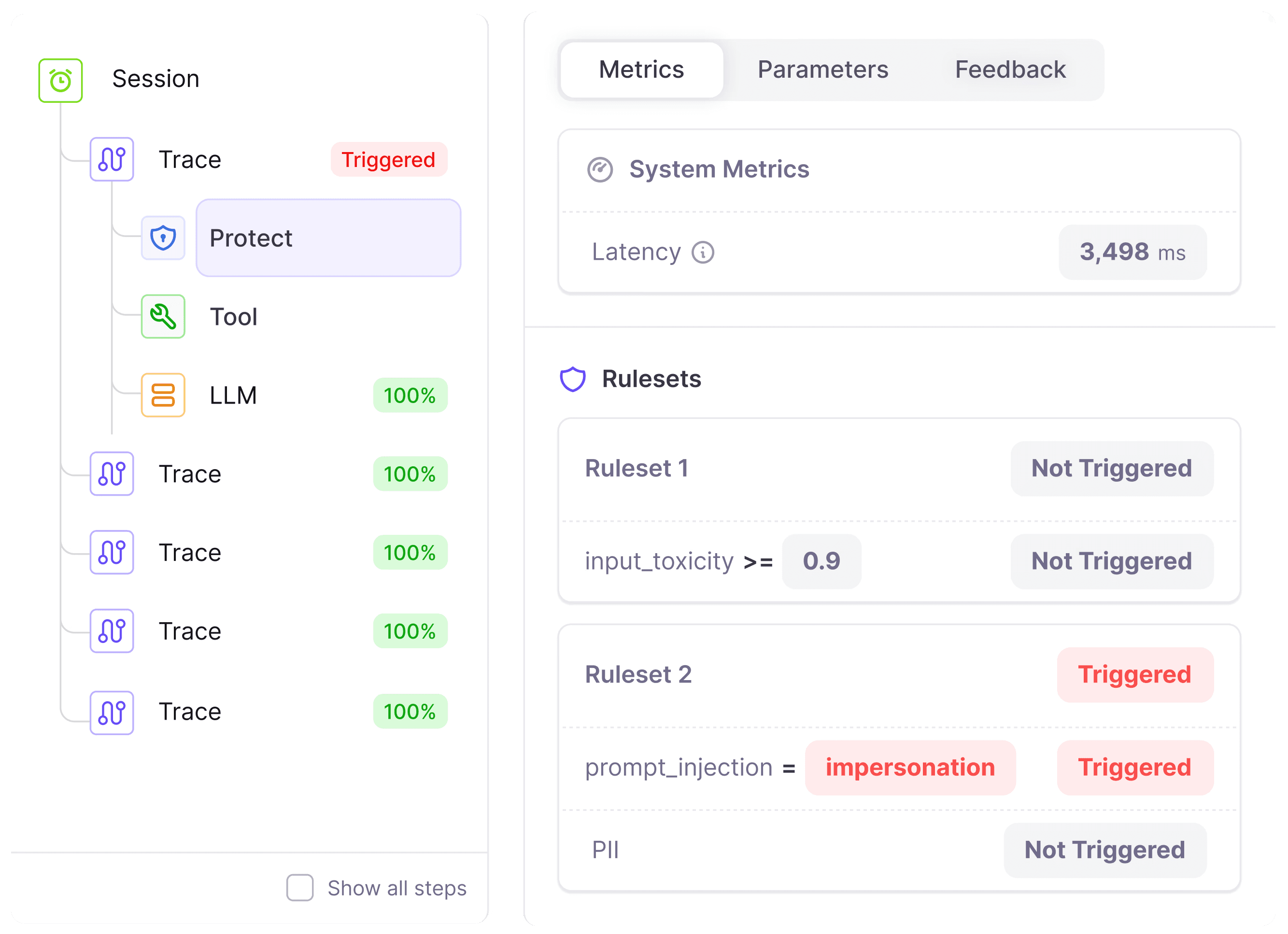

Eval engineering follows the entire lifecycle of an eval, from creating the initial LLM-as-a-judge prompt to deploying it as a production guardrail. Each phase has its unique challenges. The Galileo platform was designed to accelerate each phase of this lifecycle.

Step 1

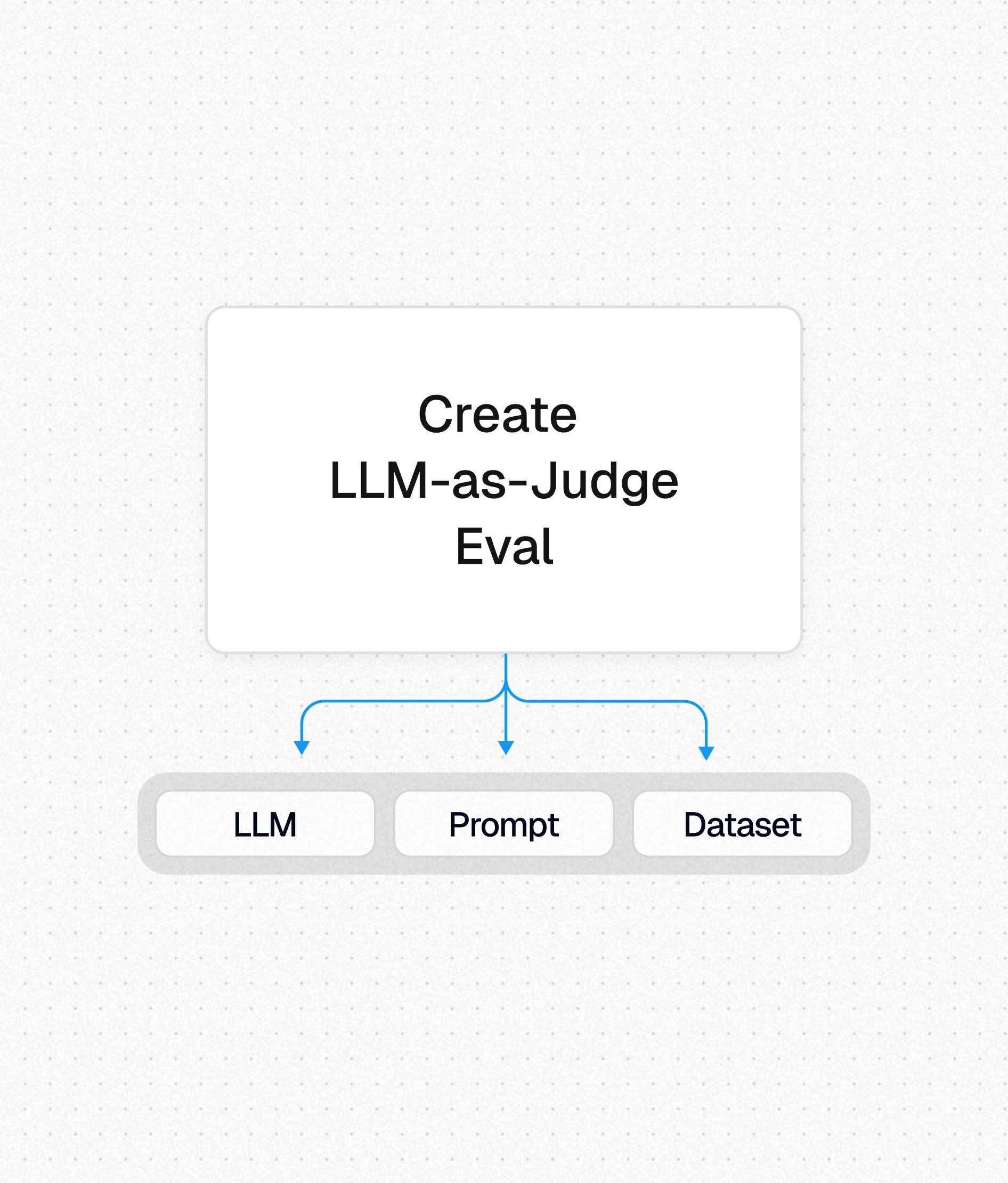

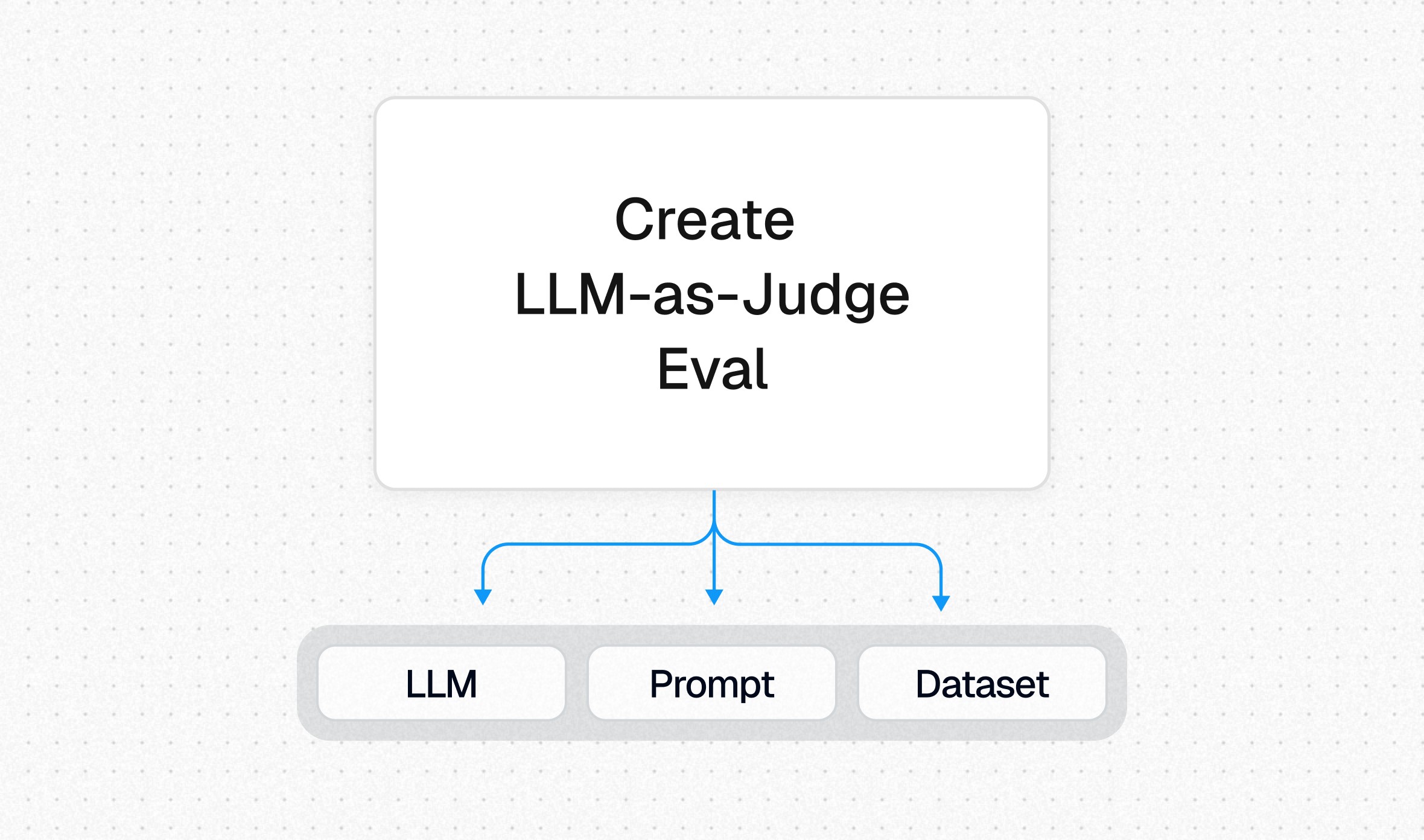

Creating the LLM-as-a-Judge

→

The LLM (e.g. GPT-4o)

→

The prompt used to perform the evaluation

→

A reference dataset to test accuracy against

Step 2

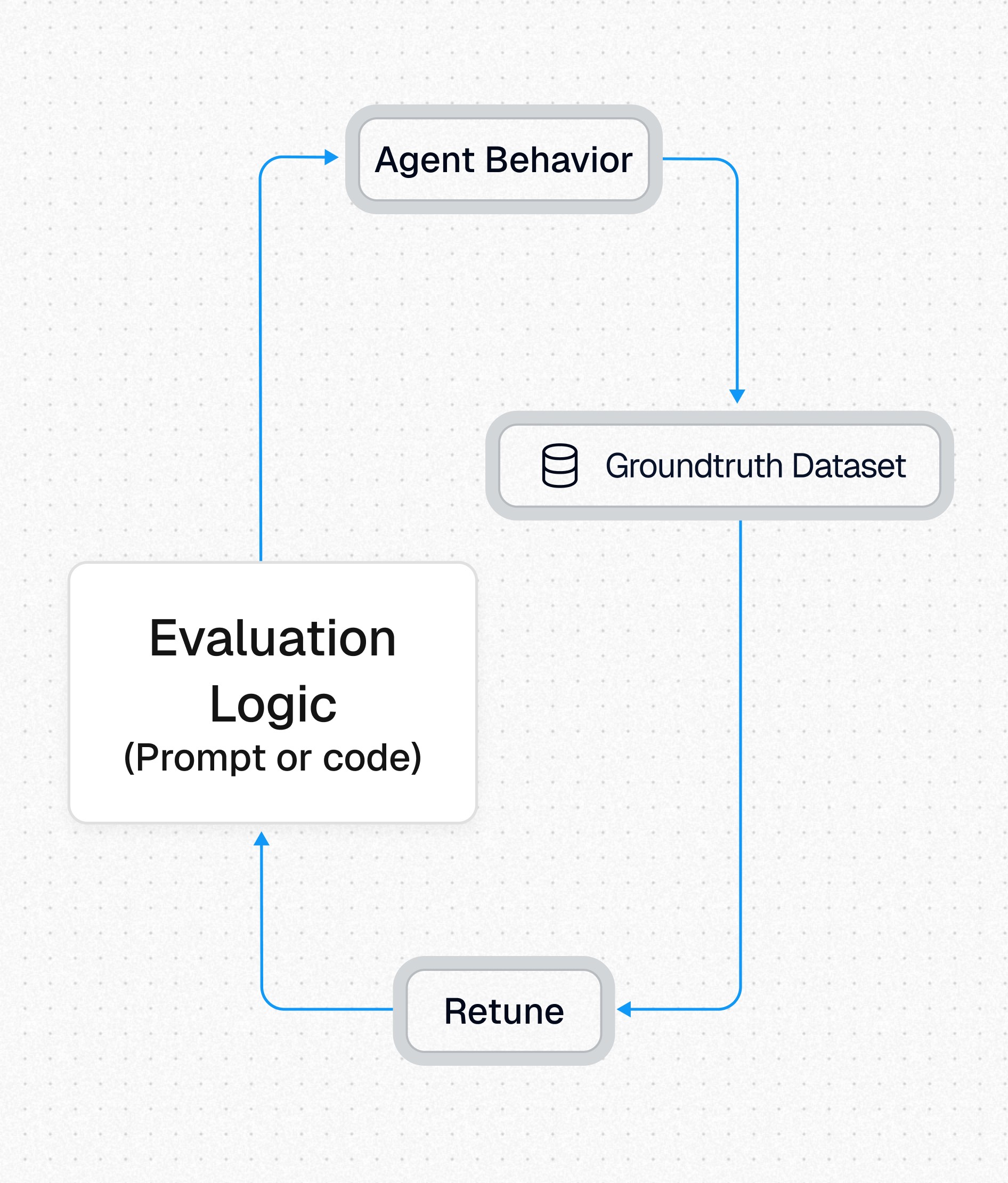

Tuning the Eval

→

SME Annotations

Experts review real LLM outputs and score according to eval criteria, to establish a ground truth reference for tuning

→

Prompt Optimization

Prompt engineers make tweaks and test the prompt F1 scores to measure improvements against the SME reference dataset

→

Few-Shot Examples

Difficult edge cases are added to the prompt as few-shot examples to add more explicit instructions to the model

Step 3

Developing with Evals

→

Measure App Performance

Benchmark the performance of your app and measure specific improvements with A/B tests.

→

Run CI/CD Tests

Execute evals regularly to catch regressions during normal development and maintenance.

→

Measure Production Performance

LLM-as-a-judge evals can be used to score production traffic for daily rollups and casual monitoring.

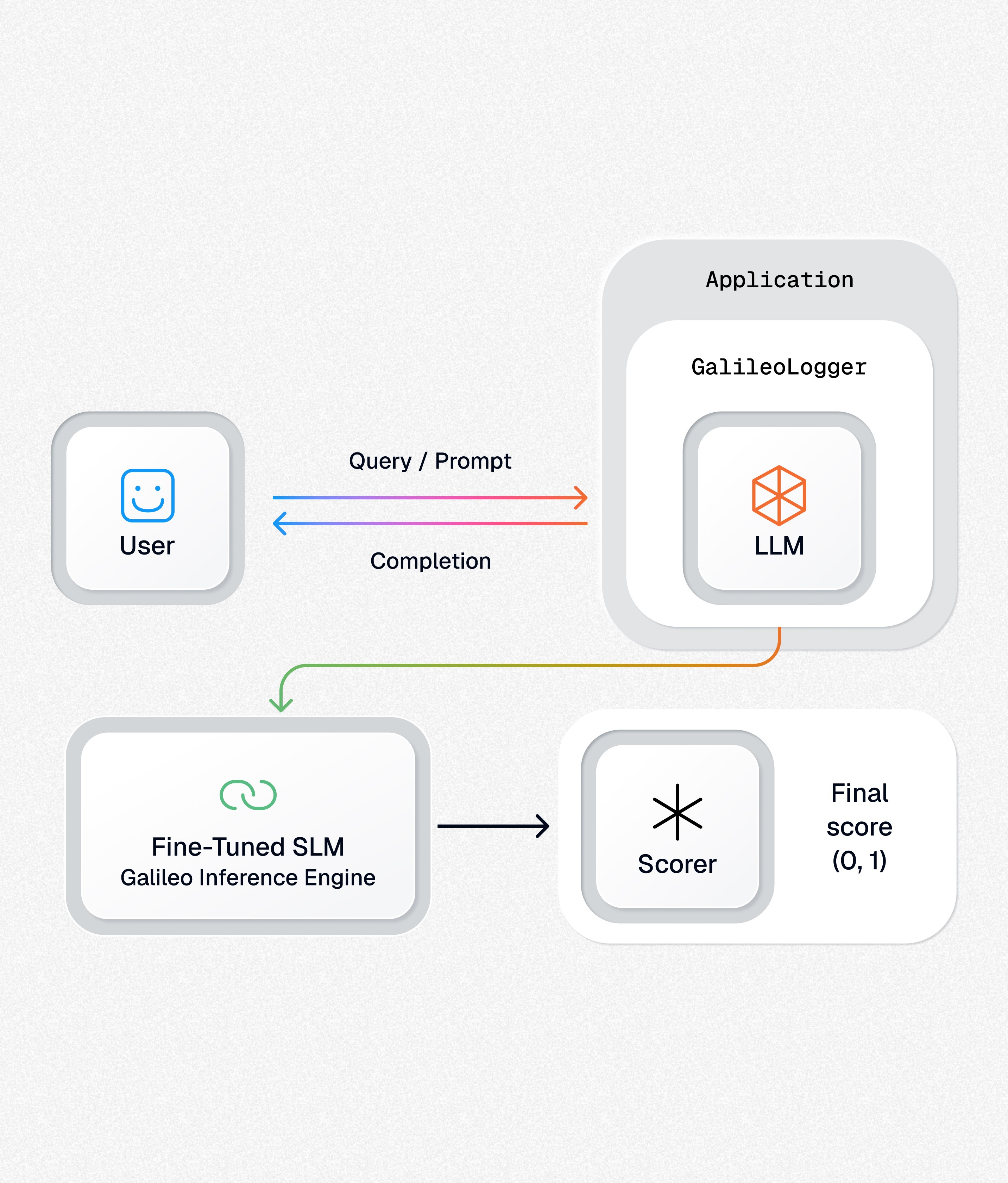

Step 4

Fine-tuning the SLM

Step 5

Step 6

Recycle Learnings

Trusted by enterprises, loved by developers

Ready to start?

Take our free developer course and learn how to execute eval engineering in your projects today.