Dec 21, 2025

How to Build Human-in-the-Loop Oversight for Production AI Agents

Jackson Wells

Integrated Marketing

Picture this: Your customer service agent just approved a $50,000 refund to a fraudulent account. Your CFO wants answers about how an AI system made a financial decision of this magnitude without oversight. This scenario illustrates why human-in-the-loop (HITL) agent oversight has become non-negotiable for production AI deployments.

This guide demonstrates how to build production-ready HITL systems that balance autonomous efficiency with safety through confidence-based escalation, regulatory compliance frameworks, and purpose-built architectures. You'll learn quantifiable thresholds, architectural patterns, and operational strategies for reliable agent oversight.

TLDR:

Gartner predicts that more than 40% of agentic AI projects will be canceled by the end of 2027 due to escalating costs, unclear business value, or inadequate risk controls

Set confidence thresholds at 80-90% based on your risk tolerance and domain requirements

Target 10-15% escalation rates for sustainable review operations without reviewer bottlenecks

Architectural patterns balance autonomy with safety across different risk profiles

EU AI Act and FDA require demonstrable human oversight for high-risk systems

We recently explored this topic on our Chain of Thought podcast, where industry experts shared practical insights and real-world implementation strategies:

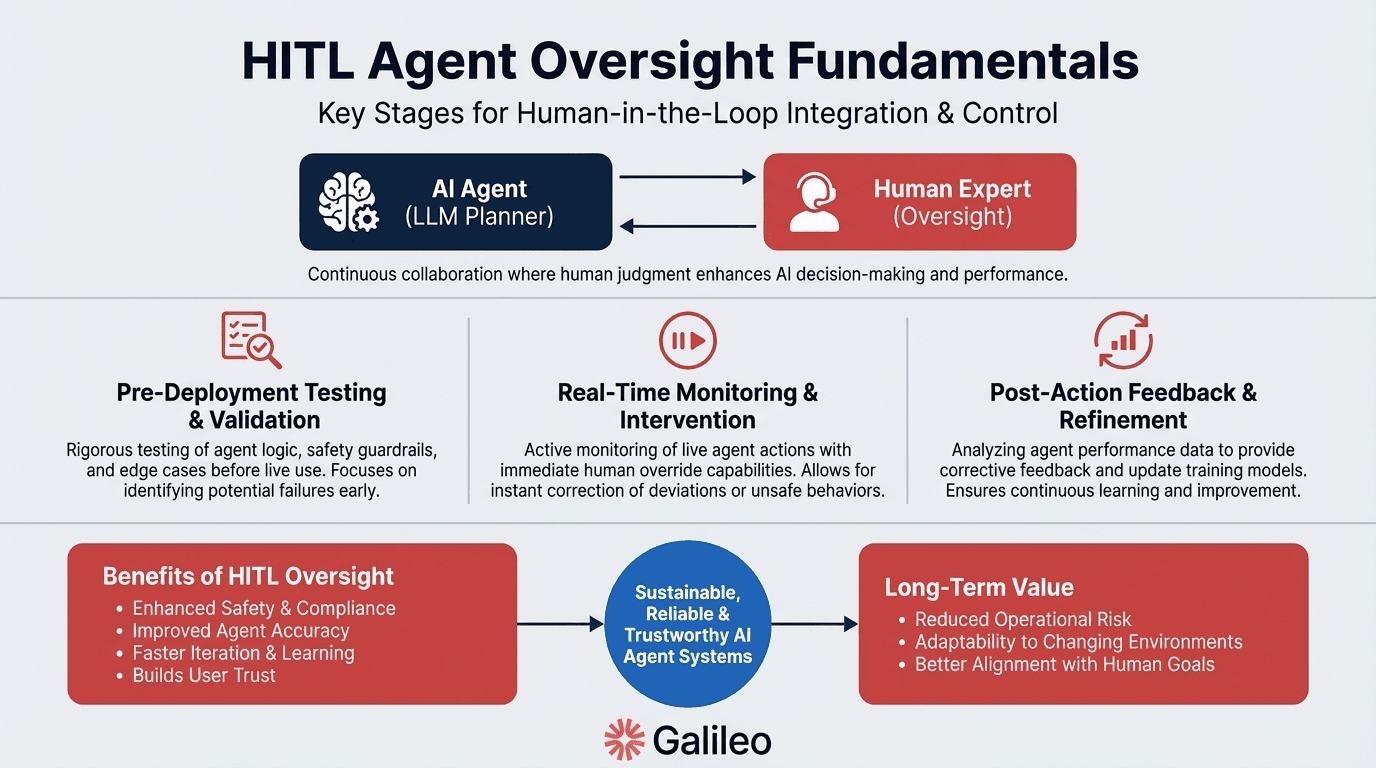

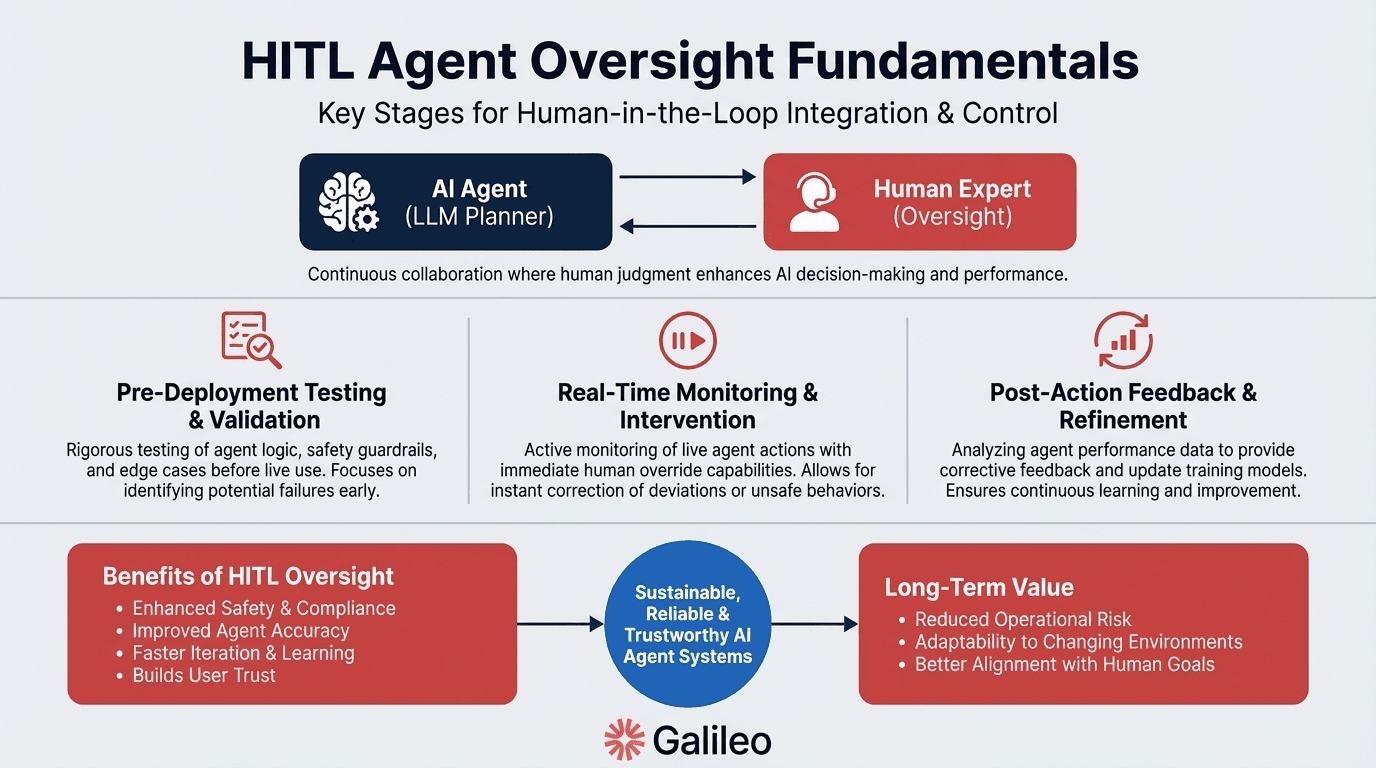

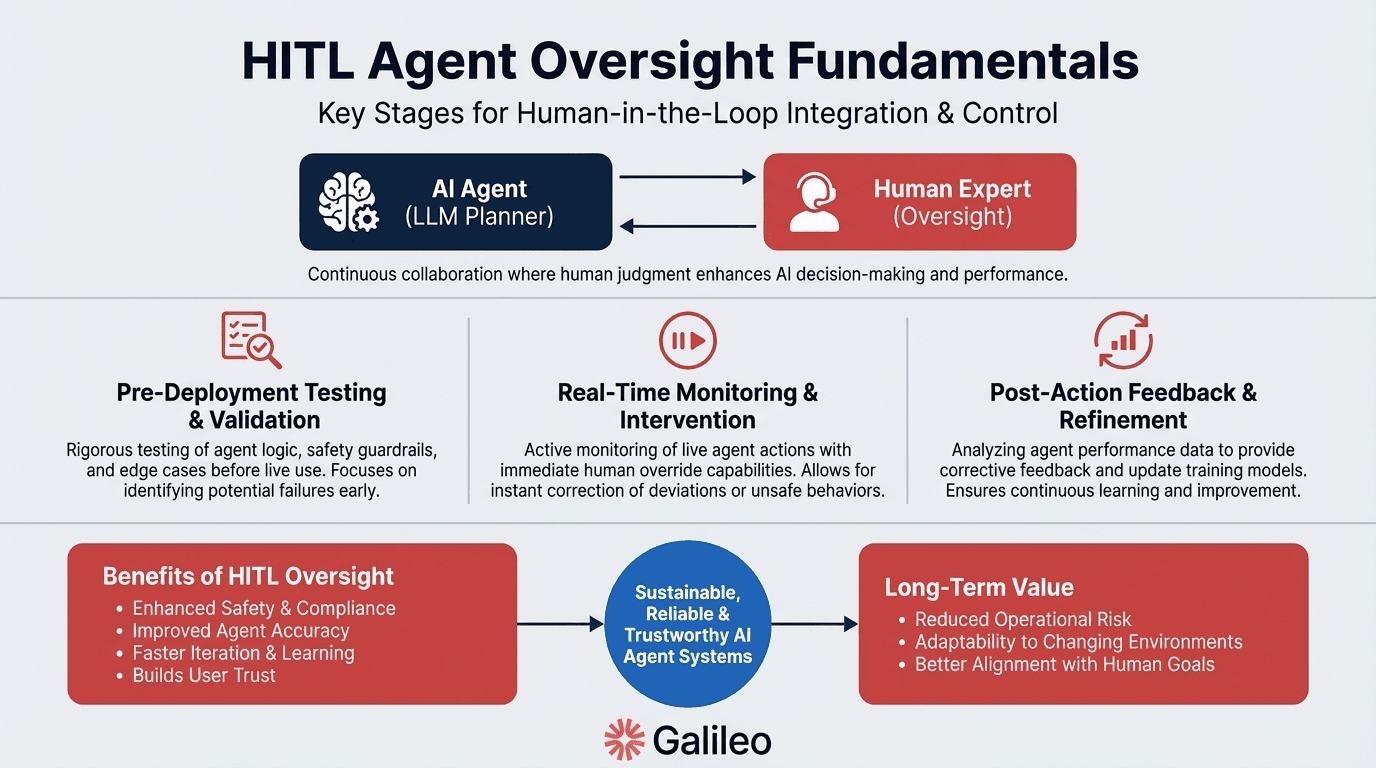

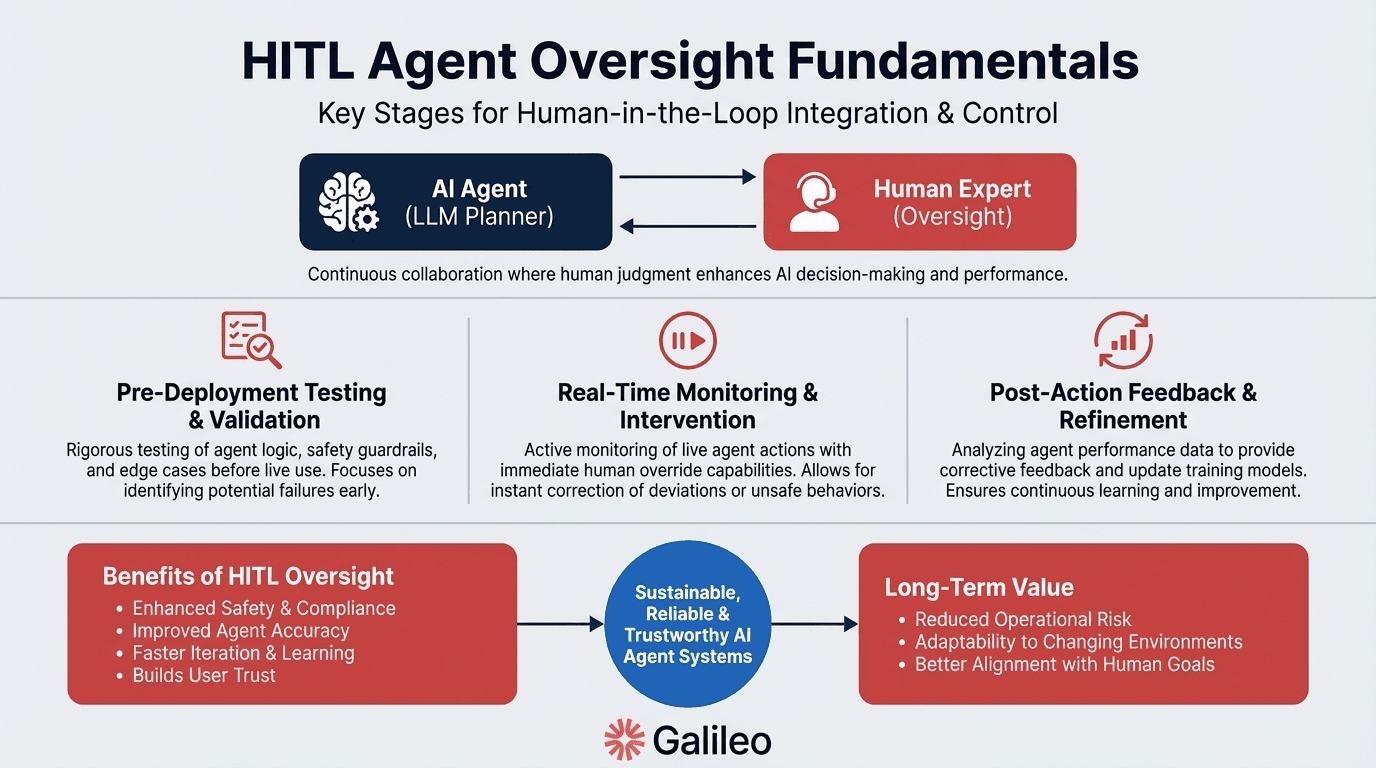

What Is Human-in-the-Loop (HITL) Agent Oversight?

Human-in-the-loop (HITL) agent oversight is an architectural approach that integrates structured human intervention points into autonomous AI systems, enabling operators to review, approve, or override agent decisions at predetermined risk thresholds. HITL architecture maintains automation efficiency for routine decisions while ensuring human expertise guides high-stakes choices involving financial transactions, customer relationships, or regulatory compliance.

Your agents operate across a spectrum from fully autonomous execution to mandatory human approval. Your customer service agent handles most inquiries without intervention but automatically escalates high-risk scenarios: large refunds, VIP accounts, complex judgments, and decisions below your confidence thresholds.

Routine decisions execute autonomously while experienced operators handle scenarios requiring relationship management, risk assessment, or context beyond training data. Your operational escalation rates should stay within the 10-15% range sustainable for review operations.

Gartner predicts that more than 40% of agentic AI projects will be canceled by the end of 2027, citing escalating costs, unclear business value, or inadequate risk controls. HITL architecture provides a governance framework that balances innovation velocity with operational safety, which is the fundamental requirement for executive confidence in agent deployments.

The reliability crisis driving human oversight requirements

Your production AI agent systems face a documented reliability crisis. Research on controlled benchmarks has revealed substantial failure rates, and production deployments fare worse: real-world complexity, edge cases, and cascading errors push failure rates well above those seen in test environments. Oversight architectures address these reliability gaps directly.

Simultaneously, you're navigating a critical adoption gap: while 50% of organizations are expected to launch agentic AI pilots by 2027, only 23% currently scale AI in production successfully.

When you deploy high-risk AI agents in regulated industries like financial services or healthcare, human oversight transforms from architectural consideration to legal requirement. The EU AI Act mandates human oversight capabilities for all high-risk AI systems, with specific requirements:

Natural persons must oversee AI system operation

Human operators need authority to intervene in critical decisions

Systems must enable independent review of AI recommendations

Override mechanisms must function without technical barriers

Regulatory frameworks and guidance, including GDPR and rules from the SEC, CFPB, and FDA, expect your AI systems to enable meaningful human intervention in decision-making. Your agents shouldn't make credit, insurance underwriting, or employment decisions without human review mechanisms.

Healthcare deployments must enable professionals to independently review the basis for AI recommendations without relying solely on automated outputs for patient care decisions. These requirements create baseline architectural constraints ensuring your systems demonstrate risk management to auditors and regulators.

What Triggers Determine When Agents Escalate to Humans?

Your production HITL implementations require precise escalation criteria distinguishing when autonomous execution proceeds versus when human judgment becomes mandatory. Your trigger framework should combine confidence score thresholds, risk classifications, escalation rate monitoring, and contextual factors. Together, these create a comprehensive safety net balancing automation efficiency with appropriate human oversight.

Set confidence score thresholds

Confidence thresholds in the 80-90% range serve as quantifiable escalation points. Decisions above this threshold may proceed autonomously, while those below trigger human intervention.

Financial services typically use 90-95% thresholds due to monetary impact. Customer service might accept 80-85% for routine inquiries. Healthcare applications often require 95%+ given patient safety implications. For multi-agent systems, apply more conservative thresholds because uncertainty compounds across handoffs. If three chained agents each report 90% confidence and their errors are independent, end-to-end confidence is roughly 0.9 × 0.9 × 0.9 ≈ 73%, which falls below an 80% threshold even though every individual step cleared it.
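The compounding effect in multi-agent chains can be sketched in a few lines of Python. This is a minimal illustration, assuming each agent's step succeeds independently so that confidences multiply; the function names and the 0.80 chain-level threshold are hypothetical choices, not part of any specific framework.

```python
from math import prod


def chain_confidence(step_confidences):
    """End-to-end confidence, assuming each step succeeds independently."""
    return prod(step_confidences)


def should_escalate(step_confidences, threshold=0.80):
    """Escalate when the compounded confidence drops below the threshold."""
    return chain_confidence(step_confidences) < threshold


three_agent_chain = [0.90, 0.90, 0.90]
print(round(chain_confidence(three_agent_chain), 3))  # 0.729
print(should_escalate(three_agent_chain))             # True
```

If steps are correlated, the product understates or overstates the true end-to-end confidence, so treat it as a conservative planning heuristic rather than a measurement.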

Monitor escalation rates as system health indicators

Target a 10-15% escalation rate for sustainable human review operations. Rates around 20% remain manageable but indicate more manual intervention than optimal. Rates approaching 60% signal that thresholds or confidence scores are miscalibrated and need to be retuned.

Monitor this alongside human override rate: the percentage of escalated decisions where reviewers reject the agent's recommendation. These paired metrics provide executive visibility into system reliability while identifying threshold optimization opportunities.
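Both metrics are simple ratios over a log of decisions. The sketch below assumes a hypothetical `Decision` record with `escalated` and `overridden` flags; the health labels reuse the 15% and 60% figures from the text, and treating everything in between as a single "elevated" band is an assumption made for illustration.

```python
from dataclasses import dataclass


@dataclass
class Decision:
    escalated: bool
    overridden: bool = False  # only meaningful when escalated is True


def oversight_metrics(decisions):
    """Return (escalation_rate, override_rate) for a batch of decisions."""
    total = len(decisions)
    escalated = [d for d in decisions if d.escalated]
    escalation_rate = len(escalated) / total if total else 0.0
    # Override rate is measured over escalated decisions only.
    overrides = sum(d.overridden for d in escalated)
    override_rate = overrides / len(escalated) if escalated else 0.0
    return escalation_rate, override_rate


def health_label(escalation_rate):
    """Map an escalation rate onto coarse bands (cut points are assumptions)."""
    if escalation_rate <= 0.15:
        return "within target"
    if escalation_rate < 0.60:
        return "elevated"
    return "miscalibrated"


# 100 decisions: 12 escalated, and reviewers rejected 3 of those.
batch = [Decision(True, i < 3) for i in range(12)] + [Decision(False)] * 88
print(oversight_metrics(batch))  # (0.12, 0.25)
```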

Apply risk classification and context triggers

The EU AI Act establishes a four-tier risk categorization that determines when oversight is mandatory: unacceptable risk (prohibited), high risk (mandatory oversight), limited risk (transparency obligations), or minimal risk (no specific requirements). Context-dependent factors trigger escalation independently of confidence scores:

Financial thresholds: Transaction amounts exceeding limits require approval regardless of confidence

Reputational risk: VIP clients or public-facing decisions demand executive review

Stakeholder sensitivity: Time-critical decisions affecting multiple departments trigger human judgment

Task complexity: Situations outside the training distribution require human review, since the agent's confidence is least reliable there

Multi-agent chains require more conservative thresholds. Monitor chain length, confidence decay across handoffs, inter-agent disagreement, and circular dependency detection. This layered approach captures risks that confidence scores alone might miss.
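The layered triggers above can be combined into a single check that returns every reason an action should be escalated. This is a sketch under stated assumptions: the `ActionContext` fields, the default limits, and the reason labels are all hypothetical and would come from your own risk policy.

```python
from dataclasses import dataclass


@dataclass
class ActionContext:
    confidence: float
    amount_usd: float = 0.0
    is_vip: bool = False
    out_of_distribution: bool = False
    chain_length: int = 1


def escalation_reasons(ctx, confidence_threshold=0.85,
                       amount_limit_usd=1000.0, max_chain_length=3):
    """Collect all triggered reasons; an empty list means proceed autonomously."""
    reasons = []
    # Context triggers fire regardless of how confident the agent is.
    if ctx.amount_usd > amount_limit_usd:
        reasons.append("financial_threshold")
    if ctx.is_vip:
        reasons.append("reputational_risk")
    if ctx.out_of_distribution:
        reasons.append("task_complexity")
    if ctx.chain_length > max_chain_length:
        reasons.append("chain_length")
    # The confidence check is one layer among several, not the only gate.
    if ctx.confidence < confidence_threshold:
        reasons.append("low_confidence")
    return reasons


refund = ActionContext(confidence=0.99, amount_usd=50_000)
print(escalation_reasons(refund))  # ['financial_threshold']
```

Returning the full list of reasons, rather than a single boolean, gives reviewers context and lets you track which trigger drives most escalations.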

Which Architectural Patterns Work for Production HITL Systems?

Effective human oversight requires purpose-built architecture, not monitoring bolted onto autonomous systems. How do you design systems where human judgment is a first-class component rather than an afterthought?

The key is selecting architectures that match your specific workflow requirements: synchronous patterns provide maximum control with latency penalties, asynchronous patterns maintain speed with delayed detection, and hybrid approaches balance both considerations.

Understanding these tradeoffs enables you to design HITL systems that maintain executive confidence while preserving the automation efficiency that justifies your AI investments.

Multi-tier oversight separating planning from execution

When your workflows span multiple departments and six-figure budgets, you should integrate oversight at the planning phase rather than just execution. Multi-tier oversight separates strategic planning from execution agents.

The LLM generates high-level action plans that human operators review for feasibility before authorization. Lower-level agents then execute approved plans with bounded autonomy, while escalation triggers activate for out-of-bounds scenarios.

This pattern suits complex workflows where strategic direction requires human judgment while tactical execution proceeds autonomously. You maintain control over high-level decisions that shape workflow direction while enabling autonomous execution of approved plans.

The architecture provides executive visibility into strategic choices without creating bottlenecks in tactical operations, demonstrating governance to stakeholders while preserving automation efficiency.
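A minimal sketch of this plan-then-execute split follows. It assumes a hypothetical `Plan` object whose bounds (allowed actions and a budget) are set at approval time; the executor runs steps autonomously and stops to escalate at the first out-of-bounds step.

```python
from dataclasses import dataclass


@dataclass
class Plan:
    steps: list            # list of (action_name, cost_usd) tuples
    allowed_actions: set   # bounds granted by the human reviewer
    budget_usd: float
    approved: bool = False


def approve(plan):
    """Called once a human reviewer has signed off on the plan."""
    plan.approved = True


def execute(plan):
    """Run approved steps within bounds; escalate the first step that exceeds them."""
    if not plan.approved:
        raise PermissionError("plan has not been approved by a human reviewer")
    spent, log = 0.0, []
    for action, cost in plan.steps:
        if action not in plan.allowed_actions or spent + cost > plan.budget_usd:
            log.append(("escalated", action))
            break  # stop and hand control back to a human
        spent += cost
        log.append(("executed", action))
    return log


plan = Plan(
    steps=[("send_email", 0.0), ("issue_credit", 200.0), ("wire_transfer", 5000.0)],
    allowed_actions={"send_email", "issue_credit"},
    budget_usd=1000.0,
)
approve(plan)
print(execute(plan))
```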

Synchronous approval pausing execution

Synchronous approval pauses your agent execution pending human authorization for high-risk operations. Your agent identifies an action requiring confirmation, then the orchestrator pauses and serializes state.

Your system returns FINISH status with invocationId while humans review via UI with full context. The session resumes with approval status, and your agent proceeds or aborts based on human decision.

Synchronous approval provides maximum control for high-risk operations where execution must not proceed without explicit authorization. This pattern introduces 0.5-2.0 second latency per decision but ensures no irreversible actions occur without explicit human approval.

You should use synchronous oversight for financial transactions exceeding thresholds, account modifications, data deletion, or any action that can't be easily reversed. The latency cost is justified when mistakes create irreversible consequences or regulatory violations.
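The pause, serialize, and resume cycle can be sketched as follows. This is an illustration only: the in-memory `PENDING` store, the status strings, and the function names are hypothetical stand-ins for whatever your orchestrator and durable storage provide.

```python
import json
import uuid

PENDING = {}  # in-memory stand-in for a durable store (assumption)


def request_approval(action, state):
    """Pause before a high-risk action and persist everything needed to resume."""
    invocation_id = str(uuid.uuid4())
    PENDING[invocation_id] = json.dumps({"action": action, "state": state})
    return {"status": "AWAITING_APPROVAL", "invocation_id": invocation_id}


def resume(invocation_id, approved, execute):
    """Restore the serialized state, then proceed or abort per the human decision."""
    saved = json.loads(PENDING.pop(invocation_id))
    if not approved:
        return {"status": "ABORTED", "action": saved["action"]}
    result = execute(saved["action"], saved["state"])
    return {"status": "COMPLETED", "result": result}


ticket = request_approval({"type": "refund", "amount_usd": 50_000},
                          {"customer_id": "c-123"})
# ... a reviewer inspects the pending action in a UI, then decides ...
outcome = resume(ticket["invocation_id"], approved=False,
                 execute=lambda action, state: "refund issued")
print(outcome["status"])  # ABORTED
```

Serializing state to JSON means the approval can outlive the process that requested it, which matters when reviews take longer than a request timeout.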

Asynchronous audit for speed with delayed review

Asynchronous audit allows your agents to execute autonomously while logging decisions for later human review. Near-zero latency maintains operational speed, but you accept delayed error detection. Your agents make decisions immediately, comprehensive logging captures full context and reasoning, periodic review queues surface decisions for human assessment, and corrective actions address issues retroactively.

This pattern suits content classification, recommendation systems, or internal processes where you can correct mistakes retroactively without severe consequences. Platforms like Galileo's Insights Engine automate failure pattern detection during asynchronous review, surfacing recurring issues that require attention.

You maintain the speed advantages of autonomous execution while ensuring human oversight identifies systematic problems requiring threshold adjustments or model retraining.
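As a rough sketch of the asynchronous pattern, the agent acts immediately and appends a full record to an audit log, and a separate job builds the review queue later. The record fields, the 0.85 cutoff, and the random sampling of high-confidence decisions are assumptions made for illustration.

```python
import random
import time


def execute_and_log(log, decision_id, action, confidence, reasoning):
    """Act immediately; record context and reasoning for later human review."""
    log.append({
        "decision_id": decision_id,
        "action": action,
        "confidence": confidence,
        "reasoning": reasoning,
        "timestamp": time.time(),
        "reviewed": False,
    })
    return action  # no waiting on a human


def build_review_queue(log, low_confidence=0.85, sample_rate=0.05, seed=None):
    """Surface all low-confidence decisions plus a random sample of the rest."""
    rng = random.Random(seed)
    queue = []
    for entry in log:
        if entry["reviewed"]:
            continue
        if entry["confidence"] < low_confidence or rng.random() < sample_rate:
            queue.append(entry)
    return queue


audit_log = []
execute_and_log(audit_log, "d1", "label:spam", 0.97, "matched known pattern")
execute_and_log(audit_log, "d2", "label:ok", 0.62, "ambiguous wording")
print([e["decision_id"] for e in build_review_queue(audit_log, sample_rate=0.0)])  # ['d2']
```

Sampling some high-confidence decisions as well helps catch confidently wrong outputs that a pure threshold would never surface.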

How Do You Maintain Efficiency While Implementing HITL Agent Oversight?

The fundamental tension in HITL design involves preserving your agent efficiency while ensuring safety.

Your production deployments reveal specific technical challenges: human review loops add 0.5-2.0 seconds of latency per decision, neural network overconfidence calls for calibration, avoiding reviewer bottlenecks means holding escalation rates to 10-15%, and feedback loops depend on systematic collection and continuous retraining.

Balance latency against safety requirements

Trading algorithms requiring human approval for each decision face a critical tradeoff: 2-second delays can cost millions in missed opportunities while immediate execution risks catastrophic errors. Human oversight creates unavoidable latency penalties.

Reaction delays typically range from 0.5 to 2.0 seconds depending on task complexity. These latency penalties can impact closed-loop system stability and may cause undesired oscillations in time-sensitive workflows.

However, you can manage this tradeoff through confidence-based routing. You escalate only low-confidence decisions to human review while high-confidence predictions proceed autonomously, though optimal thresholds vary by your deployment context.

Your architecture must balance synchronous oversight providing maximum safety but 0.5-2.0 second delay per decision, asynchronous audit offering near-zero latency but delayed error detection, and threshold-based routing providing a balanced approach suitable for most production scenarios.
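One way to express the hybrid approach is a small router that picks an oversight mode per decision. The sketch assumes two inputs, a calibrated confidence score and a flag for irreversible actions; the enum, function name, and 0.90 threshold are hypothetical.

```python
from enum import Enum


class Oversight(Enum):
    SYNCHRONOUS_APPROVAL = "synchronous_approval"
    ASYNCHRONOUS_AUDIT = "asynchronous_audit"


def choose_oversight(confidence, irreversible, threshold=0.90):
    """Pay the latency cost only when the action is irreversible or uncertain."""
    if irreversible or confidence < threshold:
        return Oversight.SYNCHRONOUS_APPROVAL
    return Oversight.ASYNCHRONOUS_AUDIT


print(choose_oversight(0.97, irreversible=False).value)  # asynchronous_audit
print(choose_oversight(0.97, irreversible=True).value)   # synchronous_approval
```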

This strategic framework enables you to demonstrate risk management to executives while maintaining the operational efficiency that justifies AI investments.

Calibrate confidence scores for reliable escalation

When your agents report 95% confidence but make incorrect predictions, threshold-based escalation breaks down. Neural networks exhibit systematic overconfidence, producing high confidence scores even for incorrect predictions. The result is under-escalation: risky decisions clear the threshold and proceed autonomously.
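A common way to quantify overconfidence is expected calibration error (ECE), a standard metric that is not named in this guide but fits the problem it describes: it bins predictions by reported confidence and averages the gap between confidence and observed accuracy in each bin. The sketch below is a plain-Python version with an assumed ten equal-width bins.

```python
def expected_calibration_error(confidences, correct, n_bins=10):
    """Weighted average of |mean confidence - accuracy| across confidence bins."""
    bins = [[] for _ in range(n_bins)]
    for conf, ok in zip(confidences, correct):
        index = min(int(conf * n_bins), n_bins - 1)  # a confidence of 1.0 goes in the last bin
        bins[index].append((conf, ok))
    total = len(confidences)
    ece = 0.0
    for members in bins:
        if not members:
            continue
        mean_conf = sum(c for c, _ in members) / len(members)
        accuracy = sum(ok for _, ok in members) / len(members)
        ece += (len(members) / total) * abs(mean_conf - accuracy)
    return ece


# Four decisions reported at 95% confidence, only three of them correct.
print(round(expected_calibration_error([0.95] * 4, [1, 1, 1, 0]), 2))  # 0.2
```

A well-calibrated agent has an ECE near zero; a large value means confidence thresholds are gating on numbers that do not match observed accuracy.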

Your production system requires these calibration approaches:

Training-time calibration: Aligning uncertainty estimates with observed error rates during model training

Temperature scaling: Post-hoc adjustment using validation sets to recalibrate probability distributions

Ensemble disagreement: High disagreement across ensemble members triggers escalation regardless of individual confidence

Conformal prediction: Statistical guarantees through prediction sets with coverage probabilities
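Of the approaches listed, temperature scaling is the simplest to illustrate: divide the logits by a single scalar T, chosen to minimize negative log-likelihood on a held-out validation set. The sketch below uses plain Python and a coarse grid search as a simplification; real implementations usually fit T with a gradient-based optimizer, and the toy validation data here is invented for illustration.

```python
import math


def softmax(logits, temperature=1.0):
    """Numerically stable softmax over logits divided by a temperature."""
    scaled = [z / temperature for z in logits]
    peak = max(scaled)
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def negative_log_likelihood(logit_rows, labels, temperature):
    """Mean NLL of the true labels at the given temperature."""
    losses = [-math.log(softmax(row, temperature)[label])
              for row, label in zip(logit_rows, labels)]
    return sum(losses) / len(losses)


def fit_temperature(logit_rows, labels, candidates=None):
    """Pick the temperature with the lowest validation NLL (grid search)."""
    if candidates is None:
        candidates = [0.5 + 0.1 * i for i in range(46)]  # 0.5 through 5.0
    return min(candidates,
               key=lambda t: negative_log_likelihood(logit_rows, labels, t))


# Toy validation set: the model is ~98% confident every time but right 3 times in 4.
val_logits = [[4.0, 0.0], [4.0, 0.0], [4.0, 0.0], [4.0, 0.0]]
val_labels = [0, 0, 0, 1]

T = fit_temperature(val_logits, val_labels)
raw = max(softmax([4.0, 0.0]))
calibrated = max(softmax([4.0, 0.0], T))
print(T > 1.0, calibrated < raw)  # True True
```

A fitted temperature above 1 softens the distribution, which pulls reported confidence down toward observed accuracy without changing which class is predicted.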

Specialized evaluation models like Luna-2 SLMs provide calibrated confidence scoring optimized for agent decision assessment. The critical anti-pattern is using raw softmax probabilities as confidence scores without calibration, which leads to systematic over-autonomy in incorrect predictions and undermines your entire escalation strategy.
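The ensemble-disagreement approach from the list above also lends itself to a short sketch. It assumes you can obtain several independent predictions for the same decision (for example from different models or repeated samples); the function names and the 0.8 agreement floor are hypothetical.

```python
from collections import Counter


def ensemble_vote(predictions):
    """Return the majority label and the fraction of members that agree with it."""
    label, votes = Counter(predictions).most_common(1)[0]
    return label, votes / len(predictions)


def route(predictions, min_agreement=0.8):
    """Escalate when agreement is low, whatever each member's own confidence."""
    label, agreement = ensemble_vote(predictions)
    decision = "auto" if agreement >= min_agreement else "escalate"
    return decision, label


print(route(["approve"] * 5))                                      # ('auto', 'approve')
print(route(["approve", "approve", "approve", "deny", "deny"]))    # ('escalate', 'approve')
```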

Integrate systematic feedback loops for continuous improvement

Your human corrections must systematically improve agent performance, not just fix individual errors.

Structured feedback collection has four parts: standardized interfaces that require reviewers to provide reasoning alongside corrections, categorical feedback that enables pattern analysis across similar scenarios, automated pipelines that feed corrections into retraining workflows, and version control that tracks how model behavior changes after feedback is incorporated.
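These requirements map naturally onto a structured record. The sketch below is one possible shape, not a prescribed schema: the `ReviewFeedback` fields, the category strings, and the training-row format are all assumptions.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class ReviewFeedback:
    decision_id: str
    agent_output: str
    corrected_output: str
    category: str        # categorical label for pattern analysis
    reasoning: str       # reviewers must explain the correction
    model_version: str   # ties the correction to the model that produced the output

    def __post_init__(self):
        if not self.reasoning.strip():
            raise ValueError("reasoning is required alongside every correction")


def category_counts(feedback):
    """Aggregate corrections by category to expose recurring failure patterns."""
    return Counter(item.category for item in feedback)


def to_training_rows(feedback):
    """Convert corrections into rows a retraining pipeline could consume."""
    return [{"input_id": item.decision_id,
             "target": item.corrected_output,
             "source_model": item.model_version} for item in feedback]


reviews = [
    ReviewFeedback("d1", "approve refund", "deny refund",
                   "fraud_signal_missed", "account created yesterday", "v1.4"),
    ReviewFeedback("d2", "approve refund", "deny refund",
                   "fraud_signal_missed", "mismatched billing address", "v1.4"),
]
print(category_counts(reviews).most_common(1))  # [('fraud_signal_missed', 2)]
```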

Purpose-built platforms like Galileo's agent observability provide automated feedback collection with traceable lineage from human intervention to model improvement.

This systematic approach transforms human oversight from an operational cost into a continuous improvement mechanism, enabling you to demonstrate ROI through measurable reliability gains that reduce escalation rates while maintaining safety thresholds.

Building agent systems you can trust

Your board wants agent ROI metrics, but production deployments reveal substantial reliability gaps. The distance between laboratory performance and real-world behavior is why systematic oversight architecture is essential.

Here's how Galileo helps you with AI guardrails:

Automated quality guardrails in CI/CD: Galileo integrates directly into your development workflow, running comprehensive evals on every code change and blocking releases that fail quality thresholds

Multi-dimensional response evaluation: With Galileo's Luna-2 evaluation models, you can assess every output across dozens of quality dimensions—correctness, toxicity, bias, adherence—at 97% lower cost than traditional LLM-based evaluation approaches

Real-time runtime protection: Galileo's Agent Protect scans every prompt and response in production, blocking harmful outputs before they reach users while maintaining detailed compliance logs for audit requirements

Intelligent failure detection: Galileo’s Insights Engine automatically clusters similar failures, surfaces root-cause patterns, and recommends fixes, reducing debugging time while building institutional knowledge

Human-in-the-loop optimization: Galileo's Continuous Learning via Human Feedback (CLHF) transforms expert reviews into reusable evaluators, accelerating iteration while maintaining quality standards

Discover how Galileo provides enterprise-grade AI guardrails with pre-built policies, real-time metrics, and ready-made integrations.

Frequently Asked Questions About Human-in-the-Loop Agent Oversight

What is human-in-the-loop (HITL) in AI agent systems?

Human-in-the-loop (HITL) integrates structured human intervention points throughout AI agent decision-making processes. HITL architectures pause autonomous execution at predetermined trigger points—when confidence falls below thresholds, risk levels exceed acceptable ranges, or regulatory requirements mandate review. Effective HITL systems typically target 10-15% escalation rates, meaning 85-90% of decisions execute autonomously while critical cases receive human oversight.

How do I determine the right confidence threshold for my agent system?

Confidence thresholds commonly range from 80-90%, but your optimal threshold depends on risk tolerance, domain requirements, and operational capacity. Financial services typically use 90-95% thresholds due to regulatory scrutiny. Customer service might accept 80-85% for routine inquiries. Healthcare applications often require 95%+ given patient safety implications. Start conservatively at 90-95%, then monitor escalation metrics to adjust toward 10-15% total escalation.

What escalation rate should I target for production HITL systems?

Your production systems should typically target 10-15% escalation rate for sustainable human review operations. This maintains manageable reviewer workload with adequate protection. Rates around 20% remain operational but indicate higher manual intervention than optimal. When rates reach 60%, excessive escalation creates bottlenecks requiring system recalibration. Monitor this alongside human override rate to identify threshold optimization opportunities.

Should I implement synchronous or asynchronous human oversight?

Choose synchronous oversight when decisions are high-stakes and irreversible; use asynchronous audit for lower-risk scenarios where delayed review is acceptable. Synchronous patterns provide maximum control but introduce 0.5-2.0 second latency per decision. Asynchronous audit maintains near-zero latency but creates delayed error detection. Most production systems implement hybrid approaches using confidence-based routing.

How does Galileo help implement human-in-the-loop oversight?

Galileo provides comprehensive infrastructure for production HITL oversight. The Insights Engine automatically identifies agent failure patterns and incorporates human feedback through adaptive learning. Galileo Protect implements real-time guardrails for prompt injection, toxic language, PII leakage, and hallucination detection. The platform's confidence-based escalation routing automatically directs uncertain decisions to human review while maintaining comprehensive audit trails for regulatory compliance.

Picture this: Your customer service agent just approved a $50,000 refund to a fraudulent account. Your CFO wants answers about how an AI system made a financial decision of this magnitude without oversight. This scenario illustrates why human-in-the-loop (HITL) agent oversight has become non-negotiable for production AI deployments.

This guide demonstrates how to build production-ready HITL systems that balance autonomous efficiency with safety through confidence-based escalation, regulatory compliance frameworks, and purpose-built architectures. You'll learn quantifiable thresholds, architectural patterns, and operational strategies for reliable agent oversight.

TLDR:

Gartner predicts 40% of agentic AI projects may fail by 2027 due to reliability challenges

Set confidence thresholds at 80-90% based on your risk tolerance and domain requirements

Target 10-15% escalation rates for sustainable review operations without reviewer bottlenecks

Architectural patterns balance autonomy with safety across different risk profiles

EU AI Act and FDA require demonstrable human oversight for high-risk systems

We recently explored this topic on our Chain of Thought podcast, where industry experts shared practical insights and real-world implementation strategies:

What Is Human-in-the-Loop (HITL) Agent Oversight?

Human-in-the-loop (HITL) agent oversight is an architectural approach that integrates structured human intervention points into autonomous AI systems, enabling operators to review, approve, or override agent decisions at predetermined risk thresholds. HITL architecture maintains automation efficiency for routine decisions while ensuring human expertise guides high-stakes choices involving financial transactions, customer relationships, or regulatory compliance.

Your agents operate across a spectrum from fully autonomous execution to mandatory human approval. Your customer service agent handles most inquiries without intervention but automatically escalates high-risk scenarios: large refunds, VIP accounts, complex judgments, and decisions below your confidence thresholds.

Routine decisions execute autonomously while experienced operators handle scenarios requiring relationship management, risk assessment, or context beyond training data. Your operational escalation rates should stay within the 10-15% range sustainable for review operations.

Gartner predicts 40% of agentic AI projects will be canceled by 2027. Cancellations stem from escalating costs, unclear business value, or inadequate risk controls. HITL architecture provides governance frameworks balancing innovation velocity with operational safety: the fundamental requirement for executive confidence in agent deployments.

The reliability crisis driving human oversight requirements

Your production AI agent systems face a documented reliability crisis. Mandatory oversight architectures address these reliability gaps directly. Research on controlled benchmarks has revealed substantial failure rates, while production deployments face even more severe challenges. Real-world complexity, edge cases, and cascading errors create failure rates substantially higher than test environments.

Simultaneously, you're navigating a critical adoption gap: while 50% of organizations are expected to launch agentic AI pilots by 2027, only 23% currently scale AI in production successfully.

When you deploy high-risk AI agents in regulated industries like financial services or healthcare, human oversight transforms from architectural consideration to legal requirement. The EU AI Act mandates human oversight capabilities for all high-risk AI systems, with specific requirements:

Natural persons must oversee AI system operation

Human operators need authority to intervene in critical decisions

Systems must enable independent review of AI recommendations

Override mechanisms must function without technical barriers

Regulatory frameworks from GDPR, SEC, CFPB, and FDA guidance all require that your AI systems enable meaningful human intervention in decision-making. Your agents can't make credit decisions, insurance underwriting, or employment recommendations without human review mechanisms.

Healthcare deployments must enable professionals to independently review the basis for AI recommendations without relying solely on automated outputs for patient care decisions. These requirements create baseline architectural constraints ensuring your systems demonstrate risk management to auditors and regulators.

What Triggers Determine When Agents Escalate to Humans?

Your production HITL implementations require precise escalation criteria distinguishing when autonomous execution proceeds versus when human judgment becomes mandatory. Your trigger framework should combine confidence score thresholds, risk classifications, escalation rate monitoring, and contextual factors. Together, these create a comprehensive safety net balancing automation efficiency with appropriate human oversight.

Set confidence score thresholds

Confidence thresholds in the 80-90% range serve as quantifiable escalation points. Decisions above this threshold may proceed autonomously, while those below trigger human intervention.

Financial services typically use 90-95% thresholds due to monetary impact. Customer service might accept 80-85% for routine inquiries. Healthcare applications often require 95%+ given patient safety implications. For multi-agent systems, apply more conservative thresholds due to cumulative confidence degradation—a three-agent chain requires escalation even with 90% individual confidence due to compound uncertainty.

Monitor escalation rates as system health indicators

Target 10-15% escalation rate for sustainable human review operations. Rates around 20% indicate manageable operations with higher manual intervention than optimal. When rates reach 60%, excessive escalation signals system miscalibration requiring recalibration.

Monitor this alongside human override rate: the percentage of escalated decisions where reviewers reject the agent's recommendation. These paired metrics provide executive visibility into system reliability while identifying threshold optimization opportunities.

Apply risk classification and context triggers

The EU AI Act establishes multi-tier risk categorization determining mandatory escalation: unacceptable risk (prohibited), high risk (mandatory oversight), limited risk (transparency only), or minimal risk (no requirements). Context-dependent factors trigger escalation independent from confidence scores:

Financial thresholds: Transaction amounts exceeding limits require approval regardless of confidence

Reputational risk: VIP clients or public-facing decisions demand executive review

Stakeholder sensitivity: Time-critical decisions affecting multiple departments trigger human judgment

Task complexity: Situations outside training distribution exceed safety thresholds

Multi-agent chains require more conservative thresholds. Monitor chain length, confidence decay across handoffs, inter-agent disagreement, and circular dependency detection. This layered approach captures risks that confidence scores alone might miss.

Which Architectural Patterns Work for Production HITL Systems?

Effective human oversight requires purpose-built architecture, not monitoring bolted onto autonomous systems. How do you design systems where human judgment is a first-class component rather than an afterthought?

The key is selecting architectures that match your specific workflow requirements: synchronous patterns provide maximum control with latency penalties, asynchronous patterns maintain speed with delayed detection, and hybrid approaches balance both considerations.

Understanding these tradeoffs enables you to design HITL systems that maintain executive confidence while preserving the automation efficiency that justifies your AI investments.

Multi-tier oversight separating planning from execution

When your workflows span multiple departments and six-figure budgets, you should integrate oversight at the planning phase rather than just execution. Multi-tier oversight separates strategic planning from execution agents.

The LLM generates high-level action plans that human operators review for feasibility before authorization. Lower-level agents then execute approved plans with bounded autonomy, while escalation triggers activate for out-of-bounds scenarios.

This pattern suits complex workflows where strategic direction requires human judgment while tactical execution proceeds autonomously. You maintain control over high-level decisions that shape workflow direction while enabling autonomous execution of approved plans.

The architecture provides executive visibility into strategic choices without creating bottlenecks in tactical operations, demonstrating governance to stakeholders while preserving automation efficiency.

Synchronous approval pausing execution

Synchronous approval pauses your agent execution pending human authorization for high-risk operations. Your agent identifies an action requiring confirmation, then the orchestrator pauses and serializes state.

Your system returns FINISH status with invocationId while humans review via UI with full context. The session resumes with approval status, and your agent proceeds or aborts based on human decision.

Synchronous approval provides maximum control for high-risk operations where execution must not proceed without explicit authorization. This pattern introduces 0.5-2.0 second latency per decision but ensures no irreversible actions occur without explicit human approval.

You should use synchronous oversight for financial transactions exceeding thresholds, account modifications, data deletion, or any action that can't be easily reversed. The latency cost is justified when mistakes create irreversible consequences or regulatory violations.

Asynchronous audit for speed with delayed review

Asynchronous audit allows your agents to execute autonomously while logging decisions for later human review. Near-zero latency maintains operational speed, but you accept delayed error detection. Your agents make decisions immediately, comprehensive logging captures full context and reasoning, periodic review queues surface decisions for human assessment, and corrective actions address issues retroactively.

This pattern suits content classification, recommendation systems, or internal processes where you can correct mistakes retroactively without severe consequences. Platforms like Galileo's Insights Engine automate failure pattern detection during asynchronous review, surfacing recurring issues that require attention.

You maintain the speed advantages of autonomous execution while ensuring human oversight identifies systematic problems requiring threshold adjustments or model retraining.

How Do You Maintain Efficiency While Implementing HITL Agent Oversight?

The fundamental tension in HITL design involves preserving your agent efficiency while ensuring safety.

Your production deployments reveal specific technical challenges: human review loops introduce 0.5-2.0 seconds latency per decision, neural network overconfidence requires calibration techniques, human review bottlenecks require maintaining 10-15% escalation rates for sustainable operations, and feedback loop integration requires systematic collection and continuous retraining.

Balance latency against safety requirements

Trading algorithms requiring human approval for each decision face a critical tradeoff: 2-second delays can cost millions in missed opportunities while immediate execution risks catastrophic errors. Human oversight creates unavoidable latency penalties.

Reaction delays typically range from 0.5 to 2.0 seconds depending on task complexity. These latency penalties can impact closed-loop system stability and may cause undesired oscillations in time-sensitive workflows.

However, you can manage this tradeoff through confidence-based routing. You escalate only low-confidence decisions to human review while high-confidence predictions proceed autonomously, though optimal thresholds vary by your deployment context.

Your architecture must balance synchronous oversight providing maximum safety but 0.5-2.0 second delay per decision, asynchronous audit offering near-zero latency but delayed error detection, and threshold-based routing providing a balanced approach suitable for most production scenarios.

This strategic framework enables you to demonstrate risk management to executives while maintaining the operational efficiency that justifies AI investments.

Calibrate confidence scores for reliable escalation

When your agents report 95% confidence but make incorrect predictions, threshold-based escalation breaks down. Neural networks exhibit systematic overconfidence, producing high confidence scores even for incorrect predictions.

The result is under-escalation: risky decisions clear the threshold and proceed autonomously.

Your production system requires these calibration approaches:

Training-time calibration: Aligning uncertainty estimates with observed error rates during model training

Temperature scaling: Post-hoc adjustment using validation sets to recalibrate probability distributions

Ensemble disagreement: High disagreement across ensemble members triggers escalation regardless of individual confidence

Conformal prediction: Statistical guarantees through prediction sets with coverage probabilities
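To make temperature scaling concrete, here is a small standard-library sketch. It fits a single temperature by grid search over validation logits, minimizing negative log-likelihood; production code would more likely use a numerical optimizer. The toy validation data is invented for illustration, and the helper names are hypothetical.

```python
import math


def softmax(logits, temperature=1.0):
    """Softmax over logits divided by the temperature."""
    scaled = [z / temperature for z in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(z - peak) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def mean_nll(logit_rows, labels, temperature):
    """Average negative log-likelihood of the true labels."""
    losses = [-math.log(softmax(row, temperature)[label])
              for row, label in zip(logit_rows, labels)]
    return sum(losses) / len(losses)


def fit_temperature(logit_rows, labels):
    """Grid search over T in [0.5, 5.0]; a simple stand-in for an optimizer."""
    candidates = [0.5 + 0.05 * i for i in range(91)]
    return min(candidates, key=lambda t: mean_nll(logit_rows, labels, t))


# Toy validation set (made up): the model is right 4 times out of 5,
# yet reports about 88% confidence on every example.
val_logits = [[2.0, 0.0], [2.0, 0.0], [0.0, 2.0], [0.0, 2.0], [2.0, 0.0]]
val_labels = [0, 0, 1, 1, 1]

temperature = fit_temperature(val_logits, val_labels)
raw_confidence = max(softmax([2.0, 0.0]))
calibrated_confidence = max(softmax([2.0, 0.0], temperature))
print(f"T={temperature:.2f} raw={raw_confidence:.2f} calibrated={calibrated_confidence:.2f}")
```

A fitted temperature above 1 softens the distribution, pulling reported confidence down toward the accuracy actually observed on the validation set. The predicted label never changes, only the score that the escalation threshold is compared against.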

Specialized evaluation models like Luna-2 SLMs provide calibrated confidence scoring optimized for agent decision assessment. The critical anti-pattern is using raw softmax probabilities as confidence scores without calibration: incorrect predictions clear the threshold and run autonomously, which undermines your entire escalation strategy.
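The ensemble-disagreement and conformal-prediction items in the list above can be combined into one escalation check, sketched below. This is a simplified split-conformal variant that scores each calibration example as one minus the probability assigned to the true label; the calibration numbers, the 0.25 disagreement limit, and all function names are assumptions made for illustration.

```python
import math
from collections import Counter


def conformal_threshold(true_label_probs, alpha=0.2):
    """Score threshold targeting roughly (1 - alpha) coverage of the true label."""
    scores = sorted(1.0 - p for p in true_label_probs)
    n = len(scores)
    rank = math.ceil((n + 1) * (1 - alpha))  # 1-indexed rank of the quantile
    if rank > n:
        return 1.0  # too few calibration points: every label stays in the set
    return scores[rank - 1]


def prediction_set(probs, q_hat):
    """Labels whose nonconformity score is within the threshold."""
    return [label for label, p in enumerate(probs) if 1.0 - p <= q_hat]


def ensemble_disagreement(votes):
    """Fraction of ensemble members that disagree with the majority label."""
    majority_count = Counter(votes).most_common(1)[0][1]
    return 1.0 - majority_count / len(votes)


def should_escalate(probs, q_hat, votes, max_disagreement=0.25):
    # Escalate when the prediction set is empty or ambiguous, or when
    # ensemble members disagree too much, whatever the top confidence is.
    ambiguous = len(prediction_set(probs, q_hat)) != 1
    return ambiguous or ensemble_disagreement(votes) > max_disagreement


# Probability the model gave the correct label on 10 held-out examples (made up).
calibration = [0.95, 0.9, 0.85, 0.8, 0.75, 0.7, 0.65, 0.6, 0.4, 0.2]
q_hat = conformal_threshold(calibration)

unanimous = ["approve", "approve", "approve"]
split = ["approve", "approve", "deny"]
print(should_escalate([0.97, 0.03], q_hat, unanimous))
print(should_escalate([0.97, 0.03], q_hat, split))
print(should_escalate([0.55, 0.45], q_hat, unanimous))
```

A prediction set with exactly one label is treated as safe to automate; an empty set or a set with several labels signals that the model cannot commit to a single answer at the requested coverage level.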

Integrate systematic feedback loops for continuous improvement

Your human corrections must systematically improve agent performance, not just fix individual errors.

Structured feedback collection requires four components: standardized interfaces that make reviewers provide reasoning alongside corrections, categorical feedback that enables pattern analysis across similar scenarios, automated integration pipelines that feed corrections into retraining workflows, and version control that tracks model behavior changes after feedback is incorporated.
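One way to picture these requirements is a feedback record that refuses corrections without reasoning, carries a category for pattern analysis, and records the model version for lineage. The schema below is a hypothetical sketch: the field names, the category list, and the JSON Lines export are assumptions, not the format of any particular platform.

```python
import json
from collections import Counter
from dataclasses import asdict, dataclass

# Hypothetical category taxonomy; a real one would come from your review team.
ALLOWED_CATEGORIES = {"wrong_amount", "policy_violation", "missing_context", "other"}


@dataclass(frozen=True)
class ReviewFeedback:
    decision_id: str
    agent_output: str
    corrected_output: str
    category: str       # categorical label for pattern analysis
    reasoning: str      # free-text explanation, required
    model_version: str  # ties the correction to the model that produced it

    def __post_init__(self):
        if self.category not in ALLOWED_CATEGORIES:
            raise ValueError(f"unknown category: {self.category}")
        if not self.reasoning.strip():
            raise ValueError("reasoning is required alongside the correction")


def to_jsonl(feedback):
    """Serialize records as JSON Lines for a downstream retraining job."""
    return "\n".join(json.dumps(asdict(item), sort_keys=True) for item in feedback)


def category_counts(feedback):
    """Count corrections per category to surface recurring failure patterns."""
    return Counter(item.category for item in feedback)


batch = [
    ReviewFeedback("d1", "refund $500", "refund $50", "wrong_amount",
                   "Order total was $50, not $500.", "agent-v1"),
    ReviewFeedback("d2", "refund $90", "refund $9", "wrong_amount",
                   "Decimal point misread from the invoice.", "agent-v1"),
    ReviewFeedback("d3", "close account", "escalate to retention", "policy_violation",
                   "Account closures need a retention offer first.", "agent-v1"),
]
print(category_counts(batch).most_common(1))
```

Rejecting empty reasoning at construction time keeps unexplained corrections out of the retraining data, which is the point of the standardized-interface requirement.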

Purpose-built platforms like Galileo's agent observability provide automated feedback collection with traceable lineage from human intervention to model improvement.

This systematic approach transforms human oversight from an operational cost into a continuous improvement mechanism, enabling you to demonstrate ROI through measurable reliability gains that reduce escalation rates while maintaining safety thresholds.

Building agent systems you can trust

Your board wants agent ROI metrics, but production deployments reveal substantial reliability gaps. The gap between laboratory performance and real-world deployment is why systematic oversight architecture is essential.

Here's how Galileo helps you with AI guardrails:

Automated quality guardrails in CI/CD: Galileo integrates directly into your development workflow, running comprehensive evals on every code change and blocking releases that fail quality thresholds

Multi-dimensional response evaluation: With Galileo's Luna-2 evaluation models, you can assess every output across dozens of quality dimensions—correctness, toxicity, bias, adherence—at 97% lower cost than traditional LLM-based evaluation approaches

Real-time runtime protection: Galileo's Agent Protect scans every prompt and response in production, blocking harmful outputs before they reach users while maintaining detailed compliance logs for audit requirements

Intelligent failure detection: Galileo’s Insights Engine automatically clusters similar failures, surfaces root-cause patterns, and recommends fixes, reducing debugging time while building institutional knowledge

Human-in-the-loop optimization: Galileo's Continuous Learning via Human Feedback (CLHF) transforms expert reviews into reusable evaluators, accelerating iteration while maintaining quality standards

Discover how Galileo provides enterprise-grade AI guardrails with pre-built policies, real-time metrics, and ready-made integrations.

Frequently Asked Questions About Human-in-the-Loop Agent Oversight

What is human-in-the-loop (HITL) in AI agent systems?

Human-in-the-loop (HITL) integrates structured human intervention points throughout AI agent decision-making processes. HITL architectures pause autonomous execution at predetermined trigger points—when confidence falls below thresholds, risk levels exceed acceptable ranges, or regulatory requirements mandate review. Effective HITL systems typically target 10-15% escalation rates, meaning 85-90% of decisions execute autonomously while critical cases receive human oversight.

How do I determine the right confidence threshold for my agent system?

Confidence thresholds commonly range from 80% to 90%, but your optimal threshold depends on risk tolerance, domain requirements, and operational capacity. Financial services typically use 90-95% thresholds due to regulatory scrutiny. Customer service might accept 80-85% for routine inquiries. Healthcare applications often require 95%+ given patient safety implications. Start conservatively at 90-95%, then monitor escalation metrics and adjust toward a 10-15% total escalation rate.
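The tuning step can be sketched as follows: given calibrated confidence scores from recent decisions, find the highest threshold that keeps escalation within a target rate. The sample scores are invented and the function names are hypothetical. A real deployment would also enforce a domain-specific minimum threshold rather than trusting this number alone.

```python
import math


def escalation_rate(confidences, threshold):
    """Fraction of decisions that fall below the threshold."""
    return sum(1 for c in confidences if c < threshold) / len(confidences)


def threshold_for_target_rate(confidences, target_rate=0.15):
    """Highest observed score usable as a threshold without exceeding target_rate."""
    if not confidences:
        raise ValueError("need at least one confidence score")
    if not 0.0 <= target_rate < 1.0:
        raise ValueError("target_rate must be in [0, 1)")
    ordered = sorted(confidences)
    allowed = math.floor(target_rate * len(ordered))  # decisions we may escalate
    # Escalating strictly below ordered[allowed] escalates at most `allowed` items.
    return ordered[allowed]


# Calibrated confidence for 10 recent decisions (made-up sample).
recent = [0.99, 0.97, 0.96, 0.95, 0.93, 0.92, 0.91, 0.88, 0.84, 0.62]

conservative_rate = escalation_rate(recent, 0.90)
tuned_threshold = threshold_for_target_rate(recent, target_rate=0.15)
tuned_rate = escalation_rate(recent, tuned_threshold)
print(f"at 0.90: {conservative_rate:.0%} escalated; "
      f"tuned threshold {tuned_threshold:.2f}: {tuned_rate:.0%} escalated")
```

In this sample the conservative 0.90 starting point escalates 30% of decisions, while lowering the threshold to 0.84 brings the rate to 10%. Whether that lower threshold is acceptable remains a risk decision, not just an arithmetic one.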

What escalation rate should I target for production HITL systems?

Your production systems should typically target a 10-15% escalation rate for sustainable human review operations. This keeps reviewer workload manageable while still providing adequate protection. Rates around 20% remain operational but indicate more manual intervention than is optimal. When rates reach 60%, excessive escalation creates bottlenecks and the system needs recalibration. Monitor this alongside human override rate to identify threshold optimization opportunities.
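A monitoring check for these two metrics could be sketched like this. The 10-15% target and the 60% recalibration point come from the text above; the band names, the treatment of everything between 15% and 60% as "elevated", and the "below_target" label are assumptions for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ReviewStats:
    total_decisions: int
    escalated: int
    overridden: int  # escalated decisions where the reviewer rejected the agent


def escalation_rate(stats: ReviewStats) -> float:
    if stats.total_decisions <= 0:
        raise ValueError("total_decisions must be positive")
    return stats.escalated / stats.total_decisions


def override_rate(stats: ReviewStats) -> float:
    # Share of escalated decisions that reviewers rejected.
    return stats.overridden / stats.escalated if stats.escalated else 0.0


def escalation_health(stats: ReviewStats) -> str:
    rate = escalation_rate(stats)
    if rate >= 0.60:
        return "recalibrate"   # excessive escalation, reviewers are a bottleneck
    if rate > 0.15:
        return "elevated"      # operational, but more manual work than the target
    if rate >= 0.10:
        return "on_target"
    return "below_target"      # assumption: worth checking for under-escalation


week = ReviewStats(total_decisions=1000, escalated=120, overridden=30)
print(escalation_health(week), f"override rate {override_rate(week):.0%}")
```

Reading the two numbers together matters: a low override rate on escalated decisions suggests the threshold may be stricter than it needs to be, while a high override rate suggests escalation is catching real errors.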

Should I implement synchronous or asynchronous human oversight?

Choose synchronous oversight when decisions are high-stakes and irreversible; use asynchronous audit for lower-risk scenarios where delayed review is acceptable. Synchronous patterns provide maximum control but introduce 0.5-2.0 second latency per decision. Asynchronous audit maintains near-zero latency but creates delayed error detection. Most production systems implement hybrid approaches using confidence-based routing.

How does Galileo help implement human-in-the-loop oversight?

Galileo provides comprehensive infrastructure for production HITL oversight. The Insights Engine automatically identifies agent failure patterns and incorporates human feedback through adaptive learning. Galileo Protect implements real-time guardrails for prompt injection, toxic language, PII leakage, and hallucination detection. The platform's confidence-based escalation routing automatically directs uncertain decisions to human review while maintaining comprehensive audit trails for regulatory compliance.


Picture this: Your customer service agent just approved a $50,000 refund to a fraudulent account. Your CFO wants answers about how an AI system made a financial decision of this magnitude without oversight. This scenario illustrates why human-in-the-loop (HITL) agent oversight has become non-negotiable for production AI deployments.

This guide demonstrates how to build production-ready HITL systems that balance autonomous efficiency with safety through confidence-based escalation, regulatory compliance frameworks, and purpose-built architectures. You'll learn quantifiable thresholds, architectural patterns, and operational strategies for reliable agent oversight.

TLDR:

Gartner predicts 40% of agentic AI projects may fail by 2027 due to reliability challenges

Set confidence thresholds at 80-90% based on your risk tolerance and domain requirements

Target 10-15% escalation rates for sustainable review operations without reviewer bottlenecks

Architectural patterns balance autonomy with safety across different risk profiles

EU AI Act and FDA require demonstrable human oversight for high-risk systems

We recently explored this topic on our Chain of Thought podcast, where industry experts shared practical insights and real-world implementation strategies:

What Is Human-in-the-Loop (HITL) Agent Oversight?

Human-in-the-loop (HITL) agent oversight is an architectural approach that integrates structured human intervention points into autonomous AI systems, enabling operators to review, approve, or override agent decisions at predetermined risk thresholds. HITL architecture maintains automation efficiency for routine decisions while ensuring human expertise guides high-stakes choices involving financial transactions, customer relationships, or regulatory compliance.

Your agents operate across a spectrum from fully autonomous execution to mandatory human approval. Your customer service agent handles most inquiries without intervention but automatically escalates high-risk scenarios: large refunds, VIP accounts, complex judgments, and decisions below your confidence thresholds.

Routine decisions execute autonomously while experienced operators handle scenarios requiring relationship management, risk assessment, or context beyond training data. Your operational escalation rates should stay within the 10-15% range sustainable for review operations.

Gartner predicts 40% of agentic AI projects will be canceled by 2027. Cancellations stem from escalating costs, unclear business value, or inadequate risk controls. HITL architecture provides governance frameworks balancing innovation velocity with operational safety: the fundamental requirement for executive confidence in agent deployments.

The reliability crisis driving human oversight requirements

Your production AI agent systems face a documented reliability crisis. Mandatory oversight architectures address these reliability gaps directly. Research on controlled benchmarks has revealed substantial failure rates, while production deployments face even more severe challenges. Real-world complexity, edge cases, and cascading errors create failure rates substantially higher than test environments.

Simultaneously, you're navigating a critical adoption gap: while 50% of organizations are expected to launch agentic AI pilots by 2027, only 23% currently scale AI in production successfully.

When you deploy high-risk AI agents in regulated industries like financial services or healthcare, human oversight transforms from architectural consideration to legal requirement. The EU AI Act mandates human oversight capabilities for all high-risk AI systems, with specific requirements:

Natural persons must oversee AI system operation

Human operators need authority to intervene in critical decisions

Systems must enable independent review of AI recommendations

Override mechanisms must function without technical barriers

Regulatory frameworks from GDPR, SEC, CFPB, and FDA guidance all require that your AI systems enable meaningful human intervention in decision-making. Your agents can't make credit decisions, insurance underwriting, or employment recommendations without human review mechanisms.

Healthcare deployments must enable professionals to independently review the basis for AI recommendations without relying solely on automated outputs for patient care decisions. These requirements create baseline architectural constraints ensuring your systems demonstrate risk management to auditors and regulators.

What Triggers Determine When Agents Escalate to Humans?

Your production HITL implementations require precise escalation criteria distinguishing when autonomous execution proceeds versus when human judgment becomes mandatory. Your trigger framework should combine confidence score thresholds, risk classifications, escalation rate monitoring, and contextual factors. Together, these create a comprehensive safety net balancing automation efficiency with appropriate human oversight.

Set confidence score thresholds

Confidence thresholds in the 80-90% range serve as quantifiable escalation points. Decisions above this threshold may proceed autonomously, while those below trigger human intervention.

Financial services typically use 90-95% thresholds due to monetary impact. Customer service might accept 80-85% for routine inquiries. Healthcare applications often require 95%+ given patient safety implications. For multi-agent systems, apply more conservative thresholds due to cumulative confidence degradation—a three-agent chain requires escalation even with 90% individual confidence due to compound uncertainty.

Monitor escalation rates as system health indicators

Target 10-15% escalation rate for sustainable human review operations. Rates around 20% indicate manageable operations with higher manual intervention than optimal. When rates reach 60%, excessive escalation signals system miscalibration requiring recalibration.

Monitor this alongside human override rate: the percentage of escalated decisions where reviewers reject the agent's recommendation. These paired metrics provide executive visibility into system reliability while identifying threshold optimization opportunities.

Apply risk classification and context triggers

The EU AI Act establishes multi-tier risk categorization determining mandatory escalation: unacceptable risk (prohibited), high risk (mandatory oversight), limited risk (transparency only), or minimal risk (no requirements). Context-dependent factors trigger escalation independent from confidence scores:

Financial thresholds: Transaction amounts exceeding limits require approval regardless of confidence

Reputational risk: VIP clients or public-facing decisions demand executive review

Stakeholder sensitivity: Time-critical decisions affecting multiple departments trigger human judgment

Task complexity: Situations outside the training distribution are treated as exceeding safety thresholds and escalate

Multi-agent chains require more conservative thresholds. Monitor chain length, confidence decay across handoffs, inter-agent disagreement, and circular dependencies. This layered approach captures risks that confidence scores alone might miss.
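The layering described above can be sketched as a function that collects every reason a decision should escalate, so that context triggers fire even when confidence is high. All field names, trigger labels, and default limits here are hypothetical placeholders. Set them from your own risk policy.

```python
from dataclasses import dataclass


@dataclass
class DecisionContext:
    confidence: float
    amount: float = 0.0
    vip_client: bool = False
    out_of_distribution: bool = False
    chain_length: int = 1


def escalation_reasons(ctx, confidence_threshold=0.85,
                       amount_limit=10_000.0, max_chain_length=3):
    """Return every trigger that fires. An empty list means proceed."""
    reasons = []
    if ctx.confidence < confidence_threshold:
        reasons.append("low_confidence")
    # Context triggers are evaluated independently of the confidence score.
    if ctx.amount > amount_limit:
        reasons.append("financial_threshold")
    if ctx.vip_client:
        reasons.append("reputational_risk")
    if ctx.out_of_distribution:
        reasons.append("task_complexity")
    if ctx.chain_length > max_chain_length:
        reasons.append("chain_length")
    return reasons
```

Returning the full list of reasons, rather than a single boolean, gives reviewers context on why the decision reached them.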

Which Architectural Patterns Work for Production HITL Systems?

Effective human oversight requires purpose-built architecture, not monitoring bolted onto autonomous systems. How do you design systems where human judgment is a first-class component rather than an afterthought?

The key is selecting architectures that match your specific workflow requirements: synchronous patterns provide maximum control with latency penalties, asynchronous patterns maintain speed with delayed detection, and hybrid approaches balance both considerations.

Understanding these tradeoffs enables you to design HITL systems that maintain executive confidence while preserving the automation efficiency that justifies your AI investments.

Multi-tier oversight separating planning from execution

When your workflows span multiple departments and six-figure budgets, you should integrate oversight at the planning phase rather than just execution. Multi-tier oversight separates strategic planning from execution agents.

The LLM generates high-level action plans that human operators review for feasibility before authorization. Lower-level agents then execute approved plans with bounded autonomy, while escalation triggers activate for out-of-bounds scenarios.

This pattern suits complex workflows where strategic direction requires human judgment while tactical execution proceeds autonomously. You maintain control over high-level decisions that shape workflow direction while enabling autonomous execution of approved plans.

The architecture provides executive visibility into strategic choices without creating bottlenecks in tactical operations, demonstrating governance to stakeholders while preserving automation efficiency.
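As a rough illustration of this pattern, the Python sketch below refuses to run a plan that no human has approved, then executes steps autonomously until one would exceed the approved budget. The `Plan` structure, the budget-based bound, and the return shape are all assumptions chosen for the example. Real out-of-bounds checks will depend on your workflow.

```python
from dataclasses import dataclass


@dataclass
class Plan:
    steps: list            # (step_name, cost) pairs, e.g. [("issue_refund", 120.0)]
    budget: float          # bound authorized by the human reviewer
    approved: bool = False


def execute(plan):
    """Run an approved plan and stop at the first out-of-bounds step."""
    if not plan.approved:
        raise PermissionError("plan has not been approved by a human reviewer")
    spent = 0.0
    completed = []
    for name, cost in plan.steps:
        if spent + cost > plan.budget:
            # Out-of-bounds scenario: hand this step back to a human.
            return {"completed": completed, "escalated": name}
        spent += cost
        completed.append(name)
    return {"completed": completed, "escalated": None}
```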

Synchronous approval pausing execution

Synchronous approval pauses your agent execution pending human authorization for high-risk operations. Your agent identifies an action requiring confirmation, then the orchestrator pauses and serializes state.

Your system returns FINISH status with invocationId while humans review via UI with full context. The session resumes with approval status, and your agent proceeds or aborts based on human decision.

Synchronous approval provides maximum control for high-risk operations where execution must not proceed without explicit authorization. This pattern introduces 0.5-2.0 second latency per decision but ensures no irreversible actions occur without explicit human approval.

You should use synchronous oversight for financial transactions exceeding thresholds, account modifications, data deletion, or any action that can't be easily reversed. The latency cost is justified when mistakes create irreversible consequences or regulatory violations.

Asynchronous audit for speed with delayed review

Asynchronous audit allows your agents to execute autonomously while logging decisions for later human review. Near-zero latency maintains operational speed, but you accept delayed error detection. Your agents make decisions immediately, comprehensive logging captures full context and reasoning, periodic review queues surface decisions for human assessment, and corrective actions address issues retroactively.

This pattern suits content classification, recommendation systems, or internal processes where you can correct mistakes retroactively without severe consequences. Platforms like Galileo's Insights Engine automate failure pattern detection during asynchronous review, surfacing recurring issues that require attention.

You maintain the speed advantages of autonomous execution while ensuring human oversight identifies systematic problems requiring threshold adjustments or model retraining.
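A minimal Python sketch of the logging and review-queue side of this pattern follows. The `AuditRecord` fields and the `AuditLog` class are hypothetical, and surfacing the lowest-confidence decisions first is one possible prioritization, not a requirement of the pattern.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass
class AuditRecord:
    decision_id: str
    action: str
    confidence: float
    reasoning: str
    logged_at: datetime = field(
        default_factory=lambda: datetime.now(timezone.utc))
    reviewed: bool = False


class AuditLog:
    def __init__(self):
        self.records = []

    def log(self, record):
        """Record the decision without blocking execution."""
        self.records.append(record)

    def review_queue(self, size):
        """Surface unreviewed decisions, lowest confidence first."""
        pending = [r for r in self.records if not r.reviewed]
        return sorted(pending, key=lambda r: r.confidence)[:size]
```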

How Do You Maintain Efficiency While Implementing HITL Agent Oversight?

The fundamental tension in HITL design involves preserving your agent efficiency while ensuring safety.

Your production deployments reveal specific technical challenges: human review loops introduce 0.5-2.0 seconds latency per decision, neural network overconfidence requires calibration techniques, human review bottlenecks require maintaining 10-15% escalation rates for sustainable operations, and feedback loop integration requires systematic collection and continuous retraining.

Balance latency against safety requirements

Trading algorithms requiring human approval for each decision face a critical tradeoff: 2-second delays can cost millions in missed opportunities while immediate execution risks catastrophic errors. Human oversight creates unavoidable latency penalties.

Reaction delays typically range from 0.5 to 2.0 seconds depending on task complexity. These latency penalties can impact closed-loop system stability and may cause undesired oscillations in time-sensitive workflows.

However, you can manage this tradeoff through confidence-based routing. You escalate only low-confidence decisions to human review while high-confidence predictions proceed autonomously, though optimal thresholds vary by your deployment context.

Your architecture must weigh three options: synchronous oversight, which provides maximum safety at a cost of 0.5-2.0 seconds per decision; asynchronous audit, which offers near-zero latency but delayed error detection; and threshold-based routing, a balanced approach suitable for most production scenarios.
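One way to combine the three approaches is a small router that picks an oversight mode per decision. The Python sketch below is an assumption about how such a hybrid might look. The `route` function, its mode labels, and the default threshold are illustrative only.

```python
def route(confidence, reversible, threshold=0.85):
    """Pick an oversight mode for a single decision."""
    if not reversible:
        # Irreversible actions always wait for explicit approval.
        return "synchronous_approval"
    if confidence < threshold:
        # Low-confidence decisions go to a human reviewer.
        return "human_review"
    # Everything else executes immediately and is logged for later audit.
    return "autonomous_with_audit"
```

Checking reversibility before confidence means a highly confident agent still cannot perform an irreversible action on its own.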

This strategic framework enables you to demonstrate risk management to executives while maintaining the operational efficiency that justifies AI investments.

Calibrate confidence scores for reliable escalation

When your agents report 95% confidence but make incorrect predictions, threshold-based escalation stops working. Neural networks exhibit systematic overconfidence, producing high confidence scores even for incorrect predictions.

The result is under-escalation: risky decisions clear the threshold and proceed autonomously.

Your production system requires these calibration approaches:

Training-time calibration: Aligning uncertainty estimates with observed error rates during model training

Temperature scaling: Post-hoc adjustment using validation sets to recalibrate probability distributions

Ensemble disagreement: High disagreement across ensemble members triggers escalation regardless of individual confidence

Conformal prediction: Statistical guarantees through prediction sets with coverage probabilities

Specialized evaluation models like Luna-2 SLMs provide calibrated confidence scoring optimized for agent decision assessment. The critical anti-pattern is using raw softmax probabilities as confidence scores without calibration, which lets incorrect predictions proceed autonomously and undermines your entire escalation strategy.
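Two of the approaches above, temperature scaling and ensemble disagreement, are small enough to sketch in plain Python. This is a simplified illustration, not a production calibrator. It fits a single temperature by grid search over held-out logits, where real implementations typically use a gradient-based optimizer, and the function names, the candidate grid, and the `min_agreement` default are all assumptions.

```python
import math


def softmax(logits, temperature=1.0):
    """Softmax over logits divided by a temperature."""
    scaled = [z / temperature for z in logits]
    m = max(scaled)
    exps = [math.exp(s - m) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def nll(logit_rows, labels, temperature):
    """Mean negative log-likelihood of the true labels at this temperature."""
    total = 0.0
    for row, y in zip(logit_rows, labels):
        scaled = [z / temperature for z in row]
        m = max(scaled)
        log_norm = m + math.log(sum(math.exp(s - m) for s in scaled))
        total += log_norm - scaled[y]
    return total / len(labels)


def fit_temperature(logit_rows, labels, candidates=None):
    """Choose the temperature that minimizes validation NLL (grid search)."""
    if candidates is None:
        candidates = [0.5 + 0.05 * i for i in range(91)]  # 0.5 to 5.0
    return min(candidates, key=lambda t: nll(logit_rows, labels, t))


def ensemble_disagrees(member_predictions, min_agreement=0.8):
    """True when the most common prediction lacks enough support."""
    top = max(set(member_predictions), key=member_predictions.count)
    return member_predictions.count(top) / len(member_predictions) < min_agreement
```

If a model assigns about 98% confidence to predictions that are right only 75% of the time on the validation set, the fitted temperature will be greater than 1 and will pull the reported confidence down toward the observed accuracy.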

Integrate systematic feedback loops for continuous improvement

Your human corrections must systematically improve agent performance, not just fix individual errors.

Structured feedback collection has four components: standardized interfaces that make reviewers provide reasoning alongside corrections, categorical feedback that enables pattern analysis across similar scenarios, automated integration pipelines that feed corrections into retraining workflows, and version control that tracks model behavior changes after feedback is incorporated.
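A structured feedback record along these lines might look like the Python sketch below. The `Feedback` fields and the `CATEGORIES` set are hypothetical examples. The point is that the record rejects corrections that lack reasoning or a known category, and that categories can then be counted to find recurring failure patterns.

```python
from collections import Counter
from dataclasses import dataclass

# Example categories only (assumption): define your own taxonomy.
CATEGORIES = {"wrong_amount", "policy_violation", "missing_context", "other"}


@dataclass(frozen=True)
class Feedback:
    decision_id: str
    model_version: str      # ties the correction to a specific model version
    category: str
    corrected_output: str
    reasoning: str

    def __post_init__(self):
        if self.category not in CATEGORIES:
            raise ValueError(f"unknown category: {self.category}")
        if not self.reasoning.strip():
            raise ValueError("reviewer reasoning is required")


def top_failure_categories(feedback, n=3):
    """Most frequent correction categories, for pattern analysis."""
    return Counter(f.category for f in feedback).most_common(n)
```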

Purpose-built platforms like Galileo's agent observability provide automated feedback collection with traceable lineage from human intervention to model improvement.

This systematic approach transforms human oversight from an operational cost into a continuous improvement mechanism, enabling you to demonstrate ROI through measurable reliability gains that reduce escalation rates while maintaining safety thresholds.

Building agent systems you can trust

Your board wants agent ROI metrics. Production deployments reveal substantial reliability gaps between laboratory performance and real-world behavior, which is why systematic oversight architecture is essential.

Here's how Galileo helps you with AI guardrails:

Automated quality guardrails in CI/CD: Galileo integrates directly into your development workflow, running comprehensive evals on every code change and blocking releases that fail quality thresholds

Multi-dimensional response evaluation: With Galileo's Luna-2 evaluation models, you can assess every output across dozens of quality dimensions—correctness, toxicity, bias, adherence—at 97% lower cost than traditional LLM-based evaluation approaches

Real-time runtime protection: Galileo's Agent Protect scans every prompt and response in production, blocking harmful outputs before they reach users while maintaining detailed compliance logs for audit requirements

Intelligent failure detection: Galileo's Insights Engine automatically clusters similar failures, surfaces root-cause patterns, and recommends fixes, reducing debugging time while building institutional knowledge

Human-in-the-loop optimization: Galileo's Continuous Learning via Human Feedback (CLHF) transforms expert reviews into reusable evaluators, accelerating iteration while maintaining quality standards

Discover how Galileo provides enterprise-grade AI guardrails with pre-built policies, real-time metrics, and ready-made integrations.

Frequently Asked Questions About Human-in-the-Loop Agent Oversight

What is human-in-the-loop (HITL) in AI agent systems?

Human-in-the-loop (HITL) integrates structured human intervention points throughout AI agent decision-making processes. HITL architectures pause autonomous execution at predetermined trigger points—when confidence falls below thresholds, risk levels exceed acceptable ranges, or regulatory requirements mandate review. Effective HITL systems typically target 10-15% escalation rates, meaning 85-90% of decisions execute autonomously while critical cases receive human oversight.

How do I determine the right confidence threshold for my agent system?

Confidence thresholds commonly range from 80-90%, but your optimal threshold depends on risk tolerance, domain requirements, and operational capacity. Financial services typically use 90-95% thresholds due to regulatory scrutiny. Customer service might accept 80-85% for routine inquiries. Healthcare applications often require 95%+ given patient safety implications. Start conservatively at 90-95%, then monitor escalation metrics to adjust toward 10-15% total escalation.

What escalation rate should I target for production HITL systems?

Your production systems should typically target 10-15% escalation rate for sustainable human review operations. This maintains manageable reviewer workload with adequate protection. Rates around 20% remain operational but indicate higher manual intervention than optimal. When rates reach 60%, excessive escalation creates bottlenecks requiring system recalibration. Monitor this alongside human override rate to identify threshold optimization opportunities.

Should I implement synchronous or asynchronous human oversight?

Choose synchronous oversight when decisions are high-stakes and irreversible; use asynchronous audit for lower-risk scenarios where delayed review is acceptable. Synchronous patterns provide maximum control but introduce 0.5-2.0 second latency per decision. Asynchronous audit maintains near-zero latency but creates delayed error detection. Most production systems implement hybrid approaches using confidence-based routing.

How does Galileo help implement human-in-the-loop oversight?

Galileo provides comprehensive infrastructure for production HITL oversight. The Insights Engine automatically identifies agent failure patterns and incorporates human feedback through adaptive learning. Galileo Protect implements real-time guardrails for prompt injection, toxic language, PII leakage, and hallucination detection. The platform's confidence-based escalation routing automatically directs uncertain decisions to human review while maintaining comprehensive audit trails for regulatory compliance.
