Feb 2, 2026

What Is RAGChecker? A Fine-Grained Framework for Diagnosing RAG Systems

Your production RAG system generates confidently wrong answers while logs show successful completions. Users escalate tickets about incorrect information. Your team spends hours debugging, only to discover the problem wasn't retrieval quality: your generator was hallucinating despite having the right context.

Traditional metrics like BLEU and ROUGE showed stable performance throughout. You need diagnostic tools that actually pinpoint where failures occur.

You'll understand how its fine-grained metrics differ from aggregate scoring approaches and when claim-level diagnostics justify the computational investment. You'll also learn how to integrate RAGChecker into your MLOps workflows for systematic quality improvement.

TLDR:

RAGChecker uses claim-level entailment checking to attribute RAG failures to specific components

Provides separate diagnostic metrics for retrieval quality versus generation faithfulness

Requires AWS Bedrock Llama3 70B and custom MLOps integration

NeurIPS 2024 validated with 0.62-0.75 Kendall's tau correlation to human judgments

Best suited for offline diagnostic analysis, not real-time production monitoring

Complements observability platforms rather than replacing them

What Is RAGChecker?

RAGChecker is a peer-reviewed evaluation framework that diagnoses exactly where your RAG pipeline breaks, whether in retrieval, generation, or both. The framework uses claim-level analysis to isolate component failures.

Traditional RAG evaluation treats your system as a black box: input goes in, output comes out, and you're left guessing where failures occur. RAGChecker breaks this pattern through fine-grained evaluation that pinpoints specific failure points.

Researchers from Amazon AWS AI, Shanghai Jiao Tong University, and Westlake University published the AtomicClaimFactCheck framework at NeurIPS 2024, introducing a methodology that decomposes responses into atomic claims and performs four-directional comparisons among generated responses, ground truth answers, retrieved documents, and the original question.

You get precise error localization at the statement level rather than scoring entire responses holistically. While traditional metrics assign a single score to your entire output, RAGChecker identifies which specific claims fail and why, revealing exactly which facts are unsupported, which retrievals are irrelevant, and which generation patterns cause hallucinations.

Why Traditional RAG Evaluation Fails

BLEU and ROUGE metrics fail because they reward verbatim copying without verifying accuracy. Research shows BLEU is "insensitive to retrieval quality and can be manipulated by verbatim copying." Your system could achieve high BLEU scores by concatenating retrieved passages, even when those passages are outdated or irrelevant.

BERTScore represents an improvement through contextual embeddings, but still cannot detect hallucinations. Without component-level attribution, you cannot determine whether failures stem from retrieval quality, model choice, or generation parameters. Modern observability platforms address this gap through multi-dimensional metrics.

Traditional metrics provide aggregate scores that obscure where failures actually occur in multi-stage pipelines. Your retrieval module might fail through poor relevance while your generation module hallucinates, but single aggregate scores cannot isolate which component needs fixing.

How RAGChecker Works Under the Hood

Picture your RAG system as a crime scene. RAGChecker plays forensic investigator, collecting evidence (your query, documents, response), extracting claims from each piece, then triangulating what failed. The framework addresses both retrieval and generation components independently through separate diagnostic metrics.

The diagnostic approach provides two distinct categories of metrics:

Retrieval metrics measure whether your system fetches relevant documents and covers necessary claims

Generation metrics assess faithfulness to retrieved context, hallucination rates, and noise sensitivity

Separate diagnostics reveal whether poor performance stems from missing documents, irrelevant retrievals, unfaithful generation, or combinations of these failures

The diagnostic pipeline begins with input collection. The entailment checking mechanism extracts claims and computes relationships between them. Each generated claim pairs systematically with claims from ground truth and retrieved documents.

The LLM scores entailment across three dimensions: faithfulness (whether retrieved context supports the claim), correctness (verification against ground truth), and retrieval quality (whether retrieved documents support ground truth claims). This triangulated approach isolates failures to specific pipeline stages rather than producing a single opaque score.

How Do You Measure Retrieval Quality with RAGChecker?

Your retrieval component determines whether the right information reaches your generator. RAGChecker provides specific metrics to diagnose whether your system fetches relevant documents and covers the claims needed for accurate responses.

Understanding Retrieval Metrics

Your search returns 50 documents but only 3 answer the question. That's a retrieval precision problem RAGChecker catches. The framework provides four retrieval diagnostics:

Metric | What It Measures | Strong Performance | Critical Threshold |

Context Precision | Proportion of retrieved chunks that are relevant | >80% | <70% needs attention |

Context Recall | Proportion of ground truth claims supported by retrieved context | >80% | <60% signals gaps |

Claim Recall | Fraction of ground truth claims your retrieval surfaces | 60-70%+ | <50% indicates misses |

Chunk Attribution | How well retrieved chunks support generated claims | Varies by use case | Monitor for noise |

When Context Precision drops below 70%, you're retrieving noise. When Context Recall stays low, you're missing relevant documents. When Claim Recall crashes despite decent Context metrics, your knowledge base has gaps.

You can use retrieval metrics alongside RAGChecker for continuous monitoring.

Reading the Diagnostic Patterns

When system performance drops 15% in production, RAGChecker reveals the pattern: Context Precision remains at 85%, but Claim Recall dropped from 68% to 52%. Your retrieval quality is fine for documents fetched, but coverage gaps mean essential information isn't being retrieved.

Targeted diagnosis follows directly from metric patterns. Low Context Precision indicates you need to tune ranking algorithms or adjust similarity thresholds to filter noise. Low Context Recall points to expanding search scope, adjusting top-k parameters, or improving query transformation.

Low Claim Recall with acceptable Context metrics suggests knowledge base gaps. These diagnostic patterns enable you to prioritize engineering effort on the specific component causing failures rather than undertaking expensive system-wide rewrites.

How Do You Measure Generation Quality with RAGChecker?

Even with perfect retrieval, your generator can still fail. RAGChecker provides dedicated metrics to assess whether your model faithfully uses retrieved context or invents information on its own.

Explore Generation Metrics

Watch what happens when your model gets perfect context but still hallucinates. Faithfulness measures the proportion of generated claims directly supported by retrieved context, while hallucination detection identifies claims your model invented. These work as opposing forces: faithfulness tracks grounding; hallucination catches fabrication. Production systems need real-time tracking beyond batch evals.

Noise sensitivity measures degradation when irrelevant context pollutes your retrieval set

Context utilization assesses how effectively your generation component uses available retrieved information, revealing whether low performance stems from missing context or underutilization

Self-knowledge evaluates whether your model appropriately relies on parametric knowledge when retrieved context is insufficient

Together, these metrics create a comprehensive view of generation behavior across different failure modes.

Interpret Generation Failures

Your RAG system shows degraded overall accuracy compared to the previous month. RAGChecker reveals high Claim Recall, meaning retrieval provides necessary information, but faithfulness collapsed. You've isolated a pure generation failure: retrieval remains solid, but your generator stopped grounding responses in context.

Remediation strategy shifts completely. Instead of retuning retrieval algorithms or expanding your knowledge base, you focus on generation: adjusting system prompts to emphasize context adherence, implementing eval guardrails that block low-context-adherence responses, or switching to models with better instruction-following capabilities. Systematic experiment tracking enables you to validate improvements before deploying changes to production.

Balance Faithfulness Against Correctness

High faithfulness scores can mask a critical vulnerability in your RAG system. When your generator faithfully reproduces information from retrieved documents, it also faithfully reproduces errors present in those documents. RAGChecker tracks this tension through separate correctness metrics that compare generated claims against ground truth, independent of retrieval quality.

Monitor the gap between faithfulness and correctness scores to detect this failure mode. A system showing 90% faithfulness but only 70% correctness indicates your retrieval pipeline surfaces flawed documents that your generator dutifully follows. This pattern requires different intervention than hallucination problems: instead of constraining your generator, you need to improve document quality, implement source verification, or add retrieval-stage filtering.

Understanding this tradeoff prevents you from over-optimizing faithfulness at the expense of actual accuracy, ensuring your RAG system delivers correct answers rather than faithfully wrong ones.

How to Use RAGChecker Across Different Roles

You and your stakeholders need different views into RAG system performance. Role-based metric reporting bridges the gap between executive dashboards requiring aggregated KPIs and engineering teams needing granular diagnostics for root cause analysis. Understanding these different perspectives helps you communicate RAG quality effectively across your organization.

RAGChecker serves both needs through layered metric reporting that connects component-level failures to business-level performance indicators. Your leadership team tracks high-level SLAs while your engineering teams drill into retrieval precision, claim recall, and faithfulness scores. This dual-layer approach ensures technical improvements translate into metrics that matter for quarterly reviews and resource allocation decisions.

Executive View: Aggregated Metrics for Dashboards and SLAs

Your CTO asks for a single number to track RAG quality. Your engineers know single metrics mask component failures. RAGChecker bridges this gap by providing overall precision, recall, and F1 scores that aggregate component-level metrics into executive-ready performance indicators. Precision measures the proportion of generated claims that are correct according to ground truth. Recall measures coverage. F1 provides balanced assessment accounting for both accuracy and completeness.

These aggregate metrics support quarterly business reviews and SLA definition through production analytics. You can establish performance thresholds aligned with business requirements: maintain overall precision above 90% for customer-facing applications, ensure recall exceeds 75% for compliance documentation systems, or target F1 scores above 85% for internal knowledge management tools.

Engineering View: Diagnostic Slices for Root-Cause Analysis

Your engineering team needs fine-grained component-level metrics that drive precise diagnosis. When production issues arise, drill into retrieval-specific diagnostics: Context Precision revealing noise in retrieved documents, Context Recall showing whether relevant documents were retrieved, Claim Recall identifying which ground truth answer claims are supported by retrieved context. Component-level debugging with trace analysis enables systematic root cause identification.

Generation-focused diagnostics work similarly. Consider what happens when users report nonsensical answers: check faithfulness (62%), hallucination rate (increased from baseline), and noise sensitivity (stable). Low faithfulness with high hallucination directly indicates the generator stopped grounding in context. Adjust system prompts, validate faithfulness recovery in staging, and deploy with confidence that the specific failure mode is addressed.

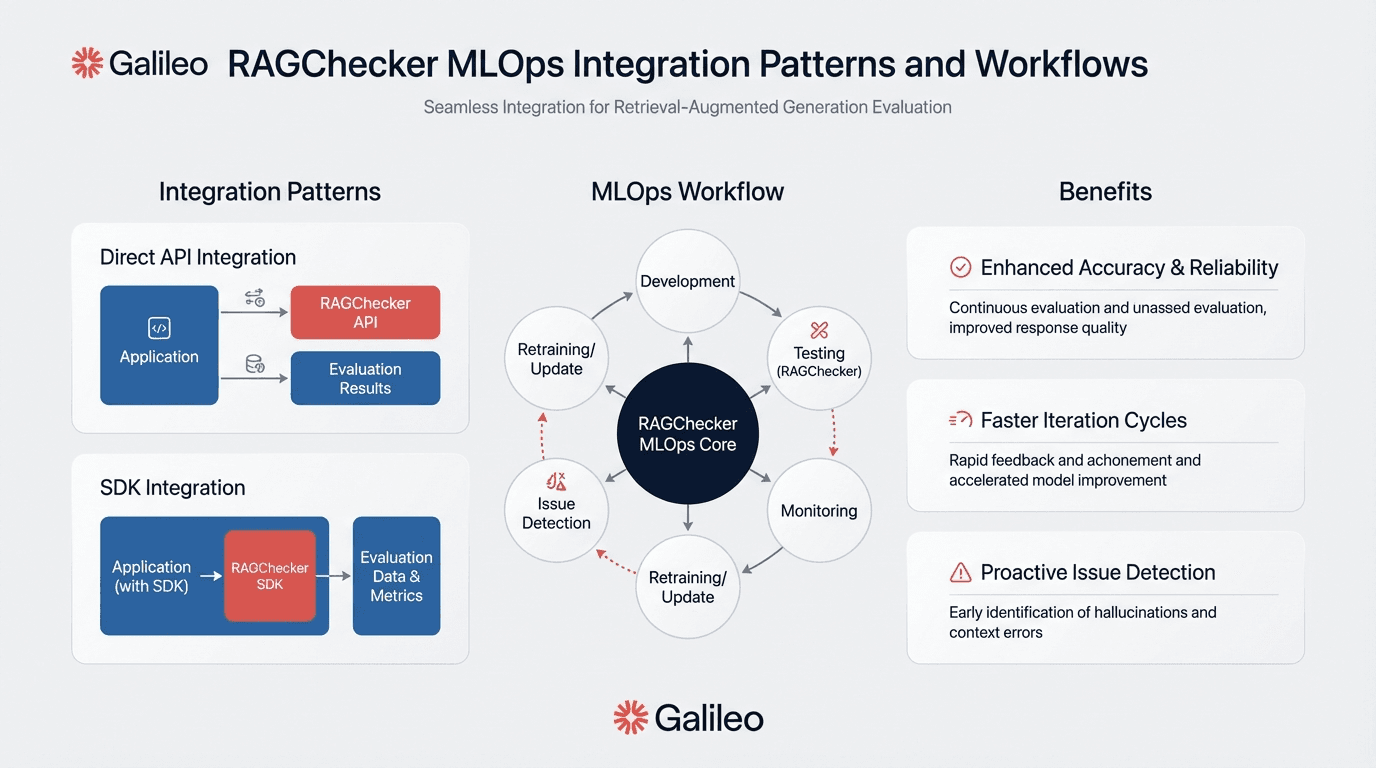

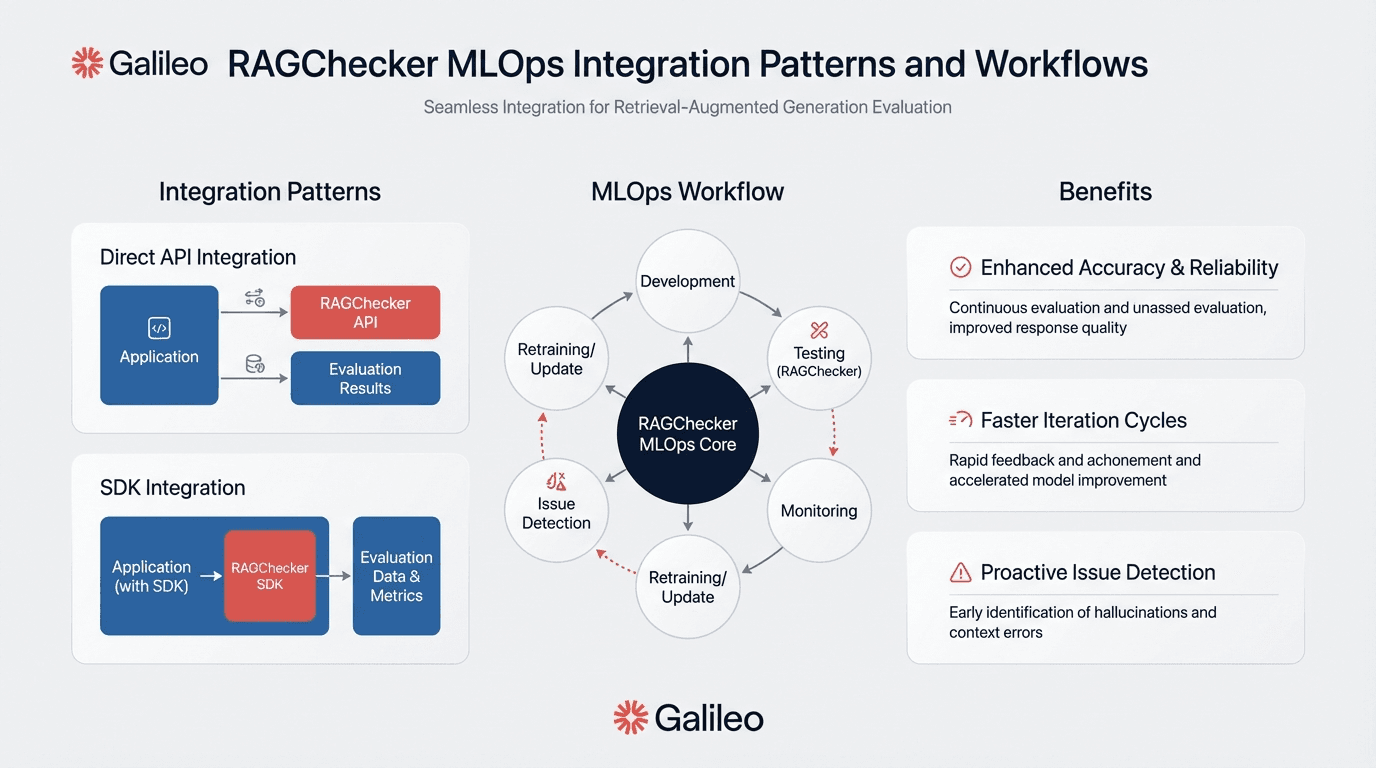

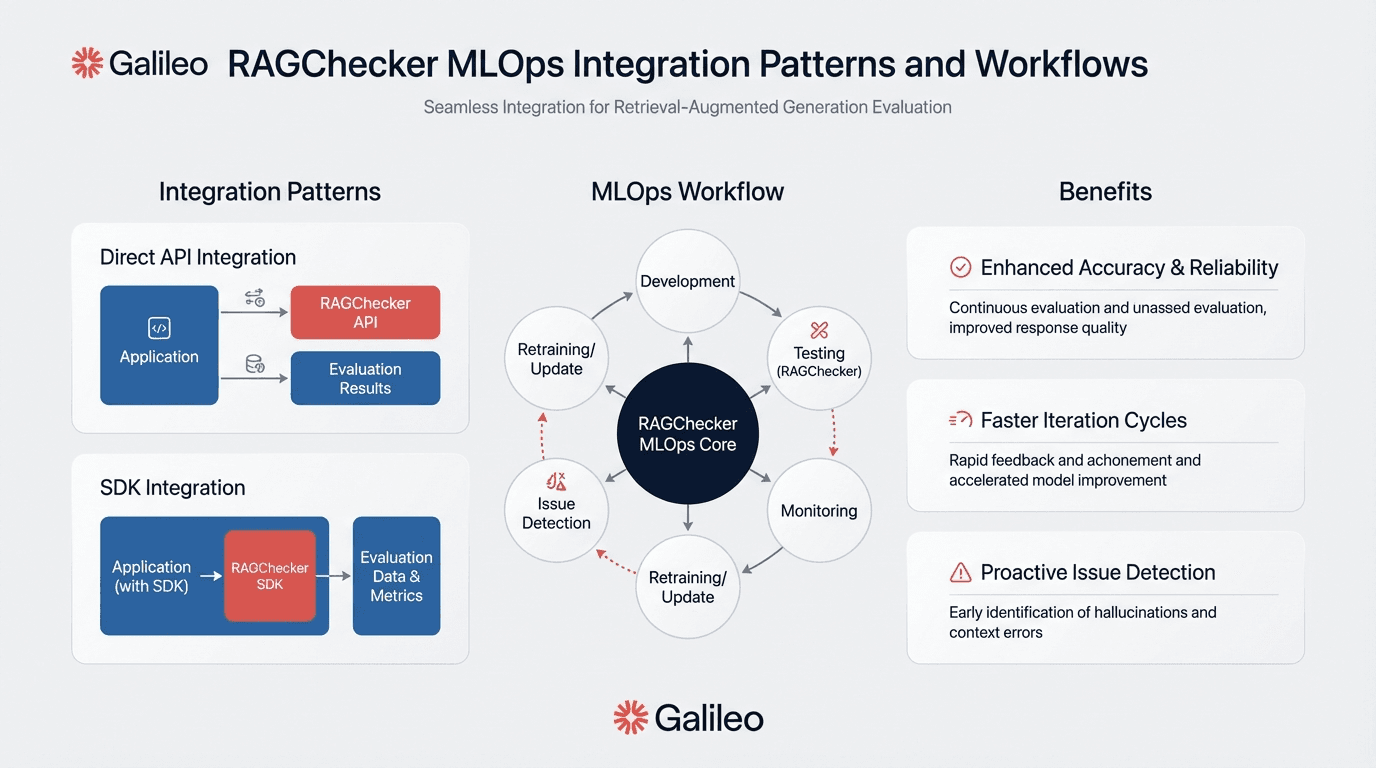

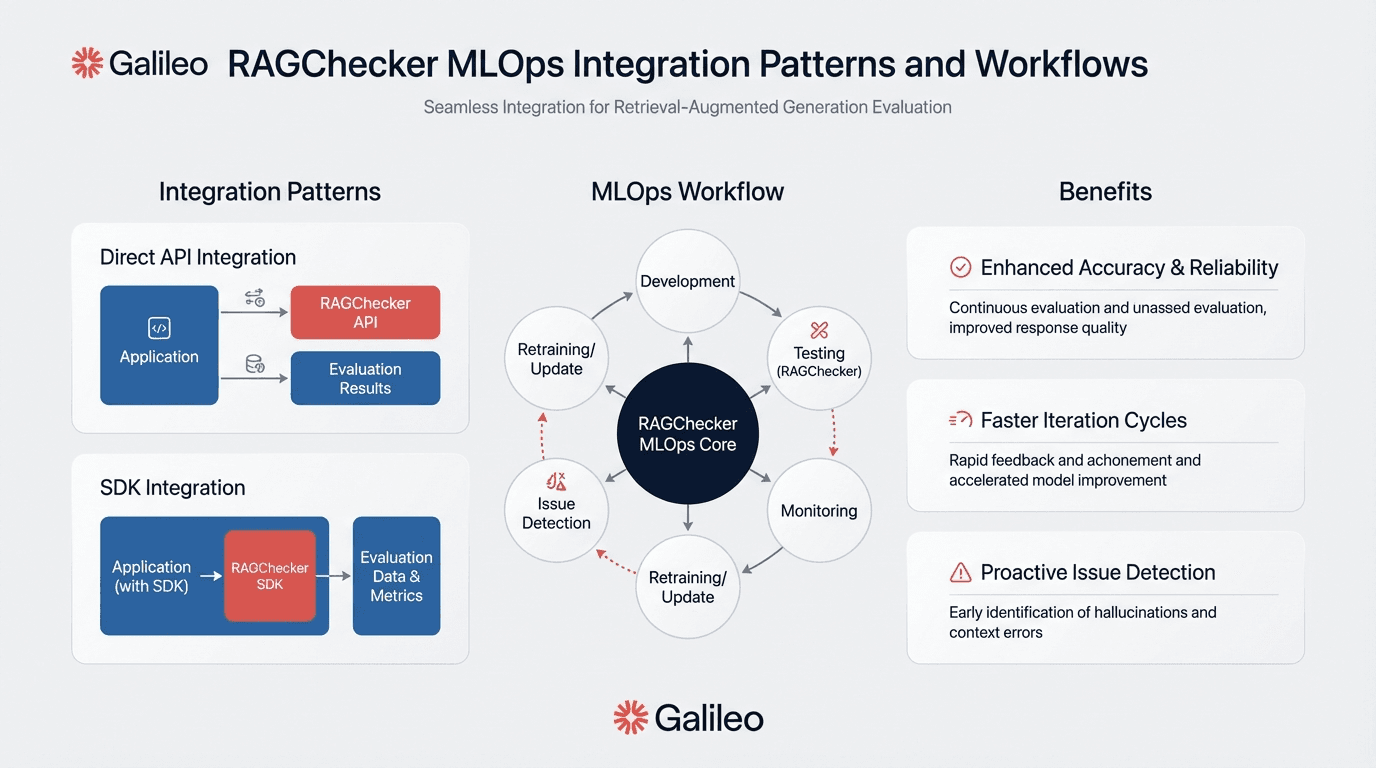

How Do You Integrate RAGChecker into Enterprise MLOps?

Moving from diagnostic insights to operational workflows requires thoughtful integration planning. For your team, RAGChecker's value multiplies when embedded into existing MLOps infrastructure, transforming one-time evals into continuous quality monitoring. However, the framework demands custom engineering work since no turnkey solutions currently exist.

Understanding the integration patterns, governance implications, and operational limitations helps you assess whether the diagnostic depth justifies the implementation investment for your specific use case. The sections below outline practical approaches for CI/CD integration, compliance alignment, and managing known framework limitations.

Configure Integration Patterns

Production monitoring follows a batch eval pattern rather than real-time assessment. Production logs flow through ETL processing, converting to RAGChecker input format, executing evals, and storing metrics in your observability platform. Industry guidance recommends implementing statistical sampling, evaluating 1-10% of traffic rather than 100% to control costs. Sampling strategies enable cost-effective continuous monitoring. Schedule hourly, daily, or weekly batch runs based on your risk tolerance and budget constraints.

Budget 2-4 weeks of senior MLOps engineering time per platform for custom CI/CD integration development, as no turnkey solutions are available. This investment pays dividends through systematic quality tracking, but you should weigh these engineering costs against your diagnostic requirements and existing tooling capabilities.

Apply Governance and Compliance Controls

RAGChecker provides claim-level traceability relevant for audit requirements; you can trace individual claims back to source evidence. However, the framework doesn't generate compliance documentation or provide governance controls required by comprehensive GRC platforms. NIST AI RMF guidance states that trustworthy AI systems require documentation of "AI system design, development processes, data sources, model training, testing, and evaluation results."

RAGChecker addresses the evaluation component but requires integration with broader infrastructure for complete compliance. You can use governance features to complement RAGChecker's evaluation capabilities.

Manage Computational Costs at Scale

RAGChecker's multi-stage LLM pipeline creates significant cost considerations for enterprise deployments. Each eval requires multiple inference calls: claim extraction from responses, claim extraction from retrieved documents, and entailment checking across all claim pairs. For a single query evals, expect 5-10 LLM calls depending on response complexity and document count.

Control costs through strategic sampling and tiered evaluation approaches. Implement lightweight checks on 100% of traffic using simpler metrics, then route flagged responses to full RAGChecker analysis. Batch similar queries together to maximize throughput efficiency.

Cache claim extractions for repeated document chunks to avoid redundant processing. Set cost alerts tied to evaluation volume thresholds, and establish fallback procedures when budgets approach limits. These practices enable continuous diagnostic coverage without unpredictable infrastructure costs undermining your MLOps budget.

RAGChecker Limitations and Considerations

RAGChecker's diagnostic power comes with important constraints you should understand before committing to implementation:

Err or propagation: LLM-based claim extraction introduces inherent uncertainty throughout the evaluation pipeline, potentially missing hallucinations or unsupported synthesis outside detected claims

Flat claim structure: For medical diagnosis or legal analysis, the framework cannot capture causal chains and conditional dependencies that matter for complex reasoning

Reproducibility issues: GitHub Issue #24 documents nondeterministic behavior where identical evaluations produce different metric values, compromising CI/CD pipeline reliability

Missing data handling: Incomplete inputs propagate through the pipeline without clear error messages, producing unreliable downstream metrics

Computational overhead: The multi-stage LLM pipeline creates significant cost and latency constraints for large-scale continuous evaluation

For resource-constrained environments, you should carefully evaluate whether RAGChecker's claim-level diagnostic granularity justifies the computational investment compared to simpler evaluation approaches.

Making RAG Systems Reliable Through Systematic Diagnostics

RAG system failures don't announce themselves clearly. Traditional metrics show stability while your users receive confidently wrong answers. The shift from aggregate scoring to claim-level diagnostics allows precise attribution: you know whether to fix retrieval algorithms, expand knowledge bases, tune generation prompts, or filter noisy context.

RAGChecker provides the diagnostic framework through peer-reviewed methodology and open-source implementation. Claim-level granularity points you toward precise improvements rather than expensive system-wide redesigns.

RAGChecker handles offline diagnostic analysis. Production systems need additional capabilities for real-time monitoring, runtime protection, and operational metrics.

Galileo provides the production infrastructure RAGChecker lacks:

Real-time monitoring: Tracks quality metrics across 100% of production traffic without batch limitations

Hierarchical workflow tracing: Visualizes multi-step agent workflows to pinpoint exact failure points

Runtime protection: Blocks problematic responses before they reach users

Experiment tracking: Systematically validates improvements with A/B testing infrastructure

Production analytics: Surface performance trends without custom ETL development

Native platform integrations: Enable deployment in hours rather than weeks of custom engineering

Galileo’s suite of analytics tools offers visibility and metrics for measuring, evaluating, and optimizing RAG applications, including metrics for chunk attribution and context adherence. Explore how Galileo can improve your RAG implementation.

FAQs

What is claim-level evaluation in RAGChecker?

Claim-level evaluation decomposes responses into atomic verifiable statements called claims, then checks each claim individually against retrieved context and ground truth. You get precise error localization at the statement level rather than scoring entire responses, revealing exactly which facts are unsupported or incorrect.

How do you isolate whether RAG failures stem from retrieval versus generation?

RAGChecker performs triangulated evaluation comparing three pairs independently: retrieved context against ground truth (isolating retrieval quality), generated response against retrieved context (isolating faithfulness), and generated response against ground truth (overall correctness). Low retriever recall with high faithfulness indicates retrieval failure, while high recall with low faithfulness signals generation problems.

How do you integrate RAGChecker into CI/CD pipelines?

The documented approach uses GitHub Actions where code push triggers automated evaluation against test datasets, producing metrics that drive pass/fail gates. For cloud platforms like AWS SageMaker or Google Vertex AI, you'll need to develop custom pipeline steps executing RAGChecker as a containerized processing job. Custom integration typically requires 2-4 weeks of senior MLOps engineering time per platform.

When should you use fine-grained evaluation versus coarse metrics like BLEU?

Use fine-grained evaluation when you need to diagnose specific failure modes for targeted improvements, particularly when aggregate metrics show acceptable performance but users report quality issues. Use coarse metrics for rapid prototyping or when computational costs outweigh diagnostic benefits. RAGChecker's multi-stage LLM pipeline requires significant inference budget compared to simple lexical matching.

How does Galileo help with RAG system evaluation and monitoring?

Galileo offers operational monitoring infrastructure where RAGChecker provides diagnostic analysis. For production systems, Galileo includes real-time metrics, hierarchical workflow tracing, and runtime protection. RAGChecker excels at offline claim-level diagnostics to understand specific failure modes. You can use both: RAGChecker for root cause analysis during development, Galileo for production monitoring.

Your production RAG system generates confidently wrong answers while logs show successful completions. Users escalate tickets about incorrect information. Your team spends hours debugging, only to discover the problem wasn't retrieval quality: your generator was hallucinating despite having the right context.

Traditional metrics like BLEU and ROUGE showed stable performance throughout. You need diagnostic tools that actually pinpoint where failures occur.

You'll understand how its fine-grained metrics differ from aggregate scoring approaches and when claim-level diagnostics justify the computational investment. You'll also learn how to integrate RAGChecker into your MLOps workflows for systematic quality improvement.

TLDR:

RAGChecker uses claim-level entailment checking to attribute RAG failures to specific components

Provides separate diagnostic metrics for retrieval quality versus generation faithfulness

Requires AWS Bedrock Llama3 70B and custom MLOps integration

NeurIPS 2024 validated with 0.62-0.75 Kendall's tau correlation to human judgments

Best suited for offline diagnostic analysis, not real-time production monitoring

Complements observability platforms rather than replacing them

What Is RAGChecker?

RAGChecker is a peer-reviewed evaluation framework that diagnoses exactly where your RAG pipeline breaks, whether in retrieval, generation, or both. The framework uses claim-level analysis to isolate component failures.

Traditional RAG evaluation treats your system as a black box: input goes in, output comes out, and you're left guessing where failures occur. RAGChecker breaks this pattern through fine-grained evaluation that pinpoints specific failure points.

Researchers from Amazon AWS AI, Shanghai Jiao Tong University, and Westlake University published the AtomicClaimFactCheck framework at NeurIPS 2024, introducing a methodology that decomposes responses into atomic claims and performs four-directional comparisons among generated responses, ground truth answers, retrieved documents, and the original question.

You get precise error localization at the statement level rather than scoring entire responses holistically. While traditional metrics assign a single score to your entire output, RAGChecker identifies which specific claims fail and why, revealing exactly which facts are unsupported, which retrievals are irrelevant, and which generation patterns cause hallucinations.

Why Traditional RAG Evaluation Fails

BLEU and ROUGE metrics fail because they reward verbatim copying without verifying accuracy. Research shows BLEU is "insensitive to retrieval quality and can be manipulated by verbatim copying." Your system could achieve high BLEU scores by concatenating retrieved passages, even when those passages are outdated or irrelevant.

BERTScore represents an improvement through contextual embeddings, but still cannot detect hallucinations. Without component-level attribution, you cannot determine whether failures stem from retrieval quality, model choice, or generation parameters. Modern observability platforms address this gap through multi-dimensional metrics.

Traditional metrics provide aggregate scores that obscure where failures actually occur in multi-stage pipelines. Your retrieval module might fail through poor relevance while your generation module hallucinates, but single aggregate scores cannot isolate which component needs fixing.

How RAGChecker Works Under the Hood

Picture your RAG system as a crime scene. RAGChecker plays forensic investigator, collecting evidence (your query, documents, response), extracting claims from each piece, then triangulating what failed. The framework addresses both retrieval and generation components independently through separate diagnostic metrics.

The diagnostic approach provides two distinct categories of metrics:

Retrieval metrics measure whether your system fetches relevant documents and covers necessary claims

Generation metrics assess faithfulness to retrieved context, hallucination rates, and noise sensitivity

Separate diagnostics reveal whether poor performance stems from missing documents, irrelevant retrievals, unfaithful generation, or combinations of these failures

The diagnostic pipeline begins with input collection. The entailment checking mechanism extracts claims and computes relationships between them. Each generated claim pairs systematically with claims from ground truth and retrieved documents.

The LLM scores entailment across three dimensions: faithfulness (whether retrieved context supports the claim), correctness (verification against ground truth), and retrieval quality (whether retrieved documents support ground truth claims). This triangulated approach isolates failures to specific pipeline stages rather than producing a single opaque score.

How Do You Measure Retrieval Quality with RAGChecker?

Your retrieval component determines whether the right information reaches your generator. RAGChecker provides specific metrics to diagnose whether your system fetches relevant documents and covers the claims needed for accurate responses.

Understanding Retrieval Metrics

Your search returns 50 documents but only 3 answer the question. That's a retrieval precision problem RAGChecker catches. The framework provides four retrieval diagnostics:

Metric | What It Measures | Strong Performance | Critical Threshold |

Context Precision | Proportion of retrieved chunks that are relevant | >80% | <70% needs attention |

Context Recall | Proportion of ground truth claims supported by retrieved context | >80% | <60% signals gaps |

Claim Recall | Fraction of ground truth claims your retrieval surfaces | 60-70%+ | <50% indicates misses |

Chunk Attribution | How well retrieved chunks support generated claims | Varies by use case | Monitor for noise |

When Context Precision drops below 70%, you're retrieving noise. When Context Recall stays low, you're missing relevant documents. When Claim Recall crashes despite decent Context metrics, your knowledge base has gaps.

You can use retrieval metrics alongside RAGChecker for continuous monitoring.

Reading the Diagnostic Patterns

When system performance drops 15% in production, RAGChecker reveals the pattern: Context Precision remains at 85%, but Claim Recall dropped from 68% to 52%. Your retrieval quality is fine for documents fetched, but coverage gaps mean essential information isn't being retrieved.

Targeted diagnosis follows directly from metric patterns. Low Context Precision indicates you need to tune ranking algorithms or adjust similarity thresholds to filter noise. Low Context Recall points to expanding search scope, adjusting top-k parameters, or improving query transformation.

Low Claim Recall with acceptable Context metrics suggests knowledge base gaps. These diagnostic patterns enable you to prioritize engineering effort on the specific component causing failures rather than undertaking expensive system-wide rewrites.

How Do You Measure Generation Quality with RAGChecker?

Even with perfect retrieval, your generator can still fail. RAGChecker provides dedicated metrics to assess whether your model faithfully uses retrieved context or invents information on its own.

Explore Generation Metrics

Watch what happens when your model gets perfect context but still hallucinates. Faithfulness measures the proportion of generated claims directly supported by retrieved context, while hallucination detection identifies claims your model invented. These work as opposing forces: faithfulness tracks grounding; hallucination catches fabrication. Production systems need real-time tracking beyond batch evals.

Noise sensitivity measures degradation when irrelevant context pollutes your retrieval set

Context utilization assesses how effectively your generation component uses available retrieved information, revealing whether low performance stems from missing context or underutilization

Self-knowledge evaluates whether your model appropriately relies on parametric knowledge when retrieved context is insufficient

Together, these metrics create a comprehensive view of generation behavior across different failure modes.

Interpret Generation Failures

Your RAG system shows degraded overall accuracy compared to the previous month. RAGChecker reveals high Claim Recall, meaning retrieval provides necessary information, but faithfulness collapsed. You've isolated a pure generation failure: retrieval remains solid, but your generator stopped grounding responses in context.

Remediation strategy shifts completely. Instead of retuning retrieval algorithms or expanding your knowledge base, you focus on generation: adjusting system prompts to emphasize context adherence, implementing eval guardrails that block low-context-adherence responses, or switching to models with better instruction-following capabilities. Systematic experiment tracking enables you to validate improvements before deploying changes to production.

Balance Faithfulness Against Correctness

High faithfulness scores can mask a critical vulnerability in your RAG system. When your generator faithfully reproduces information from retrieved documents, it also faithfully reproduces errors present in those documents. RAGChecker tracks this tension through separate correctness metrics that compare generated claims against ground truth, independent of retrieval quality.

Monitor the gap between faithfulness and correctness scores to detect this failure mode. A system showing 90% faithfulness but only 70% correctness indicates your retrieval pipeline surfaces flawed documents that your generator dutifully follows. This pattern requires different intervention than hallucination problems: instead of constraining your generator, you need to improve document quality, implement source verification, or add retrieval-stage filtering.

Understanding this tradeoff prevents you from over-optimizing faithfulness at the expense of actual accuracy, ensuring your RAG system delivers correct answers rather than faithfully wrong ones.

How to Use RAGChecker Across Different Roles

You and your stakeholders need different views into RAG system performance. Role-based metric reporting bridges the gap between executive dashboards requiring aggregated KPIs and engineering teams needing granular diagnostics for root cause analysis. Understanding these different perspectives helps you communicate RAG quality effectively across your organization.

RAGChecker serves both needs through layered metric reporting that connects component-level failures to business-level performance indicators. Your leadership team tracks high-level SLAs while your engineering teams drill into retrieval precision, claim recall, and faithfulness scores. This dual-layer approach ensures technical improvements translate into metrics that matter for quarterly reviews and resource allocation decisions.

Executive View: Aggregated Metrics for Dashboards and SLAs

Your CTO asks for a single number to track RAG quality. Your engineers know single metrics mask component failures. RAGChecker bridges this gap by providing overall precision, recall, and F1 scores that aggregate component-level metrics into executive-ready performance indicators. Precision measures the proportion of generated claims that are correct according to ground truth. Recall measures coverage. F1 provides balanced assessment accounting for both accuracy and completeness.

These aggregate metrics support quarterly business reviews and SLA definition through production analytics. You can establish performance thresholds aligned with business requirements: maintain overall precision above 90% for customer-facing applications, ensure recall exceeds 75% for compliance documentation systems, or target F1 scores above 85% for internal knowledge management tools.

Engineering View: Diagnostic Slices for Root-Cause Analysis

Your engineering team needs fine-grained component-level metrics that drive precise diagnosis. When production issues arise, drill into retrieval-specific diagnostics: Context Precision revealing noise in retrieved documents, Context Recall showing whether relevant documents were retrieved, Claim Recall identifying which ground truth answer claims are supported by retrieved context. Component-level debugging with trace analysis enables systematic root cause identification.

Generation-focused diagnostics work similarly. Consider what happens when users report nonsensical answers: check faithfulness (62%), hallucination rate (increased from baseline), and noise sensitivity (stable). Low faithfulness with high hallucination directly indicates the generator stopped grounding in context. Adjust system prompts, validate faithfulness recovery in staging, and deploy with confidence that the specific failure mode is addressed.

How Do You Integrate RAGChecker into Enterprise MLOps?

Moving from diagnostic insights to operational workflows requires thoughtful integration planning. For your team, RAGChecker's value multiplies when embedded into existing MLOps infrastructure, transforming one-time evals into continuous quality monitoring. However, the framework demands custom engineering work since no turnkey solutions currently exist.

Understanding the integration patterns, governance implications, and operational limitations helps you assess whether the diagnostic depth justifies the implementation investment for your specific use case. The sections below outline practical approaches for CI/CD integration, compliance alignment, and managing known framework limitations.

Configure Integration Patterns

Production monitoring follows a batch eval pattern rather than real-time assessment. Production logs flow through ETL processing, converting to RAGChecker input format, executing evals, and storing metrics in your observability platform. Industry guidance recommends implementing statistical sampling, evaluating 1-10% of traffic rather than 100% to control costs. Sampling strategies enable cost-effective continuous monitoring. Schedule hourly, daily, or weekly batch runs based on your risk tolerance and budget constraints.

Budget 2-4 weeks of senior MLOps engineering time per platform for custom CI/CD integration development, as no turnkey solutions are available. This investment pays dividends through systematic quality tracking, but you should weigh these engineering costs against your diagnostic requirements and existing tooling capabilities.

Apply Governance and Compliance Controls

RAGChecker provides claim-level traceability relevant for audit requirements; you can trace individual claims back to source evidence. However, the framework doesn't generate compliance documentation or provide governance controls required by comprehensive GRC platforms. NIST AI RMF guidance states that trustworthy AI systems require documentation of "AI system design, development processes, data sources, model training, testing, and evaluation results."

RAGChecker addresses the evaluation component but requires integration with broader infrastructure for complete compliance. You can use governance features to complement RAGChecker's evaluation capabilities.

Manage Computational Costs at Scale

RAGChecker's multi-stage LLM pipeline creates significant cost considerations for enterprise deployments. Each eval requires multiple inference calls: claim extraction from responses, claim extraction from retrieved documents, and entailment checking across all claim pairs. For a single query evals, expect 5-10 LLM calls depending on response complexity and document count.

Control costs through strategic sampling and tiered evaluation approaches. Implement lightweight checks on 100% of traffic using simpler metrics, then route flagged responses to full RAGChecker analysis. Batch similar queries together to maximize throughput efficiency.

Cache claim extractions for repeated document chunks to avoid redundant processing. Set cost alerts tied to evaluation volume thresholds, and establish fallback procedures when budgets approach limits. These practices enable continuous diagnostic coverage without unpredictable infrastructure costs undermining your MLOps budget.

RAGChecker Limitations and Considerations

RAGChecker's diagnostic power comes with important constraints you should understand before committing to implementation:

Err or propagation: LLM-based claim extraction introduces inherent uncertainty throughout the evaluation pipeline, potentially missing hallucinations or unsupported synthesis outside detected claims

Flat claim structure: For medical diagnosis or legal analysis, the framework cannot capture causal chains and conditional dependencies that matter for complex reasoning

Reproducibility issues: GitHub Issue #24 documents nondeterministic behavior where identical evaluations produce different metric values, compromising CI/CD pipeline reliability

Missing data handling: Incomplete inputs propagate through the pipeline without clear error messages, producing unreliable downstream metrics

Computational overhead: The multi-stage LLM pipeline creates significant cost and latency constraints for large-scale continuous evaluation

For resource-constrained environments, you should carefully evaluate whether RAGChecker's claim-level diagnostic granularity justifies the computational investment compared to simpler evaluation approaches.

Making RAG Systems Reliable Through Systematic Diagnostics

RAG system failures don't announce themselves clearly. Traditional metrics show stability while your users receive confidently wrong answers. The shift from aggregate scoring to claim-level diagnostics allows precise attribution: you know whether to fix retrieval algorithms, expand knowledge bases, tune generation prompts, or filter noisy context.

RAGChecker provides the diagnostic framework through peer-reviewed methodology and open-source implementation. Claim-level granularity points you toward precise improvements rather than expensive system-wide redesigns.

RAGChecker handles offline diagnostic analysis. Production systems need additional capabilities for real-time monitoring, runtime protection, and operational metrics.

Galileo provides the production infrastructure RAGChecker lacks:

Real-time monitoring: Tracks quality metrics across 100% of production traffic without batch limitations

Hierarchical workflow tracing: Visualizes multi-step agent workflows to pinpoint exact failure points

Runtime protection: Blocks problematic responses before they reach users

Experiment tracking: Systematically validates improvements with A/B testing infrastructure

Production analytics: Surface performance trends without custom ETL development

Native platform integrations: Enable deployment in hours rather than weeks of custom engineering

Galileo’s suite of analytics tools offers visibility and metrics for measuring, evaluating, and optimizing RAG applications, including metrics for chunk attribution and context adherence. Explore how Galileo can improve your RAG implementation.

FAQs

What is claim-level evaluation in RAGChecker?

Claim-level evaluation decomposes responses into atomic verifiable statements called claims, then checks each claim individually against retrieved context and ground truth. You get precise error localization at the statement level rather than scoring entire responses, revealing exactly which facts are unsupported or incorrect.

How do you isolate whether RAG failures stem from retrieval versus generation?

RAGChecker performs triangulated evaluation comparing three pairs independently: retrieved context against ground truth (isolating retrieval quality), generated response against retrieved context (isolating faithfulness), and generated response against ground truth (overall correctness). Low retriever recall with high faithfulness indicates retrieval failure, while high recall with low faithfulness signals generation problems.

How do you integrate RAGChecker into CI/CD pipelines?

The documented approach uses GitHub Actions where code push triggers automated evaluation against test datasets, producing metrics that drive pass/fail gates. For cloud platforms like AWS SageMaker or Google Vertex AI, you'll need to develop custom pipeline steps executing RAGChecker as a containerized processing job. Custom integration typically requires 2-4 weeks of senior MLOps engineering time per platform.

When should you use fine-grained evaluation versus coarse metrics like BLEU?

Use fine-grained evaluation when you need to diagnose specific failure modes for targeted improvements, particularly when aggregate metrics show acceptable performance but users report quality issues. Use coarse metrics for rapid prototyping or when computational costs outweigh diagnostic benefits. RAGChecker's multi-stage LLM pipeline requires significant inference budget compared to simple lexical matching.

How does Galileo help with RAG system evaluation and monitoring?

Galileo offers operational monitoring infrastructure where RAGChecker provides diagnostic analysis. For production systems, Galileo includes real-time metrics, hierarchical workflow tracing, and runtime protection. RAGChecker excels at offline claim-level diagnostics to understand specific failure modes. You can use both: RAGChecker for root cause analysis during development, Galileo for production monitoring.

Your production RAG system generates confidently wrong answers while logs show successful completions. Users escalate tickets about incorrect information. Your team spends hours debugging, only to discover the problem wasn't retrieval quality: your generator was hallucinating despite having the right context.

Traditional metrics like BLEU and ROUGE showed stable performance throughout. You need diagnostic tools that actually pinpoint where failures occur.

You'll understand how its fine-grained metrics differ from aggregate scoring approaches and when claim-level diagnostics justify the computational investment. You'll also learn how to integrate RAGChecker into your MLOps workflows for systematic quality improvement.

TLDR:

RAGChecker uses claim-level entailment checking to attribute RAG failures to specific components

Provides separate diagnostic metrics for retrieval quality versus generation faithfulness

Requires AWS Bedrock Llama3 70B and custom MLOps integration

NeurIPS 2024 validated with 0.62-0.75 Kendall's tau correlation to human judgments

Best suited for offline diagnostic analysis, not real-time production monitoring

Complements observability platforms rather than replacing them

What Is RAGChecker?

RAGChecker is a peer-reviewed evaluation framework that diagnoses exactly where your RAG pipeline breaks, whether in retrieval, generation, or both. The framework uses claim-level analysis to isolate component failures.

Traditional RAG evaluation treats your system as a black box: input goes in, output comes out, and you're left guessing where failures occur. RAGChecker breaks this pattern through fine-grained evaluation that pinpoints specific failure points.

Researchers from Amazon AWS AI, Shanghai Jiao Tong University, and Westlake University published the AtomicClaimFactCheck framework at NeurIPS 2024, introducing a methodology that decomposes responses into atomic claims and performs four-directional comparisons among generated responses, ground truth answers, retrieved documents, and the original question.

You get precise error localization at the statement level rather than scoring entire responses holistically. While traditional metrics assign a single score to your entire output, RAGChecker identifies which specific claims fail and why, revealing exactly which facts are unsupported, which retrievals are irrelevant, and which generation patterns cause hallucinations.

Why Traditional RAG Evaluation Fails

BLEU and ROUGE metrics fail because they reward verbatim copying without verifying accuracy. Research shows BLEU is "insensitive to retrieval quality and can be manipulated by verbatim copying." Your system could achieve high BLEU scores by concatenating retrieved passages, even when those passages are outdated or irrelevant.

BERTScore represents an improvement through contextual embeddings, but still cannot detect hallucinations. Without component-level attribution, you cannot determine whether failures stem from retrieval quality, model choice, or generation parameters. Modern observability platforms address this gap through multi-dimensional metrics.

Traditional metrics provide aggregate scores that obscure where failures actually occur in multi-stage pipelines. Your retrieval module might fail through poor relevance while your generation module hallucinates, but single aggregate scores cannot isolate which component needs fixing.

How RAGChecker Works Under the Hood

Picture your RAG system as a crime scene. RAGChecker plays forensic investigator, collecting evidence (your query, documents, response), extracting claims from each piece, then triangulating what failed. The framework addresses both retrieval and generation components independently through separate diagnostic metrics.

The diagnostic approach provides two distinct categories of metrics:

Retrieval metrics measure whether your system fetches relevant documents and covers necessary claims

Generation metrics assess faithfulness to retrieved context, hallucination rates, and noise sensitivity

Separate diagnostics reveal whether poor performance stems from missing documents, irrelevant retrievals, unfaithful generation, or combinations of these failures

The diagnostic pipeline begins with input collection. The entailment checking mechanism extracts claims and computes relationships between them. Each generated claim pairs systematically with claims from ground truth and retrieved documents.

The LLM scores entailment across three dimensions: faithfulness (whether retrieved context supports the claim), correctness (verification against ground truth), and retrieval quality (whether retrieved documents support ground truth claims). This triangulated approach isolates failures to specific pipeline stages rather than producing a single opaque score.

How Do You Measure Retrieval Quality with RAGChecker?

Your retrieval component determines whether the right information reaches your generator. RAGChecker provides specific metrics to diagnose whether your system fetches relevant documents and covers the claims needed for accurate responses.

Understanding Retrieval Metrics

Your search returns 50 documents but only 3 answer the question. That's a retrieval precision problem RAGChecker catches. The framework provides four retrieval diagnostics:

Metric | What It Measures | Strong Performance | Critical Threshold |

Context Precision | Proportion of retrieved chunks that are relevant | >80% | <70% needs attention |

Context Recall | Proportion of ground truth claims supported by retrieved context | >80% | <60% signals gaps |

Claim Recall | Fraction of ground truth claims your retrieval surfaces | 60-70%+ | <50% indicates misses |

Chunk Attribution | How well retrieved chunks support generated claims | Varies by use case | Monitor for noise |

When Context Precision drops below 70%, you're retrieving noise. When Context Recall stays low, you're missing relevant documents. When Claim Recall crashes despite decent Context metrics, your knowledge base has gaps.

You can use retrieval metrics alongside RAGChecker for continuous monitoring.

Reading the Diagnostic Patterns

When system performance drops 15% in production, RAGChecker reveals the pattern: Context Precision remains at 85%, but Claim Recall dropped from 68% to 52%. Your retrieval quality is fine for documents fetched, but coverage gaps mean essential information isn't being retrieved.

Targeted diagnosis follows directly from metric patterns. Low Context Precision indicates you need to tune ranking algorithms or adjust similarity thresholds to filter noise. Low Context Recall points to expanding search scope, adjusting top-k parameters, or improving query transformation.

Low Claim Recall with acceptable Context metrics suggests knowledge base gaps. These diagnostic patterns enable you to prioritize engineering effort on the specific component causing failures rather than undertaking expensive system-wide rewrites.

How Do You Measure Generation Quality with RAGChecker?

Even with perfect retrieval, your generator can still fail. RAGChecker provides dedicated metrics to assess whether your model faithfully uses retrieved context or invents information on its own.

Explore Generation Metrics

Watch what happens when your model gets perfect context but still hallucinates. Faithfulness measures the proportion of generated claims directly supported by retrieved context, while hallucination detection identifies claims your model invented. These work as opposing forces: faithfulness tracks grounding; hallucination catches fabrication. Production systems need real-time tracking beyond batch evals.

Noise sensitivity measures degradation when irrelevant context pollutes your retrieval set

Context utilization assesses how effectively your generation component uses available retrieved information, revealing whether low performance stems from missing context or underutilization

Self-knowledge evaluates whether your model appropriately relies on parametric knowledge when retrieved context is insufficient

Together, these metrics create a comprehensive view of generation behavior across different failure modes.

Interpret Generation Failures

Your RAG system shows degraded overall accuracy compared to the previous month. RAGChecker reveals high Claim Recall, meaning retrieval provides necessary information, but faithfulness collapsed. You've isolated a pure generation failure: retrieval remains solid, but your generator stopped grounding responses in context.

Remediation strategy shifts completely. Instead of retuning retrieval algorithms or expanding your knowledge base, you focus on generation: adjusting system prompts to emphasize context adherence, implementing eval guardrails that block low-context-adherence responses, or switching to models with better instruction-following capabilities. Systematic experiment tracking enables you to validate improvements before deploying changes to production.

Balance Faithfulness Against Correctness

High faithfulness scores can mask a critical vulnerability in your RAG system. When your generator faithfully reproduces information from retrieved documents, it also faithfully reproduces errors present in those documents. RAGChecker tracks this tension through separate correctness metrics that compare generated claims against ground truth, independent of retrieval quality.

Monitor the gap between faithfulness and correctness scores to detect this failure mode. A system showing 90% faithfulness but only 70% correctness indicates your retrieval pipeline surfaces flawed documents that your generator dutifully follows. This pattern requires different intervention than hallucination problems: instead of constraining your generator, you need to improve document quality, implement source verification, or add retrieval-stage filtering.

Understanding this tradeoff prevents you from over-optimizing faithfulness at the expense of actual accuracy, ensuring your RAG system delivers correct answers rather than faithfully wrong ones.

How to Use RAGChecker Across Different Roles

You and your stakeholders need different views into RAG system performance. Role-based metric reporting bridges the gap between executive dashboards requiring aggregated KPIs and engineering teams needing granular diagnostics for root cause analysis. Understanding these different perspectives helps you communicate RAG quality effectively across your organization.

RAGChecker serves both needs through layered metric reporting that connects component-level failures to business-level performance indicators. Your leadership team tracks high-level SLAs while your engineering teams drill into retrieval precision, claim recall, and faithfulness scores. This dual-layer approach ensures technical improvements translate into metrics that matter for quarterly reviews and resource allocation decisions.

Executive View: Aggregated Metrics for Dashboards and SLAs

Your CTO asks for a single number to track RAG quality. Your engineers know single metrics mask component failures. RAGChecker bridges this gap by providing overall precision, recall, and F1 scores that aggregate component-level metrics into executive-ready performance indicators. Precision measures the proportion of generated claims that are correct according to ground truth. Recall measures coverage. F1 provides balanced assessment accounting for both accuracy and completeness.

These aggregate metrics support quarterly business reviews and SLA definition through production analytics. You can establish performance thresholds aligned with business requirements: maintain overall precision above 90% for customer-facing applications, ensure recall exceeds 75% for compliance documentation systems, or target F1 scores above 85% for internal knowledge management tools.

Engineering View: Diagnostic Slices for Root-Cause Analysis

Your engineering team needs fine-grained component-level metrics that drive precise diagnosis. When production issues arise, drill into retrieval-specific diagnostics: Context Precision revealing noise in retrieved documents, Context Recall showing whether relevant documents were retrieved, Claim Recall identifying which ground truth answer claims are supported by retrieved context. Component-level debugging with trace analysis enables systematic root cause identification.

Generation-focused diagnostics work similarly. Consider what happens when users report nonsensical answers: check faithfulness (62%), hallucination rate (increased from baseline), and noise sensitivity (stable). Low faithfulness with high hallucination directly indicates the generator stopped grounding in context. Adjust system prompts, validate faithfulness recovery in staging, and deploy with confidence that the specific failure mode is addressed.

How Do You Integrate RAGChecker into Enterprise MLOps?

Moving from diagnostic insights to operational workflows requires thoughtful integration planning. For your team, RAGChecker's value multiplies when embedded into existing MLOps infrastructure, transforming one-time evals into continuous quality monitoring. However, the framework demands custom engineering work since no turnkey solutions currently exist.

Understanding the integration patterns, governance implications, and operational limitations helps you assess whether the diagnostic depth justifies the implementation investment for your specific use case. The sections below outline practical approaches for CI/CD integration, compliance alignment, and managing known framework limitations.

Configure Integration Patterns

Production monitoring follows a batch eval pattern rather than real-time assessment. Production logs flow through ETL processing, converting to RAGChecker input format, executing evals, and storing metrics in your observability platform. Industry guidance recommends implementing statistical sampling, evaluating 1-10% of traffic rather than 100% to control costs. Sampling strategies enable cost-effective continuous monitoring. Schedule hourly, daily, or weekly batch runs based on your risk tolerance and budget constraints.

Budget 2-4 weeks of senior MLOps engineering time per platform for custom CI/CD integration development, as no turnkey solutions are available. This investment pays dividends through systematic quality tracking, but you should weigh these engineering costs against your diagnostic requirements and existing tooling capabilities.

Apply Governance and Compliance Controls

RAGChecker provides claim-level traceability relevant for audit requirements; you can trace individual claims back to source evidence. However, the framework doesn't generate compliance documentation or provide governance controls required by comprehensive GRC platforms. NIST AI RMF guidance states that trustworthy AI systems require documentation of "AI system design, development processes, data sources, model training, testing, and evaluation results."

RAGChecker addresses the evaluation component but requires integration with broader infrastructure for complete compliance. You can use governance features to complement RAGChecker's evaluation capabilities.

Manage Computational Costs at Scale

RAGChecker's multi-stage LLM pipeline creates significant cost considerations for enterprise deployments. Each eval requires multiple inference calls: claim extraction from responses, claim extraction from retrieved documents, and entailment checking across all claim pairs. For a single query evals, expect 5-10 LLM calls depending on response complexity and document count.

Control costs through strategic sampling and tiered evaluation approaches. Implement lightweight checks on 100% of traffic using simpler metrics, then route flagged responses to full RAGChecker analysis. Batch similar queries together to maximize throughput efficiency.

Cache claim extractions for repeated document chunks to avoid redundant processing. Set cost alerts tied to evaluation volume thresholds, and establish fallback procedures when budgets approach limits. These practices enable continuous diagnostic coverage without unpredictable infrastructure costs undermining your MLOps budget.

RAGChecker Limitations and Considerations

RAGChecker's diagnostic power comes with important constraints you should understand before committing to implementation:

Err or propagation: LLM-based claim extraction introduces inherent uncertainty throughout the evaluation pipeline, potentially missing hallucinations or unsupported synthesis outside detected claims

Flat claim structure: For medical diagnosis or legal analysis, the framework cannot capture causal chains and conditional dependencies that matter for complex reasoning

Reproducibility issues: GitHub Issue #24 documents nondeterministic behavior where identical evaluations produce different metric values, compromising CI/CD pipeline reliability

Missing data handling: Incomplete inputs propagate through the pipeline without clear error messages, producing unreliable downstream metrics

Computational overhead: The multi-stage LLM pipeline creates significant cost and latency constraints for large-scale continuous evaluation

For resource-constrained environments, you should carefully evaluate whether RAGChecker's claim-level diagnostic granularity justifies the computational investment compared to simpler evaluation approaches.

Making RAG Systems Reliable Through Systematic Diagnostics

RAG system failures don't announce themselves clearly. Traditional metrics show stability while your users receive confidently wrong answers. The shift from aggregate scoring to claim-level diagnostics allows precise attribution: you know whether to fix retrieval algorithms, expand knowledge bases, tune generation prompts, or filter noisy context.

RAGChecker provides the diagnostic framework through peer-reviewed methodology and open-source implementation. Claim-level granularity points you toward precise improvements rather than expensive system-wide redesigns.

RAGChecker handles offline diagnostic analysis. Production systems need additional capabilities for real-time monitoring, runtime protection, and operational metrics.

Galileo provides the production infrastructure RAGChecker lacks:

Real-time monitoring: Tracks quality metrics across 100% of production traffic without batch limitations

Hierarchical workflow tracing: Visualizes multi-step agent workflows to pinpoint exact failure points

Runtime protection: Blocks problematic responses before they reach users

Experiment tracking: Systematically validates improvements with A/B testing infrastructure

Production analytics: Surface performance trends without custom ETL development

Native platform integrations: Enable deployment in hours rather than weeks of custom engineering

Galileo’s suite of analytics tools offers visibility and metrics for measuring, evaluating, and optimizing RAG applications, including metrics for chunk attribution and context adherence. Explore how Galileo can improve your RAG implementation.

FAQs

What is claim-level evaluation in RAGChecker?

Claim-level evaluation decomposes responses into atomic verifiable statements called claims, then checks each claim individually against retrieved context and ground truth. You get precise error localization at the statement level rather than scoring entire responses, revealing exactly which facts are unsupported or incorrect.

How do you isolate whether RAG failures stem from retrieval versus generation?

RAGChecker performs triangulated evaluation comparing three pairs independently: retrieved context against ground truth (isolating retrieval quality), generated response against retrieved context (isolating faithfulness), and generated response against ground truth (overall correctness). Low retriever recall with high faithfulness indicates retrieval failure, while high recall with low faithfulness signals generation problems.

How do you integrate RAGChecker into CI/CD pipelines?

The documented approach uses GitHub Actions where code push triggers automated evaluation against test datasets, producing metrics that drive pass/fail gates. For cloud platforms like AWS SageMaker or Google Vertex AI, you'll need to develop custom pipeline steps executing RAGChecker as a containerized processing job. Custom integration typically requires 2-4 weeks of senior MLOps engineering time per platform.

When should you use fine-grained evaluation versus coarse metrics like BLEU?

Use fine-grained evaluation when you need to diagnose specific failure modes for targeted improvements, particularly when aggregate metrics show acceptable performance but users report quality issues. Use coarse metrics for rapid prototyping or when computational costs outweigh diagnostic benefits. RAGChecker's multi-stage LLM pipeline requires significant inference budget compared to simple lexical matching.

How does Galileo help with RAG system evaluation and monitoring?

Galileo offers operational monitoring infrastructure where RAGChecker provides diagnostic analysis. For production systems, Galileo includes real-time metrics, hierarchical workflow tracing, and runtime protection. RAGChecker excels at offline claim-level diagnostics to understand specific failure modes. You can use both: RAGChecker for root cause analysis during development, Galileo for production monitoring.

Your production RAG system generates confidently wrong answers while logs show successful completions. Users escalate tickets about incorrect information. Your team spends hours debugging, only to discover the problem wasn't retrieval quality: your generator was hallucinating despite having the right context.

Traditional metrics like BLEU and ROUGE showed stable performance throughout. You need diagnostic tools that actually pinpoint where failures occur.

You'll understand how its fine-grained metrics differ from aggregate scoring approaches and when claim-level diagnostics justify the computational investment. You'll also learn how to integrate RAGChecker into your MLOps workflows for systematic quality improvement.

TLDR:

RAGChecker uses claim-level entailment checking to attribute RAG failures to specific components

Provides separate diagnostic metrics for retrieval quality versus generation faithfulness

Requires AWS Bedrock Llama3 70B and custom MLOps integration

NeurIPS 2024 validated with 0.62-0.75 Kendall's tau correlation to human judgments

Best suited for offline diagnostic analysis, not real-time production monitoring

Complements observability platforms rather than replacing them

What Is RAGChecker?

RAGChecker is a peer-reviewed evaluation framework that diagnoses exactly where your RAG pipeline breaks, whether in retrieval, generation, or both. The framework uses claim-level analysis to isolate component failures.

Traditional RAG evaluation treats your system as a black box: input goes in, output comes out, and you're left guessing where failures occur. RAGChecker breaks this pattern through fine-grained evaluation that pinpoints specific failure points.

Researchers from Amazon AWS AI, Shanghai Jiao Tong University, and Westlake University published the AtomicClaimFactCheck framework at NeurIPS 2024, introducing a methodology that decomposes responses into atomic claims and performs four-directional comparisons among generated responses, ground truth answers, retrieved documents, and the original question.

You get precise error localization at the statement level rather than scoring entire responses holistically. While traditional metrics assign a single score to your entire output, RAGChecker identifies which specific claims fail and why, revealing exactly which facts are unsupported, which retrievals are irrelevant, and which generation patterns cause hallucinations.

Why Traditional RAG Evaluation Fails

BLEU and ROUGE metrics fail because they reward verbatim copying without verifying accuracy. Research shows BLEU is "insensitive to retrieval quality and can be manipulated by verbatim copying." Your system could achieve high BLEU scores by concatenating retrieved passages, even when those passages are outdated or irrelevant.

BERTScore represents an improvement through contextual embeddings, but still cannot detect hallucinations. Without component-level attribution, you cannot determine whether failures stem from retrieval quality, model choice, or generation parameters. Modern observability platforms address this gap through multi-dimensional metrics.

Traditional metrics provide aggregate scores that obscure where failures actually occur in multi-stage pipelines. Your retrieval module might fail through poor relevance while your generation module hallucinates, but single aggregate scores cannot isolate which component needs fixing.

How RAGChecker Works Under the Hood

Picture your RAG system as a crime scene. RAGChecker plays forensic investigator, collecting evidence (your query, documents, response), extracting claims from each piece, then triangulating what failed. The framework addresses both retrieval and generation components independently through separate diagnostic metrics.

The diagnostic approach provides two distinct categories of metrics:

Retrieval metrics measure whether your system fetches relevant documents and covers necessary claims

Generation metrics assess faithfulness to retrieved context, hallucination rates, and noise sensitivity

Separate diagnostics reveal whether poor performance stems from missing documents, irrelevant retrievals, unfaithful generation, or combinations of these failures

The diagnostic pipeline begins with input collection. The entailment checking mechanism extracts claims and computes relationships between them. Each generated claim pairs systematically with claims from ground truth and retrieved documents.

The LLM scores entailment across three dimensions: faithfulness (whether retrieved context supports the claim), correctness (verification against ground truth), and retrieval quality (whether retrieved documents support ground truth claims). This triangulated approach isolates failures to specific pipeline stages rather than producing a single opaque score.

How Do You Measure Retrieval Quality with RAGChecker?

Your retrieval component determines whether the right information reaches your generator. RAGChecker provides specific metrics to diagnose whether your system fetches relevant documents and covers the claims needed for accurate responses.

Understanding Retrieval Metrics

Your search returns 50 documents but only 3 answer the question. That's a retrieval precision problem RAGChecker catches. The framework provides four retrieval diagnostics:

Metric | What It Measures | Strong Performance | Critical Threshold |

Context Precision | Proportion of retrieved chunks that are relevant | >80% | <70% needs attention |

Context Recall | Proportion of ground truth claims supported by retrieved context | >80% | <60% signals gaps |

Claim Recall | Fraction of ground truth claims your retrieval surfaces | 60-70%+ | <50% indicates misses |

Chunk Attribution | How well retrieved chunks support generated claims | Varies by use case | Monitor for noise |

When Context Precision drops below 70%, you're retrieving noise. When Context Recall stays low, you're missing relevant documents. When Claim Recall crashes despite decent Context metrics, your knowledge base has gaps.

You can use retrieval metrics alongside RAGChecker for continuous monitoring.

Reading the Diagnostic Patterns

When system performance drops 15% in production, RAGChecker reveals the pattern: Context Precision remains at 85%, but Claim Recall dropped from 68% to 52%. Your retrieval quality is fine for documents fetched, but coverage gaps mean essential information isn't being retrieved.

Targeted diagnosis follows directly from metric patterns. Low Context Precision indicates you need to tune ranking algorithms or adjust similarity thresholds to filter noise. Low Context Recall points to expanding search scope, adjusting top-k parameters, or improving query transformation.

Low Claim Recall with acceptable Context metrics suggests knowledge base gaps. These diagnostic patterns enable you to prioritize engineering effort on the specific component causing failures rather than undertaking expensive system-wide rewrites.

How Do You Measure Generation Quality with RAGChecker?

Even with perfect retrieval, your generator can still fail. RAGChecker provides dedicated metrics to assess whether your model faithfully uses retrieved context or invents information on its own.

Explore Generation Metrics

Watch what happens when your model gets perfect context but still hallucinates. Faithfulness measures the proportion of generated claims directly supported by retrieved context, while hallucination detection identifies claims your model invented. These work as opposing forces: faithfulness tracks grounding; hallucination catches fabrication. Production systems need real-time tracking beyond batch evals.

Noise sensitivity measures degradation when irrelevant context pollutes your retrieval set

Context utilization assesses how effectively your generation component uses available retrieved information, revealing whether low performance stems from missing context or underutilization

Self-knowledge evaluates whether your model appropriately relies on parametric knowledge when retrieved context is insufficient

Together, these metrics create a comprehensive view of generation behavior across different failure modes.

Interpret Generation Failures

Your RAG system shows degraded overall accuracy compared to the previous month. RAGChecker reveals high Claim Recall, meaning retrieval provides necessary information, but faithfulness collapsed. You've isolated a pure generation failure: retrieval remains solid, but your generator stopped grounding responses in context.

Remediation strategy shifts completely. Instead of retuning retrieval algorithms or expanding your knowledge base, you focus on generation: adjusting system prompts to emphasize context adherence, implementing eval guardrails that block low-context-adherence responses, or switching to models with better instruction-following capabilities. Systematic experiment tracking enables you to validate improvements before deploying changes to production.

Balance Faithfulness Against Correctness

High faithfulness scores can mask a critical vulnerability in your RAG system. When your generator faithfully reproduces information from retrieved documents, it also faithfully reproduces errors present in those documents. RAGChecker tracks this tension through separate correctness metrics that compare generated claims against ground truth, independent of retrieval quality.

Monitor the gap between faithfulness and correctness scores to detect this failure mode. A system showing 90% faithfulness but only 70% correctness indicates your retrieval pipeline surfaces flawed documents that your generator dutifully follows. This pattern requires different intervention than hallucination problems: instead of constraining your generator, you need to improve document quality, implement source verification, or add retrieval-stage filtering.

Understanding this tradeoff prevents you from over-optimizing faithfulness at the expense of actual accuracy, ensuring your RAG system delivers correct answers rather than faithfully wrong ones.

How to Use RAGChecker Across Different Roles

You and your stakeholders need different views into RAG system performance. Role-based metric reporting bridges the gap between executive dashboards requiring aggregated KPIs and engineering teams needing granular diagnostics for root cause analysis. Understanding these different perspectives helps you communicate RAG quality effectively across your organization.

RAGChecker serves both needs through layered metric reporting that connects component-level failures to business-level performance indicators. Your leadership team tracks high-level SLAs while your engineering teams drill into retrieval precision, claim recall, and faithfulness scores. This dual-layer approach ensures technical improvements translate into metrics that matter for quarterly reviews and resource allocation decisions.

Executive View: Aggregated Metrics for Dashboards and SLAs

Your CTO asks for a single number to track RAG quality. Your engineers know single metrics mask component failures. RAGChecker bridges this gap by providing overall precision, recall, and F1 scores that aggregate component-level metrics into executive-ready performance indicators. Precision measures the proportion of generated claims that are correct according to ground truth. Recall measures coverage. F1 provides balanced assessment accounting for both accuracy and completeness.

These aggregate metrics support quarterly business reviews and SLA definition through production analytics. You can establish performance thresholds aligned with business requirements: maintain overall precision above 90% for customer-facing applications, ensure recall exceeds 75% for compliance documentation systems, or target F1 scores above 85% for internal knowledge management tools.

Engineering View: Diagnostic Slices for Root-Cause Analysis

Your engineering team needs fine-grained component-level metrics that drive precise diagnosis. When production issues arise, drill into retrieval-specific diagnostics: Context Precision revealing noise in retrieved documents, Context Recall showing whether relevant documents were retrieved, Claim Recall identifying which ground truth answer claims are supported by retrieved context. Component-level debugging with trace analysis enables systematic root cause identification.

Generation-focused diagnostics work similarly. Consider what happens when users report nonsensical answers: check faithfulness (62%), hallucination rate (increased from baseline), and noise sensitivity (stable). Low faithfulness with high hallucination directly indicates the generator stopped grounding in context. Adjust system prompts, validate faithfulness recovery in staging, and deploy with confidence that the specific failure mode is addressed.

How Do You Integrate RAGChecker into Enterprise MLOps?

Moving from diagnostic insights to operational workflows requires thoughtful integration planning. For your team, RAGChecker's value multiplies when embedded into existing MLOps infrastructure, transforming one-time evals into continuous quality monitoring. However, the framework demands custom engineering work since no turnkey solutions currently exist.

Understanding the integration patterns, governance implications, and operational limitations helps you assess whether the diagnostic depth justifies the implementation investment for your specific use case. The sections below outline practical approaches for CI/CD integration, compliance alignment, and managing known framework limitations.