Dec 13, 2025

How Architecture Patterns and Ownership Models Scale AI Guardrails

Jackson Wells

Integrated Marketing

Jackson Wells

Integrated Marketing

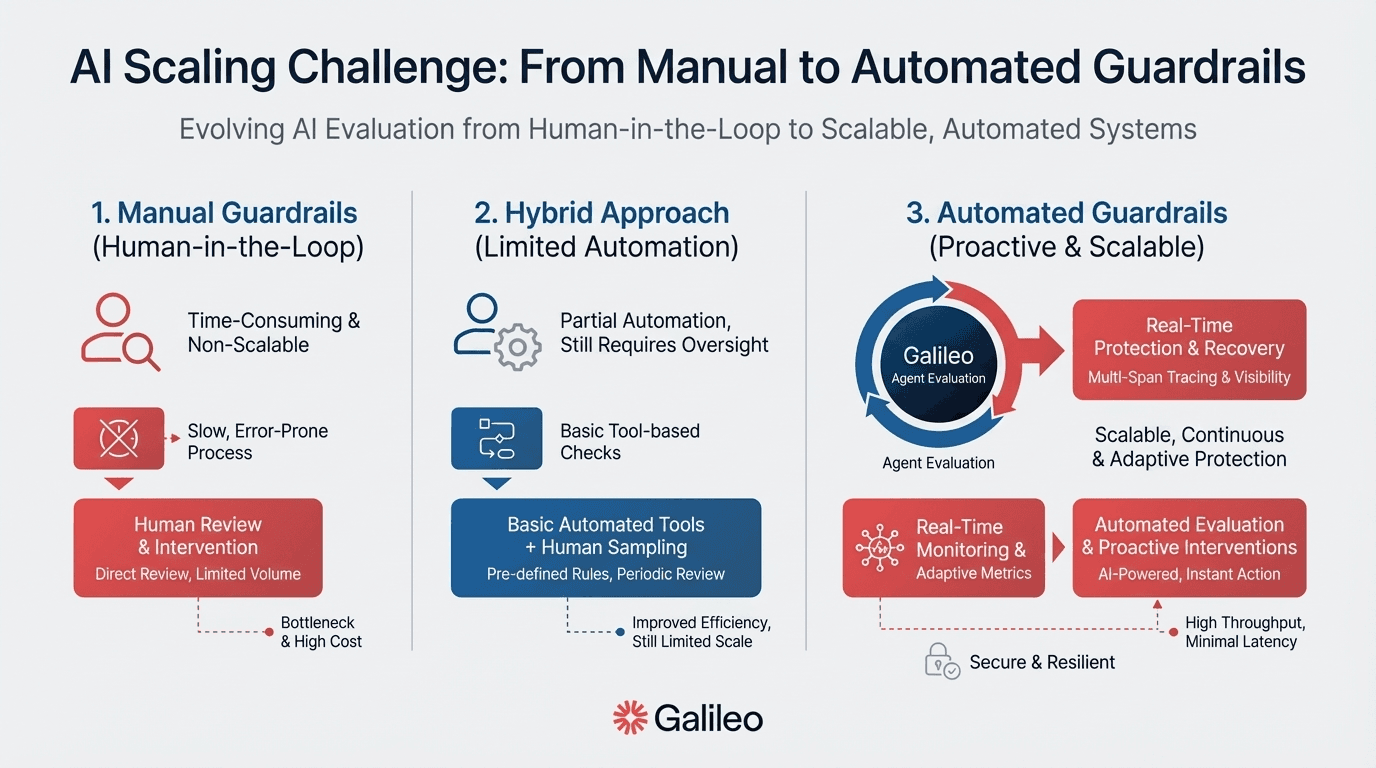

Your AI agents are multiplying faster than your governance capabilities. Manual reviews that worked for pilot programs now create deployment bottlenecks, and you're discovering policy violations in production rather than preventing them.

The cost of getting this wrong: $10.22 million per data breach on average, with 95% of AI pilots failing to deliver measurable ROI.

For platform teams and engineering leaders deploying AI at scale, the core tension is maintaining consistent safety and compliance controls across dozens of systems without slowing innovation to a crawl.

This article breaks down the guardrail architecture patterns—centralized service layers, layered request-path controls, API gateway enforcement—and operating models that scale with your team growth and product complexity.

We recently explored this topic on our Chain of Thought podcast, where industry experts shared practical insights and real-world implementation strategies:

Why are AI guardrails the key to scaling AI initiatives?

Guardrails change the economics of AI deployment. Without them, every new AI application requires its own safety review, its own compliance sign-off, its own security audit. Your platform team becomes a bottleneck, and product teams start shipping without approval because waiting six weeks for a review isn't viable when competitors are moving in days.

Your competitors face the same paradox: 88% of enterprises use AI in at least one business function, yet only one-third have scaled AI enterprise-wide. The gap between adoption and value realization stems from a failure to implement systematic governance architectures. AI-related incidents reached 233 in 2024, representing a 56.4% increase over 2023.

AI guardrails enable scaling by solving the fundamental tension between velocity and safety. When you move from 3 pilot systems to 20 production applications, manual safety reviews become bottlenecks. AI Guardrails automate these controls, allowing your engineering teams to ship faster while maintaining consistent governance.

The scaling advantage manifests in three ways.

First, guardrails eliminate redundant work: instead of each product team building their own safety controls, they consume centralized guardrail services. This reduces the compliance work that adds no value while accelerating approval processes.

Second, guardrails provide consistent protection across all AI systems. Whether you're deploying a customer service chatbot or a financial analysis agent, the same baseline controls apply automatically.

Third, guardrails enable autonomous operation at scale. Your 3 AI systems could be manually monitored; your 20 systems require automated real-time intervention.

When does ad hoc AI guardrailing break down?

Ad hoc governance works until it doesn't. The failure mode is predictable: gradual erosion followed by sudden collapse.

The erosion starts with workarounds:

Teams bypass reviews to hit deadlines. A product team needs to ship by quarter-end, so they skip the full security review and promise to circle back. That circle-back never happens, and now you have an unvalidated system in production.

Safety logic gets copy-pasted without context. Another team copies guardrail code from a different project without understanding the edge cases it was designed to handle. The code runs, but the protection is superficial.

Thresholds get tuned into uselessness. A third team implements content filtering but sets thresholds so permissive they're functionally decorative. The guardrail exists on paper but catches nothing in practice.

Ownership dissolves across teams. Nobody knows what controls actually exist across your AI portfolio. PII redaction is implemented three different ways. Output validation is someone else's responsibility, except it isn't anyone's.

Failure patterns stay invisible. When guardrails exist as scattered code across twelve repositories, you can't analyze trends. Which prompt injection techniques are attackers actually using? What percentage of flagged outputs are false positives? Where are your real vulnerabilities? Ad hoc implementations generate incidents. Systematic guardrails generate the telemetry that makes your AI systems smarter over time.

None of these decisions look dangerous in isolation. Collectively, they create the conditions for public failure. This can be an agent exposing customer data, a chatbot violating brand guidelines, a financial tool hallucinating numbers that make it into a client presentation.

What architectural patterns are used to scale AI guardrails?

Repeatable architecture separates organizations stuck in pilot purgatory from those running AI at enterprise scale."

AI guardrail taxonomy

Before implementing any architecture, you need shared language. Without it, your platform team's "output filtering" means toxicity classifiers while security interprets it as keyword blocking and product engineers think it's content moderation. Three teams, three definitions, architecture discussions that go nowhere.

A practical taxonomy organizes guardrails into four layers:

AI governance: Policies and frameworks establishing risk thresholds and approval workflows

Runtime inspection and enforcement: Real-time controls including input validation, output filtering, and intervention logic

Information governance: Data quality validation, PII handling, and compliance checks

Infrastructure and stack: Platform-level security controls, access management, and audit logging

The layers are interdependent. Runtime enforcement without AI governance means your guardrails have no policy basis—teams argue endlessly about what should be blocked.

Governance without runtime enforcement means policies exist in documentation but not in code. Information governance gaps mean your other controls are processing data that shouldn't reach your AI systems in the first place.

This taxonomy transforms vague requirements into precise technical specifications. When a stakeholder asks for "safer AI," you can decompose the request: which layer is the gap in?

Central guardrail service layer

Most teams let each product team implement their own guardrails, creating redundant code that teams maintain separately.

A centralized service pattern solves this through shared infrastructure where all AI interactions flow through a central service enforcing input validation before LLM processing, real-time monitoring during execution, output filtering post-processing, comprehensive logging and metrics, and compliance data storage.

Production platforms demonstrate sub-200ms interception latency, handling input validation (prompt injection, PII, toxicity) and output filtering (hallucinations, context adherence) through centralized APIs that any product team can consume without reimplementing safety logic.

Start centralized when you have 10-50 engineers. Your platform team should own all guardrail infrastructure while product teams consume guardrails as managed services, ensuring consistent standards across all AI applications without building safety infrastructure from scratch.

Layered guardrails in the request path

You probably treat guardrails as binary: either a prompt passes validation or it doesn't. Production systems require more nuance. Your tenth product team needs healthcare-specific PII controls that your baseline configuration doesn't address. Financial services applications require transaction limits that customer service chatbots don't.

Beyond 50 engineers, you need flexibility for product-specific requirements while maintaining baseline controls. Platforms implement inheritance models where base guardrails defined centrally automatically inherit to all AI products, but product teams can layer additional controls. Production frameworks demonstrate multi-layer security:

Inputs get scanned for jailbreak attempts through adversarial pattern recognition

Agent reasoning steps get validated using chain-of-thought auditing

Generated code undergoes real-time static analysis

Each component operates at a distinct stage of the AI agent lifecycle to provide redundant protection even if individual layers fail, a critical design principle when adversaries actively probe for single points of failure.

API gateway as an enforcement point

Your API gateway already handles authentication, rate limiting, and routing, yet most teams build separate systems for AI guardrails. The gateway sits at the ideal intervention point: between users and your AI systems, where it can intercept requests before they reach models and validate responses before they reach users.

Gateways already possess the infrastructure components required for guardrails:

Request routing logic for directing traffic through safety pipelines

Header manipulation capabilities for context passing

Logging pipelines aggregating telemetry data

High-throughput processing handling enterprise scale

When you cross 100 engineers, you need agent inventory and identity management tracking all AI systems, centralized policies applied consistently across products with configurable overrides, unified observability aggregating telemetry from all agents, control and coordination tools for multi-agent workflows, and automated audit capabilities generating compliance evidence across all products.

Who owns AI guardrails at scale?

Architecture patterns fail without clear ownership. The most contentious question in your planning meetings isn't which technology to use; it's who is accountable when guardrails fail and who has authority to update policies without creating bottlenecks.

Successful operating models require platform teams with the right expertise, governance forums with clear decision rights, and guardrails embedded throughout your software development lifecycle.

Staff your guardrail platform team

How do you staff a specialized function when you're managing only three AI applications? AI guardrails require expertise spanning ML engineering, security, compliance, and ethics, yet you can't hire five specialists for a system supporting three applications.

Enterprise AI teams with 10-50 engineers typically need 5-15 person teams combining MLOps Engineers (3-5 FTEs) automating deployment pipelines, AI Ethics Specialists (1-2 FTEs) developing policy frameworks, and Security Engineers (2-3 FTEs) performing threat modeling.

When you cross 50 engineers, add Compliance Officers (1-2 FTEs) ensuring regulatory alignment and expand to 20-50 distributed practitioners. Leading frameworks integrate these roles across four SDLC phases: Design (ethics and policy), Development (MLOps automation), Deployment (security validation), and Operations (product management and monitoring).

Many AI pilots struggle to achieve measurable ROI, and governance challenges are frequently cited as barriers to success. Effective systematic oversight can help control compliance costs and maintain necessary controls, addressing issues that often impact AI pilot programs.

Define governance forums and decision rights

Without clear authority, product teams either bypass governance entirely or negotiations stall releases. MIT Sloan research on agentic AI enterprise transformation emphasizes that scaling AI requires redesigned decision rights and accountability structures through systematic governance frameworks, not just better tooling.

The UK Government AI Playbook establishes AI Governance Boards with multi-stakeholder representation requiring clear reporting lines to senior management, formally defined decision rights, and documented terms of reference.

Effective committees balance oversight with velocity: they establish policies defining risk thresholds, determine which AI applications require human oversight, and review aggregate metrics demonstrating compliance trends rather than approving every model deployment.

Embed AI guardrails across the SDLC

Most teams treat guardrail compliance as a pre-launch gate. By then, technical debt has accumulated and refactoring is expensive.

Production teams embed governance throughout development: catching violations at code commit when fixing them costs hours instead of weeks, validating safety controls during build pipelines before they reach staging environments, and monitoring compliance in production with automated remediation.

Industry best practices solve this through "shift-left" governance embedded across four key phases: design phase policy creation, development phase controls embedded in code workflows, deployment phase pre-production validation gates, and operations phase real-time runtime monitoring.

The shift-left approach works because it aligns incentives: developers receive immediate feedback on policy violations rather than discovering failures weeks later during review gates.

AI guardrails as a competitive advantage

The organizations winning with AI aren't moving fast and breaking things. They're moving fast because they built the governance infrastructure that lets them. Guardrails are the foundation that makes sustained innovation possible. Start building that foundation now.

Here's how Galileo helps you with AI guardrails:

Automated quality guardrails in CI/CD: Galileo integrates directly into your development workflow, running comprehensive evaluations on every code change and blocking releases that fail quality thresholds

Multi-dimensional response evaluation: With Galileo's Luna-2 evaluation models, you can assess every output across dozens of quality dimensions—correctness, toxicity, bias, adherence—at 97% lower cost than traditional LLM-based evaluation approaches

Real-time runtime protection: Galileo's Agent Protect scans every prompt and response in production, blocking harmful outputs before they reach users while maintaining detailed compliance logs for audit requirements

Intelligent failure detection: Galileo’s Insights Engine automatically clusters similar failures, surfaces root-cause patterns, and recommends fixes, reducing debugging time while building institutional knowledge

Human-in-the-loop optimization: Galileo's Continuous Learning via Human Feedback (CLHF) transforms expert reviews into reusable evaluators, accelerating iteration while maintaining quality standards

Discover how Galileo provides enterprise-grade AI guardrails with pre-built policies, real-time metrics, and ready-made integrations.

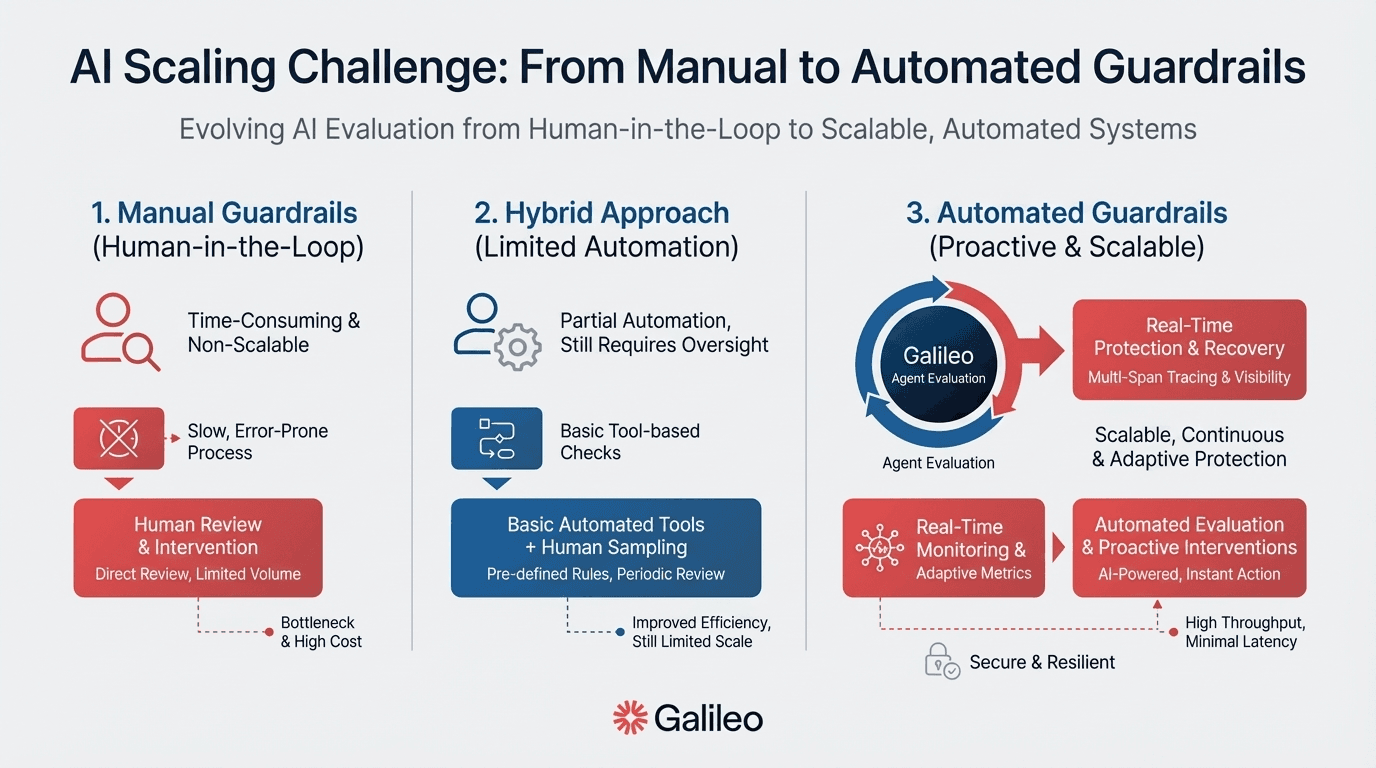

Your AI agents are multiplying faster than your governance capabilities. Manual reviews that worked for pilot programs now create deployment bottlenecks, and you're discovering policy violations in production rather than preventing them.

The cost of getting this wrong: $10.22 million per data breach on average, with 95% of AI pilots failing to deliver measurable ROI.

For platform teams and engineering leaders deploying AI at scale, the core tension is maintaining consistent safety and compliance controls across dozens of systems without slowing innovation to a crawl.

This article breaks down the guardrail architecture patterns—centralized service layers, layered request-path controls, API gateway enforcement—and operating models that scale with your team growth and product complexity.

We recently explored this topic on our Chain of Thought podcast, where industry experts shared practical insights and real-world implementation strategies:

Why are AI guardrails the key to scaling AI initiatives?

Guardrails change the economics of AI deployment. Without them, every new AI application requires its own safety review, its own compliance sign-off, its own security audit. Your platform team becomes a bottleneck, and product teams start shipping without approval because waiting six weeks for a review isn't viable when competitors are moving in days.

Your competitors face the same paradox: 88% of enterprises use AI in at least one business function, yet only one-third have scaled AI enterprise-wide. The gap between adoption and value realization stems from a failure to implement systematic governance architectures. AI-related incidents reached 233 in 2024, representing a 56.4% increase over 2023.

AI guardrails enable scaling by solving the fundamental tension between velocity and safety. When you move from 3 pilot systems to 20 production applications, manual safety reviews become bottlenecks. AI Guardrails automate these controls, allowing your engineering teams to ship faster while maintaining consistent governance.

The scaling advantage manifests in three ways.

First, guardrails eliminate redundant work: instead of each product team building their own safety controls, they consume centralized guardrail services. This reduces the compliance work that adds no value while accelerating approval processes.

Second, guardrails provide consistent protection across all AI systems. Whether you're deploying a customer service chatbot or a financial analysis agent, the same baseline controls apply automatically.

Third, guardrails enable autonomous operation at scale. Your 3 AI systems could be manually monitored; your 20 systems require automated real-time intervention.

When does ad hoc AI guardrailing break down?

Ad hoc governance works until it doesn't. The failure mode is predictable: gradual erosion followed by sudden collapse.

The erosion starts with workarounds:

Teams bypass reviews to hit deadlines. A product team needs to ship by quarter-end, so they skip the full security review and promise to circle back. That circle-back never happens, and now you have an unvalidated system in production.

Safety logic gets copy-pasted without context. Another team copies guardrail code from a different project without understanding the edge cases it was designed to handle. The code runs, but the protection is superficial.

Thresholds get tuned into uselessness. A third team implements content filtering but sets thresholds so permissive they're functionally decorative. The guardrail exists on paper but catches nothing in practice.

Ownership dissolves across teams. Nobody knows what controls actually exist across your AI portfolio. PII redaction is implemented three different ways. Output validation is someone else's responsibility, except it isn't anyone's.

Failure patterns stay invisible. When guardrails exist as scattered code across twelve repositories, you can't analyze trends. Which prompt injection techniques are attackers actually using? What percentage of flagged outputs are false positives? Where are your real vulnerabilities? Ad hoc implementations generate incidents. Systematic guardrails generate the telemetry that makes your AI systems smarter over time.

None of these decisions look dangerous in isolation. Collectively, they create the conditions for public failure. This can be an agent exposing customer data, a chatbot violating brand guidelines, a financial tool hallucinating numbers that make it into a client presentation.

What architectural patterns are used to scale AI guardrails?

Repeatable architecture separates organizations stuck in pilot purgatory from those running AI at enterprise scale."

AI guardrail taxonomy

Before implementing any architecture, you need shared language. Without it, your platform team's "output filtering" means toxicity classifiers while security interprets it as keyword blocking and product engineers think it's content moderation. Three teams, three definitions, architecture discussions that go nowhere.

A practical taxonomy organizes guardrails into four layers:

AI governance: Policies and frameworks establishing risk thresholds and approval workflows

Runtime inspection and enforcement: Real-time controls including input validation, output filtering, and intervention logic

Information governance: Data quality validation, PII handling, and compliance checks

Infrastructure and stack: Platform-level security controls, access management, and audit logging

The layers are interdependent. Runtime enforcement without AI governance means your guardrails have no policy basis—teams argue endlessly about what should be blocked.

Governance without runtime enforcement means policies exist in documentation but not in code. Information governance gaps mean your other controls are processing data that shouldn't reach your AI systems in the first place.

This taxonomy transforms vague requirements into precise technical specifications. When a stakeholder asks for "safer AI," you can decompose the request: which layer is the gap in?

Central guardrail service layer

Most teams let each product team implement their own guardrails, creating redundant code that teams maintain separately.

A centralized service pattern solves this through shared infrastructure where all AI interactions flow through a central service enforcing input validation before LLM processing, real-time monitoring during execution, output filtering post-processing, comprehensive logging and metrics, and compliance data storage.

Production platforms demonstrate sub-200ms interception latency, handling input validation (prompt injection, PII, toxicity) and output filtering (hallucinations, context adherence) through centralized APIs that any product team can consume without reimplementing safety logic.

Start centralized when you have 10-50 engineers. Your platform team should own all guardrail infrastructure while product teams consume guardrails as managed services, ensuring consistent standards across all AI applications without building safety infrastructure from scratch.

Layered guardrails in the request path

You probably treat guardrails as binary: either a prompt passes validation or it doesn't. Production systems require more nuance. Your tenth product team needs healthcare-specific PII controls that your baseline configuration doesn't address. Financial services applications require transaction limits that customer service chatbots don't.

Beyond 50 engineers, you need flexibility for product-specific requirements while maintaining baseline controls. Platforms implement inheritance models where base guardrails defined centrally automatically inherit to all AI products, but product teams can layer additional controls. Production frameworks demonstrate multi-layer security:

Inputs get scanned for jailbreak attempts through adversarial pattern recognition

Agent reasoning steps get validated using chain-of-thought auditing

Generated code undergoes real-time static analysis

Each component operates at a distinct stage of the AI agent lifecycle to provide redundant protection even if individual layers fail, a critical design principle when adversaries actively probe for single points of failure.

API gateway as an enforcement point

Your API gateway already handles authentication, rate limiting, and routing, yet most teams build separate systems for AI guardrails. The gateway sits at the ideal intervention point: between users and your AI systems, where it can intercept requests before they reach models and validate responses before they reach users.

Gateways already possess the infrastructure components required for guardrails:

Request routing logic for directing traffic through safety pipelines

Header manipulation capabilities for context passing

Logging pipelines aggregating telemetry data

High-throughput processing handling enterprise scale

When you cross 100 engineers, you need agent inventory and identity management tracking all AI systems, centralized policies applied consistently across products with configurable overrides, unified observability aggregating telemetry from all agents, control and coordination tools for multi-agent workflows, and automated audit capabilities generating compliance evidence across all products.

Who owns AI guardrails at scale?

Architecture patterns fail without clear ownership. The most contentious question in your planning meetings isn't which technology to use; it's who is accountable when guardrails fail and who has authority to update policies without creating bottlenecks.

Successful operating models require platform teams with the right expertise, governance forums with clear decision rights, and guardrails embedded throughout your software development lifecycle.

Staff your guardrail platform team

How do you staff a specialized function when you're managing only three AI applications? AI guardrails require expertise spanning ML engineering, security, compliance, and ethics, yet you can't hire five specialists for a system supporting three applications.

Enterprise AI teams with 10-50 engineers typically need 5-15 person teams combining MLOps Engineers (3-5 FTEs) automating deployment pipelines, AI Ethics Specialists (1-2 FTEs) developing policy frameworks, and Security Engineers (2-3 FTEs) performing threat modeling.

When you cross 50 engineers, add Compliance Officers (1-2 FTEs) ensuring regulatory alignment and expand to 20-50 distributed practitioners. Leading frameworks integrate these roles across four SDLC phases: Design (ethics and policy), Development (MLOps automation), Deployment (security validation), and Operations (product management and monitoring).

Many AI pilots struggle to achieve measurable ROI, and governance challenges are frequently cited as barriers to success. Effective systematic oversight can help control compliance costs and maintain necessary controls, addressing issues that often impact AI pilot programs.

Define governance forums and decision rights

Without clear authority, product teams either bypass governance entirely or negotiations stall releases. MIT Sloan research on agentic AI enterprise transformation emphasizes that scaling AI requires redesigned decision rights and accountability structures through systematic governance frameworks, not just better tooling.

The UK Government AI Playbook establishes AI Governance Boards with multi-stakeholder representation requiring clear reporting lines to senior management, formally defined decision rights, and documented terms of reference.

Effective committees balance oversight with velocity: they establish policies defining risk thresholds, determine which AI applications require human oversight, and review aggregate metrics demonstrating compliance trends rather than approving every model deployment.

Embed AI guardrails across the SDLC

Most teams treat guardrail compliance as a pre-launch gate. By then, technical debt has accumulated and refactoring is expensive.

Production teams embed governance throughout development: catching violations at code commit when fixing them costs hours instead of weeks, validating safety controls during build pipelines before they reach staging environments, and monitoring compliance in production with automated remediation.

Industry best practices solve this through "shift-left" governance embedded across four key phases: design phase policy creation, development phase controls embedded in code workflows, deployment phase pre-production validation gates, and operations phase real-time runtime monitoring.

The shift-left approach works because it aligns incentives: developers receive immediate feedback on policy violations rather than discovering failures weeks later during review gates.

AI guardrails as a competitive advantage

The organizations winning with AI aren't moving fast and breaking things. They're moving fast because they built the governance infrastructure that lets them. Guardrails are the foundation that makes sustained innovation possible. Start building that foundation now.

Here's how Galileo helps you with AI guardrails:

Automated quality guardrails in CI/CD: Galileo integrates directly into your development workflow, running comprehensive evaluations on every code change and blocking releases that fail quality thresholds

Multi-dimensional response evaluation: With Galileo's Luna-2 evaluation models, you can assess every output across dozens of quality dimensions—correctness, toxicity, bias, adherence—at 97% lower cost than traditional LLM-based evaluation approaches

Real-time runtime protection: Galileo's Agent Protect scans every prompt and response in production, blocking harmful outputs before they reach users while maintaining detailed compliance logs for audit requirements

Intelligent failure detection: Galileo’s Insights Engine automatically clusters similar failures, surfaces root-cause patterns, and recommends fixes, reducing debugging time while building institutional knowledge

Human-in-the-loop optimization: Galileo's Continuous Learning via Human Feedback (CLHF) transforms expert reviews into reusable evaluators, accelerating iteration while maintaining quality standards

Discover how Galileo provides enterprise-grade AI guardrails with pre-built policies, real-time metrics, and ready-made integrations.

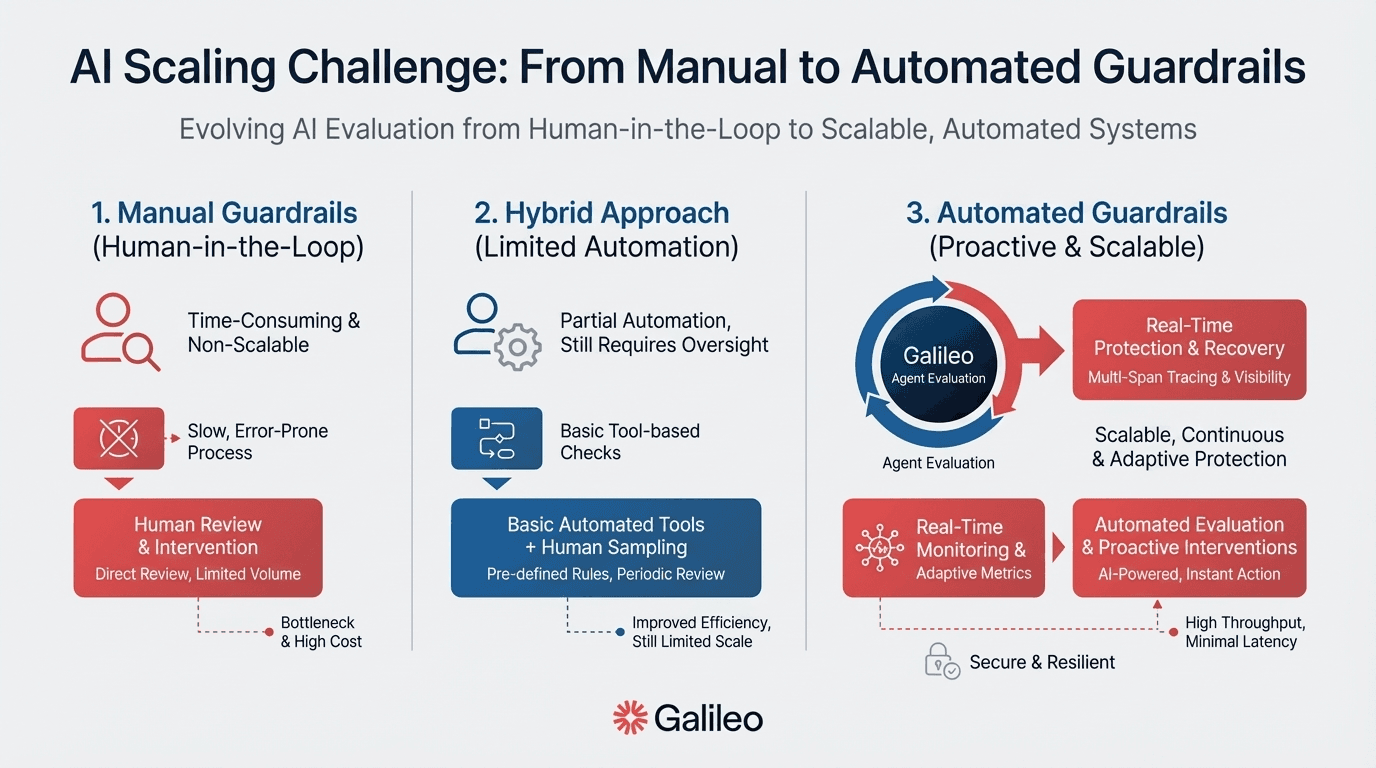

Your AI agents are multiplying faster than your governance capabilities. Manual reviews that worked for pilot programs now create deployment bottlenecks, and you're discovering policy violations in production rather than preventing them.

The cost of getting this wrong: $10.22 million per data breach on average, with 95% of AI pilots failing to deliver measurable ROI.

For platform teams and engineering leaders deploying AI at scale, the core tension is maintaining consistent safety and compliance controls across dozens of systems without slowing innovation to a crawl.

This article breaks down the guardrail architecture patterns—centralized service layers, layered request-path controls, API gateway enforcement—and operating models that scale with your team growth and product complexity.

We recently explored this topic on our Chain of Thought podcast, where industry experts shared practical insights and real-world implementation strategies:

Why are AI guardrails the key to scaling AI initiatives?

Guardrails change the economics of AI deployment. Without them, every new AI application requires its own safety review, its own compliance sign-off, its own security audit. Your platform team becomes a bottleneck, and product teams start shipping without approval because waiting six weeks for a review isn't viable when competitors are moving in days.

Your competitors face the same paradox: 88% of enterprises use AI in at least one business function, yet only one-third have scaled AI enterprise-wide. The gap between adoption and value realization stems from a failure to implement systematic governance architectures. AI-related incidents reached 233 in 2024, representing a 56.4% increase over 2023.

AI guardrails enable scaling by solving the fundamental tension between velocity and safety. When you move from 3 pilot systems to 20 production applications, manual safety reviews become bottlenecks. AI Guardrails automate these controls, allowing your engineering teams to ship faster while maintaining consistent governance.

The scaling advantage manifests in three ways.

First, guardrails eliminate redundant work: instead of each product team building their own safety controls, they consume centralized guardrail services. This reduces the compliance work that adds no value while accelerating approval processes.

Second, guardrails provide consistent protection across all AI systems. Whether you're deploying a customer service chatbot or a financial analysis agent, the same baseline controls apply automatically.

Third, guardrails enable autonomous operation at scale. Your 3 AI systems could be manually monitored; your 20 systems require automated real-time intervention.

When does ad hoc AI guardrailing break down?

Ad hoc governance works until it doesn't. The failure mode is predictable: gradual erosion followed by sudden collapse.

The erosion starts with workarounds:

Teams bypass reviews to hit deadlines. A product team needs to ship by quarter-end, so they skip the full security review and promise to circle back. That circle-back never happens, and now you have an unvalidated system in production.

Safety logic gets copy-pasted without context. Another team copies guardrail code from a different project without understanding the edge cases it was designed to handle. The code runs, but the protection is superficial.

Thresholds get tuned into uselessness. A third team implements content filtering but sets thresholds so permissive they're functionally decorative. The guardrail exists on paper but catches nothing in practice.

Ownership dissolves across teams. Nobody knows what controls actually exist across your AI portfolio. PII redaction is implemented three different ways. Output validation is someone else's responsibility, except it isn't anyone's.

Failure patterns stay invisible. When guardrails exist as scattered code across twelve repositories, you can't analyze trends. Which prompt injection techniques are attackers actually using? What percentage of flagged outputs are false positives? Where are your real vulnerabilities? Ad hoc implementations generate incidents. Systematic guardrails generate the telemetry that makes your AI systems smarter over time.

None of these decisions look dangerous in isolation. Collectively, they create the conditions for public failure. This can be an agent exposing customer data, a chatbot violating brand guidelines, a financial tool hallucinating numbers that make it into a client presentation.

What architectural patterns are used to scale AI guardrails?

Repeatable architecture separates organizations stuck in pilot purgatory from those running AI at enterprise scale."

AI guardrail taxonomy

Before implementing any architecture, you need shared language. Without it, your platform team's "output filtering" means toxicity classifiers while security interprets it as keyword blocking and product engineers think it's content moderation. Three teams, three definitions, architecture discussions that go nowhere.

A practical taxonomy organizes guardrails into four layers:

AI governance: Policies and frameworks establishing risk thresholds and approval workflows

Runtime inspection and enforcement: Real-time controls including input validation, output filtering, and intervention logic

Information governance: Data quality validation, PII handling, and compliance checks

Infrastructure and stack: Platform-level security controls, access management, and audit logging

The layers are interdependent. Runtime enforcement without AI governance means your guardrails have no policy basis—teams argue endlessly about what should be blocked.

Governance without runtime enforcement means policies exist in documentation but not in code. Information governance gaps mean your other controls are processing data that shouldn't reach your AI systems in the first place.

This taxonomy transforms vague requirements into precise technical specifications. When a stakeholder asks for "safer AI," you can decompose the request: which layer is the gap in?

Central guardrail service layer

Most teams let each product team implement their own guardrails, creating redundant code that teams maintain separately.

A centralized service pattern solves this through shared infrastructure where all AI interactions flow through a central service enforcing input validation before LLM processing, real-time monitoring during execution, output filtering post-processing, comprehensive logging and metrics, and compliance data storage.

Production platforms demonstrate sub-200ms interception latency, handling input validation (prompt injection, PII, toxicity) and output filtering (hallucinations, context adherence) through centralized APIs that any product team can consume without reimplementing safety logic.

Start centralized when you have 10-50 engineers. Your platform team should own all guardrail infrastructure while product teams consume guardrails as managed services, ensuring consistent standards across all AI applications without building safety infrastructure from scratch.

Layered guardrails in the request path

You probably treat guardrails as binary: either a prompt passes validation or it doesn't. Production systems require more nuance. Your tenth product team needs healthcare-specific PII controls that your baseline configuration doesn't address. Financial services applications require transaction limits that customer service chatbots don't.

Beyond 50 engineers, you need flexibility for product-specific requirements while maintaining baseline controls. Platforms implement inheritance models where base guardrails defined centrally automatically inherit to all AI products, but product teams can layer additional controls. Production frameworks demonstrate multi-layer security:

Inputs get scanned for jailbreak attempts through adversarial pattern recognition

Agent reasoning steps get validated using chain-of-thought auditing

Generated code undergoes real-time static analysis

Each component operates at a distinct stage of the AI agent lifecycle to provide redundant protection even if individual layers fail, a critical design principle when adversaries actively probe for single points of failure.

API gateway as an enforcement point

Your API gateway already handles authentication, rate limiting, and routing, yet most teams build separate systems for AI guardrails. The gateway sits at the ideal intervention point: between users and your AI systems, where it can intercept requests before they reach models and validate responses before they reach users.

Gateways already possess the infrastructure components required for guardrails:

Request routing logic for directing traffic through safety pipelines

Header manipulation capabilities for context passing

Logging pipelines aggregating telemetry data

High-throughput processing handling enterprise scale

When you cross 100 engineers, you need agent inventory and identity management tracking all AI systems, centralized policies applied consistently across products with configurable overrides, unified observability aggregating telemetry from all agents, control and coordination tools for multi-agent workflows, and automated audit capabilities generating compliance evidence across all products.

Who owns AI guardrails at scale?

Architecture patterns fail without clear ownership. The most contentious question in your planning meetings isn't which technology to use; it's who is accountable when guardrails fail and who has authority to update policies without creating bottlenecks.

Successful operating models require platform teams with the right expertise, governance forums with clear decision rights, and guardrails embedded throughout your software development lifecycle.

Staff your guardrail platform team

How do you staff a specialized function when you're managing only three AI applications? AI guardrails require expertise spanning ML engineering, security, compliance, and ethics, yet you can't hire five specialists for a system supporting three applications.

Enterprise AI teams with 10-50 engineers typically need 5-15 person teams combining MLOps Engineers (3-5 FTEs) automating deployment pipelines, AI Ethics Specialists (1-2 FTEs) developing policy frameworks, and Security Engineers (2-3 FTEs) performing threat modeling.

When you cross 50 engineers, add Compliance Officers (1-2 FTEs) ensuring regulatory alignment and expand to 20-50 distributed practitioners. Leading frameworks integrate these roles across four SDLC phases: Design (ethics and policy), Development (MLOps automation), Deployment (security validation), and Operations (product management and monitoring).

Many AI pilots struggle to achieve measurable ROI, and governance challenges are frequently cited as barriers to success. Effective systematic oversight can help control compliance costs and maintain necessary controls, addressing issues that often impact AI pilot programs.

Define governance forums and decision rights

Without clear authority, product teams either bypass governance entirely or negotiations stall releases. MIT Sloan research on agentic AI enterprise transformation emphasizes that scaling AI requires redesigned decision rights and accountability structures through systematic governance frameworks, not just better tooling.

The UK Government AI Playbook establishes AI Governance Boards with multi-stakeholder representation requiring clear reporting lines to senior management, formally defined decision rights, and documented terms of reference.

Effective committees balance oversight with velocity: they establish policies defining risk thresholds, determine which AI applications require human oversight, and review aggregate metrics demonstrating compliance trends rather than approving every model deployment.

Embed AI guardrails across the SDLC

Most teams treat guardrail compliance as a pre-launch gate. By then, technical debt has accumulated and refactoring is expensive.

Production teams embed governance throughout development: catching violations at code commit when fixing them costs hours instead of weeks, validating safety controls during build pipelines before they reach staging environments, and monitoring compliance in production with automated remediation.

Industry best practices solve this through "shift-left" governance embedded across four key phases: design phase policy creation, development phase controls embedded in code workflows, deployment phase pre-production validation gates, and operations phase real-time runtime monitoring.

The shift-left approach works because it aligns incentives: developers receive immediate feedback on policy violations rather than discovering failures weeks later during review gates.

AI guardrails as a competitive advantage

The organizations winning with AI aren't moving fast and breaking things. They're moving fast because they built the governance infrastructure that lets them. Guardrails are the foundation that makes sustained innovation possible. Start building that foundation now.

Here's how Galileo helps you with AI guardrails:

Automated quality guardrails in CI/CD: Galileo integrates directly into your development workflow, running comprehensive evaluations on every code change and blocking releases that fail quality thresholds

Multi-dimensional response evaluation: With Galileo's Luna-2 evaluation models, you can assess every output across dozens of quality dimensions—correctness, toxicity, bias, adherence—at 97% lower cost than traditional LLM-based evaluation approaches

Real-time runtime protection: Galileo's Agent Protect scans every prompt and response in production, blocking harmful outputs before they reach users while maintaining detailed compliance logs for audit requirements

Intelligent failure detection: Galileo’s Insights Engine automatically clusters similar failures, surfaces root-cause patterns, and recommends fixes, reducing debugging time while building institutional knowledge

Human-in-the-loop optimization: Galileo's Continuous Learning via Human Feedback (CLHF) transforms expert reviews into reusable evaluators, accelerating iteration while maintaining quality standards

Discover how Galileo provides enterprise-grade AI guardrails with pre-built policies, real-time metrics, and ready-made integrations.

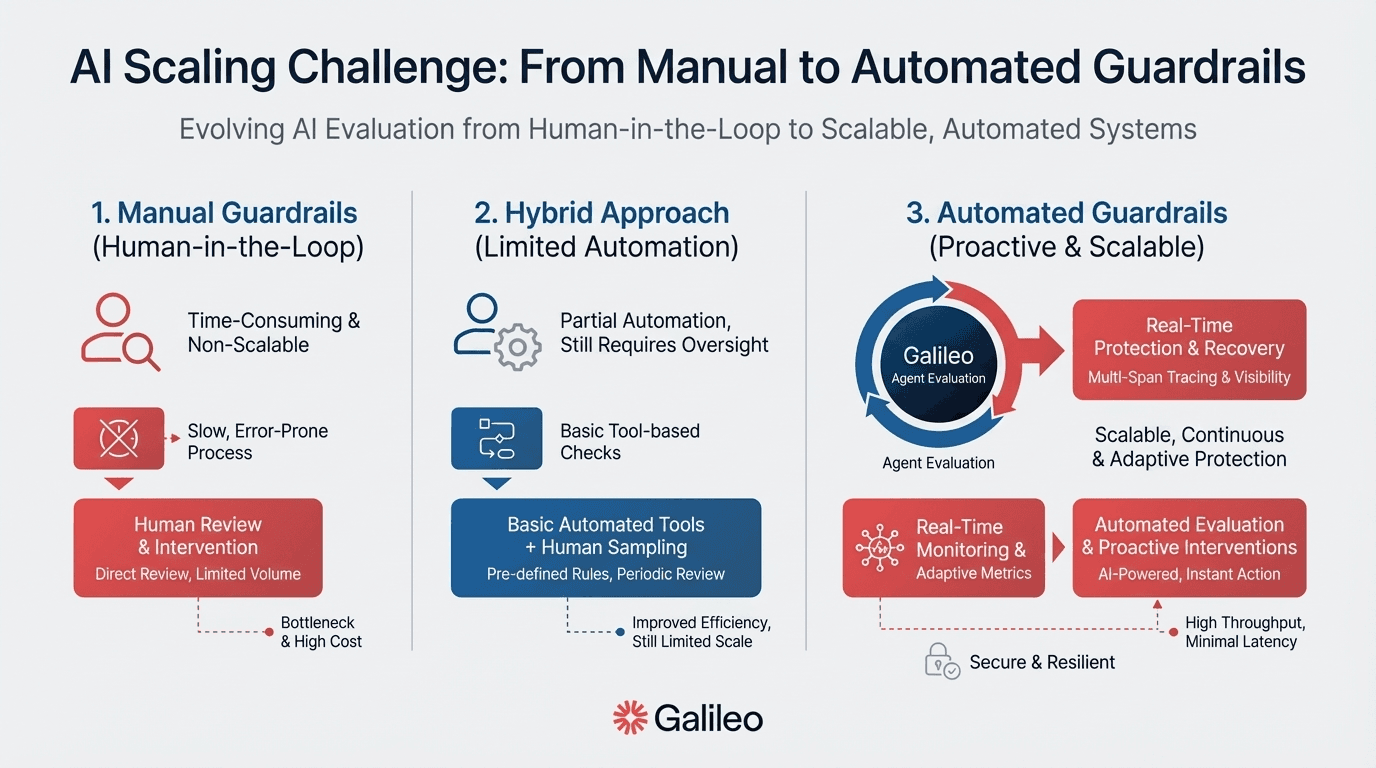

Your AI agents are multiplying faster than your governance capabilities. Manual reviews that worked for pilot programs now create deployment bottlenecks, and you're discovering policy violations in production rather than preventing them.

The cost of getting this wrong: $10.22 million per data breach on average, with 95% of AI pilots failing to deliver measurable ROI.

For platform teams and engineering leaders deploying AI at scale, the core tension is maintaining consistent safety and compliance controls across dozens of systems without slowing innovation to a crawl.

This article breaks down the guardrail architecture patterns—centralized service layers, layered request-path controls, API gateway enforcement—and operating models that scale with your team growth and product complexity.

We recently explored this topic on our Chain of Thought podcast, where industry experts shared practical insights and real-world implementation strategies:

Why are AI guardrails the key to scaling AI initiatives?

Guardrails change the economics of AI deployment. Without them, every new AI application requires its own safety review, its own compliance sign-off, its own security audit. Your platform team becomes a bottleneck, and product teams start shipping without approval because waiting six weeks for a review isn't viable when competitors are moving in days.

Your competitors face the same paradox: 88% of enterprises use AI in at least one business function, yet only one-third have scaled AI enterprise-wide. The gap between adoption and value realization stems from a failure to implement systematic governance architectures. AI-related incidents reached 233 in 2024, representing a 56.4% increase over 2023.

AI guardrails enable scaling by solving the fundamental tension between velocity and safety. When you move from 3 pilot systems to 20 production applications, manual safety reviews become bottlenecks. AI Guardrails automate these controls, allowing your engineering teams to ship faster while maintaining consistent governance.

The scaling advantage manifests in three ways.

First, guardrails eliminate redundant work: instead of each product team building their own safety controls, they consume centralized guardrail services. This reduces the compliance work that adds no value while accelerating approval processes.

Second, guardrails provide consistent protection across all AI systems. Whether you're deploying a customer service chatbot or a financial analysis agent, the same baseline controls apply automatically.

Third, guardrails enable autonomous operation at scale. Your 3 AI systems could be manually monitored; your 20 systems require automated real-time intervention.

When does ad hoc AI guardrailing break down?

Ad hoc governance works until it doesn't. The failure mode is predictable: gradual erosion followed by sudden collapse.

The erosion starts with workarounds:

Teams bypass reviews to hit deadlines. A product team needs to ship by quarter-end, so they skip the full security review and promise to circle back. That circle-back never happens, and now you have an unvalidated system in production.

Safety logic gets copy-pasted without context. Another team copies guardrail code from a different project without understanding the edge cases it was designed to handle. The code runs, but the protection is superficial.

Thresholds get tuned into uselessness. A third team implements content filtering but sets thresholds so permissive they're functionally decorative. The guardrail exists on paper but catches nothing in practice.

Ownership dissolves across teams. Nobody knows what controls actually exist across your AI portfolio. PII redaction is implemented three different ways. Output validation is someone else's responsibility, except it isn't anyone's.

Failure patterns stay invisible. When guardrails exist as scattered code across twelve repositories, you can't analyze trends. Which prompt injection techniques are attackers actually using? What percentage of flagged outputs are false positives? Where are your real vulnerabilities? Ad hoc implementations generate incidents. Systematic guardrails generate the telemetry that makes your AI systems smarter over time.

None of these decisions look dangerous in isolation. Collectively, they create the conditions for public failure. This can be an agent exposing customer data, a chatbot violating brand guidelines, a financial tool hallucinating numbers that make it into a client presentation.

What architectural patterns are used to scale AI guardrails?

Repeatable architecture separates organizations stuck in pilot purgatory from those running AI at enterprise scale."

AI guardrail taxonomy

Before implementing any architecture, you need shared language. Without it, your platform team's "output filtering" means toxicity classifiers while security interprets it as keyword blocking and product engineers think it's content moderation. Three teams, three definitions, architecture discussions that go nowhere.

A practical taxonomy organizes guardrails into four layers:

AI governance: Policies and frameworks establishing risk thresholds and approval workflows

Runtime inspection and enforcement: Real-time controls including input validation, output filtering, and intervention logic

Information governance: Data quality validation, PII handling, and compliance checks

Infrastructure and stack: Platform-level security controls, access management, and audit logging

The layers are interdependent. Runtime enforcement without AI governance means your guardrails have no policy basis—teams argue endlessly about what should be blocked.

Governance without runtime enforcement means policies exist in documentation but not in code. Information governance gaps mean your other controls are processing data that shouldn't reach your AI systems in the first place.

This taxonomy transforms vague requirements into precise technical specifications. When a stakeholder asks for "safer AI," you can decompose the request: which layer is the gap in?

Central guardrail service layer

Most teams let each product team implement their own guardrails, creating redundant code that teams maintain separately.

A centralized service pattern solves this through shared infrastructure where all AI interactions flow through a central service enforcing input validation before LLM processing, real-time monitoring during execution, output filtering post-processing, comprehensive logging and metrics, and compliance data storage.

Production platforms demonstrate sub-200ms interception latency, handling input validation (prompt injection, PII, toxicity) and output filtering (hallucinations, context adherence) through centralized APIs that any product team can consume without reimplementing safety logic.

Start centralized when you have 10-50 engineers. Your platform team should own all guardrail infrastructure while product teams consume guardrails as managed services, ensuring consistent standards across all AI applications without building safety infrastructure from scratch.

Layered guardrails in the request path

You probably treat guardrails as binary: either a prompt passes validation or it doesn't. Production systems require more nuance. Your tenth product team needs healthcare-specific PII controls that your baseline configuration doesn't address. Financial services applications require transaction limits that customer service chatbots don't.

Beyond 50 engineers, you need flexibility for product-specific requirements while maintaining baseline controls. Platforms implement inheritance models where base guardrails defined centrally automatically inherit to all AI products, but product teams can layer additional controls. Production frameworks demonstrate multi-layer security:

Inputs get scanned for jailbreak attempts through adversarial pattern recognition

Agent reasoning steps get validated using chain-of-thought auditing

Generated code undergoes real-time static analysis

Each component operates at a distinct stage of the AI agent lifecycle to provide redundant protection even if individual layers fail, a critical design principle when adversaries actively probe for single points of failure.

API gateway as an enforcement point

Your API gateway already handles authentication, rate limiting, and routing, yet most teams build separate systems for AI guardrails. The gateway sits at the ideal intervention point: between users and your AI systems, where it can intercept requests before they reach models and validate responses before they reach users.

Gateways already possess the infrastructure components required for guardrails:

Request routing logic for directing traffic through safety pipelines

Header manipulation capabilities for context passing

Logging pipelines aggregating telemetry data

High-throughput processing handling enterprise scale

When you cross 100 engineers, you need agent inventory and identity management tracking all AI systems, centralized policies applied consistently across products with configurable overrides, unified observability aggregating telemetry from all agents, control and coordination tools for multi-agent workflows, and automated audit capabilities generating compliance evidence across all products.

Who owns AI guardrails at scale?

Architecture patterns fail without clear ownership. The most contentious question in your planning meetings isn't which technology to use; it's who is accountable when guardrails fail and who has authority to update policies without creating bottlenecks.

Successful operating models require platform teams with the right expertise, governance forums with clear decision rights, and guardrails embedded throughout your software development lifecycle.

Staff your guardrail platform team

How do you staff a specialized function when you're managing only three AI applications? AI guardrails require expertise spanning ML engineering, security, compliance, and ethics, yet you can't hire five specialists for a system supporting three applications.

Enterprise AI teams with 10-50 engineers typically need 5-15 person teams combining MLOps Engineers (3-5 FTEs) automating deployment pipelines, AI Ethics Specialists (1-2 FTEs) developing policy frameworks, and Security Engineers (2-3 FTEs) performing threat modeling.

When you cross 50 engineers, add Compliance Officers (1-2 FTEs) ensuring regulatory alignment and expand to 20-50 distributed practitioners. Leading frameworks integrate these roles across four SDLC phases: Design (ethics and policy), Development (MLOps automation), Deployment (security validation), and Operations (product management and monitoring).

Many AI pilots struggle to achieve measurable ROI, and governance challenges are frequently cited as barriers to success. Effective systematic oversight can help control compliance costs and maintain necessary controls, addressing issues that often impact AI pilot programs.

Define governance forums and decision rights

Without clear authority, product teams either bypass governance entirely or negotiations stall releases. MIT Sloan research on agentic AI enterprise transformation emphasizes that scaling AI requires redesigned decision rights and accountability structures through systematic governance frameworks, not just better tooling.

The UK Government AI Playbook establishes AI Governance Boards with multi-stakeholder representation requiring clear reporting lines to senior management, formally defined decision rights, and documented terms of reference.

Effective committees balance oversight with velocity: they establish policies defining risk thresholds, determine which AI applications require human oversight, and review aggregate metrics demonstrating compliance trends rather than approving every model deployment.

Embed AI guardrails across the SDLC

Most teams treat guardrail compliance as a pre-launch gate. By then, technical debt has accumulated and refactoring is expensive.

Production teams embed governance throughout development: catching violations at code commit when fixing them costs hours instead of weeks, validating safety controls during build pipelines before they reach staging environments, and monitoring compliance in production with automated remediation.

Industry best practices solve this through "shift-left" governance embedded across four key phases: design phase policy creation, development phase controls embedded in code workflows, deployment phase pre-production validation gates, and operations phase real-time runtime monitoring.

The shift-left approach works because it aligns incentives: developers receive immediate feedback on policy violations rather than discovering failures weeks later during review gates.

AI guardrails as a competitive advantage

The organizations winning with AI aren't moving fast and breaking things. They're moving fast because they built the governance infrastructure that lets them. Guardrails are the foundation that makes sustained innovation possible. Start building that foundation now.

Here's how Galileo helps you with AI guardrails:

Automated quality guardrails in CI/CD: Galileo integrates directly into your development workflow, running comprehensive evaluations on every code change and blocking releases that fail quality thresholds

Multi-dimensional response evaluation: With Galileo's Luna-2 evaluation models, you can assess every output across dozens of quality dimensions—correctness, toxicity, bias, adherence—at 97% lower cost than traditional LLM-based evaluation approaches

Real-time runtime protection: Galileo's Agent Protect scans every prompt and response in production, blocking harmful outputs before they reach users while maintaining detailed compliance logs for audit requirements

Intelligent failure detection: Galileo’s Insights Engine automatically clusters similar failures, surfaces root-cause patterns, and recommends fixes, reducing debugging time while building institutional knowledge

Human-in-the-loop optimization: Galileo's Continuous Learning via Human Feedback (CLHF) transforms expert reviews into reusable evaluators, accelerating iteration while maintaining quality standards

Discover how Galileo provides enterprise-grade AI guardrails with pre-built policies, real-time metrics, and ready-made integrations.

Jackson Wells