Feb 2, 2026

PaperBench Explained: Why AI Agents Score 21% vs 41% for Humans

Your AI agent generates clean code for a novel algorithm. Hours later, it's stuck debugging environment errors. The gap between code generation and full execution represents the frontier challenge for autonomous AI.

PaperBench, OpenAI's benchmark released in April 2025, provides the first standardized measurement of this critical capability gap. Unlike traditional benchmarks testing isolated skills, this benchmark evaluates end-to-end research replication. It requires AI agents to understand cutting-edge papers, build complete codebases from scratch, and reproduce empirical results without human intervention.

TLDR

PaperBench measures AI agents' ability to replicate 20 ICML 2024 Spotlight papers autonomously

Best AI agents achieve 21% success versus 41% for human PhD researchers

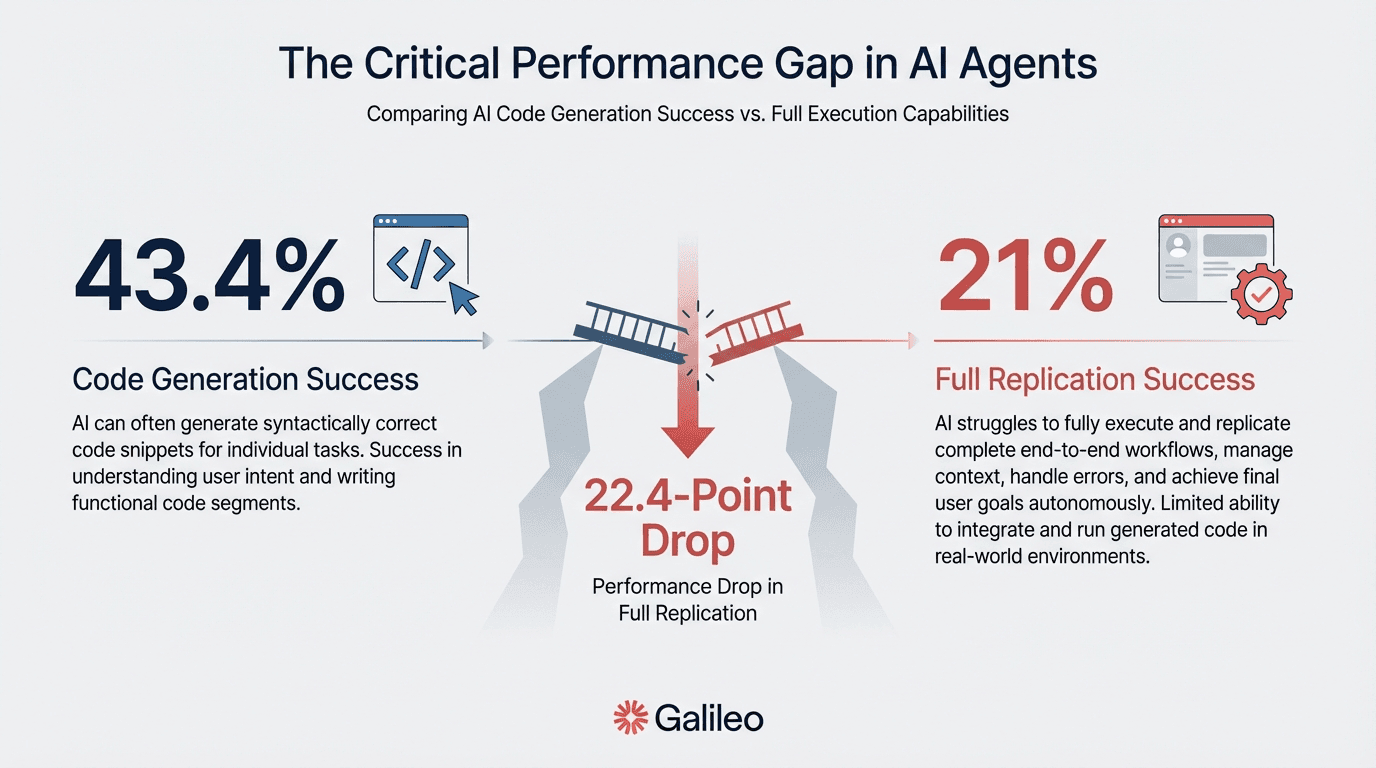

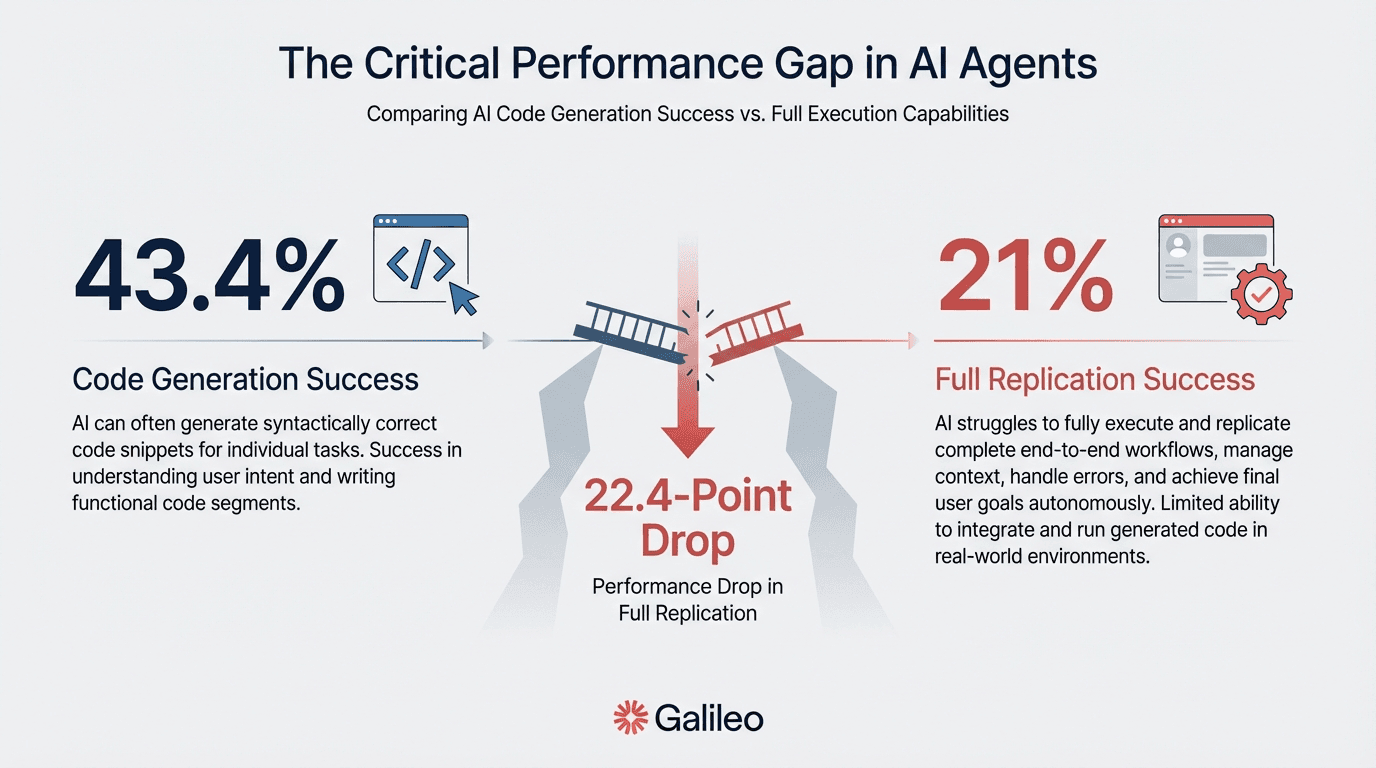

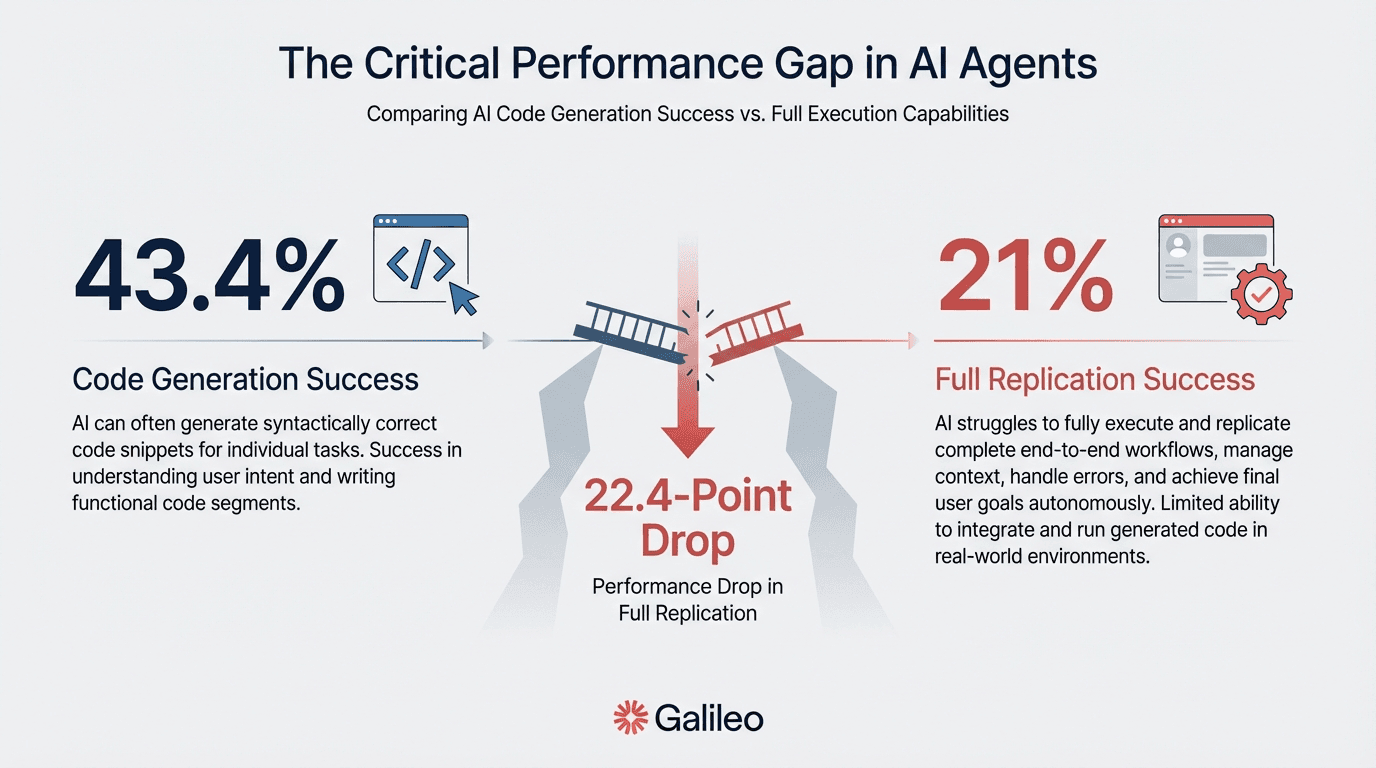

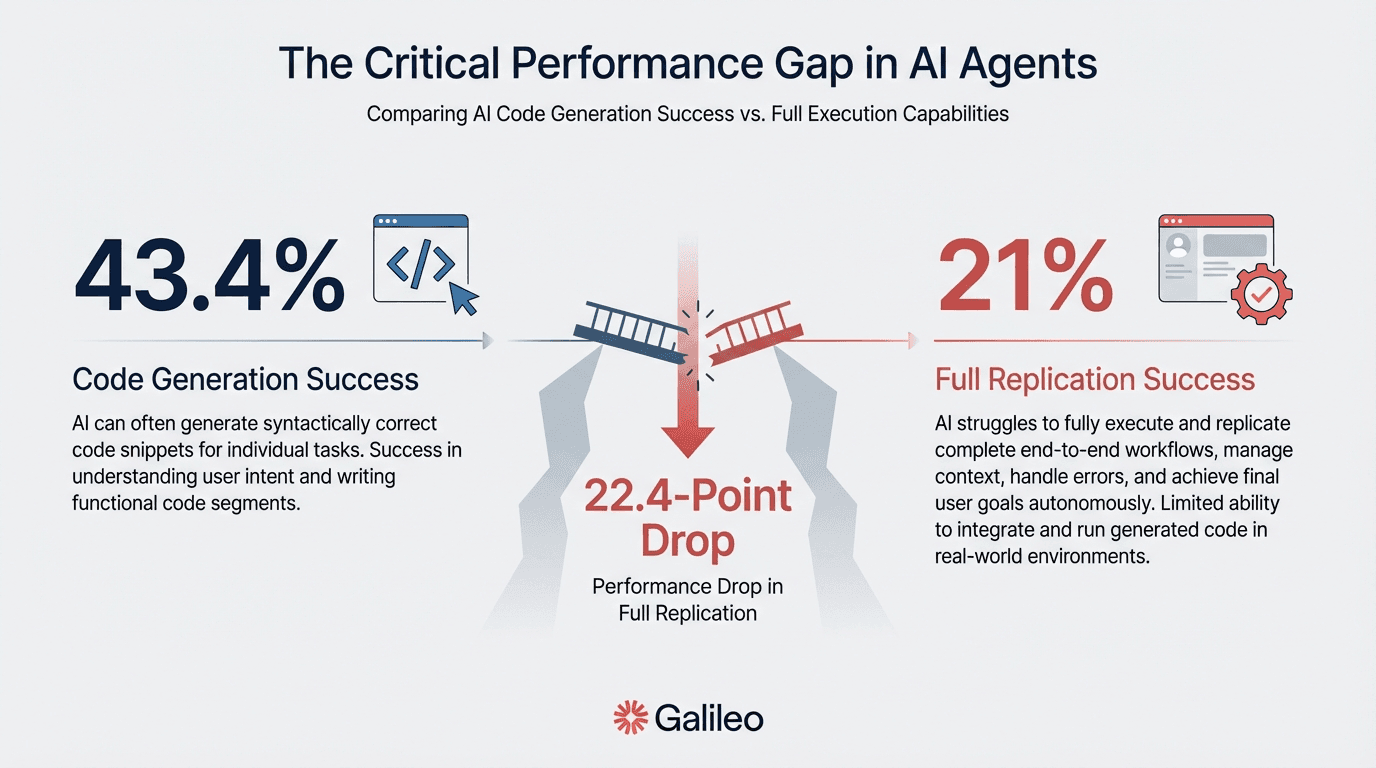

Code-only tasks succeed at 43.4%; full research replication drops to 21%

Hierarchical rubrics across 8,316 tasks enable precise capability gap identification

Performance reveals agents operate at approximately 50% of human expert capability

What Is PaperBench?

PaperBench is OpenAI's benchmark that measures AI agents' ability to autonomously replicate complete machine learning research papers from scratch. Released in April 2025, it requires agents to replicate 20 ICML 2024 Spotlight and Oral papers. The benchmark includes understanding paper contributions, developing complete codebases, and successfully executing experiments without access to the original authors' code.

For your team, this capability translates directly to R&D productivity: agents that can replicate research can accelerate internal innovation cycles. The benchmark tests whether agents can parse mathematical formulations from PDFs, translate algorithmic descriptions into working implementations, and validate numerical results against published figures.

End-to-end evaluation reveals capability gaps invisible in traditional benchmarks, giving you concrete data for resource allocation decisions.

Most benchmarks test thousands of simple tasks. PaperBench takes the opposite approach: 20 research papers from ICML 2024, decomposed into 8,316 individually gradable tasks across 12 AI research domains. The papers represent the top ~3.5% of conference submissions, ensuring evaluation against cutting-edge, peer-reviewed research.

The 12 research domains span modern machine learning:

Deep reinforcement learning

Robustness and adversarial methods

Probabilistic methods and uncertainty quantification

Large language models and transformers

Optimization, representation learning, and generative models

This breadth ensures agents face diverse challenges rather than narrow, domain-specific tasks. For procurement decisions, domain diversity means vendor performance claims must hold across your specific research areas.

Task Structure and Execution

Binary pass/fail grading misses partial progress and obscures where your investment delivers value. PaperBench solves this with a hierarchical rubric system: leaf nodes represent atomic pass/fail criteria for specific implementation components, while parent nodes aggregate child scores using weighted averages. This multi-level approach captures nuanced performance differences that simple binary metrics cannot detect.

This structure delivers strategic benefits for your team:

Granular capability mapping: Identify exactly which research tasks agents handle versus which require human expertise

Resource optimization: Direct engineering time toward high-failure task categories

Vendor comparison: Compare platforms on specific capability dimensions, not aggregate scores

Rubrics are co-developed with original paper authors, providing ground-truth validation that ensures evaluation criteria match actual research requirements. The critical deliverable is a runnable reproduce.sh script encapsulating the complete replication pipeline, enabling standardized execution across different agent implementations.

Rubrics and Automated Grading

Hierarchical rubric structures give you granular partial credit assessment that binary scoring completely misses. Parent nodes compute weighted averages of children, supporting measurement of partial progress: replicating some experiments but not others yields a score reflecting the weighted sum of successful subtasks. This approach rewards incremental capability improvements that traditional benchmarks would overlook entirely.

From a governance perspective, this granularity matters significantly. You can establish threshold policies at specific rubric levels rather than relying on opaque aggregate scores. Automated evaluation shows strong reliability, with F1 scores of 0.83 against human expert baseline, demonstrating that machine grading aligns closely with expert judgment.

However, for highest-stakes capability assessments, validate key findings with human expert review to account for variance in judgment outcomes. This hybrid approach balances evaluation efficiency with accuracy requirements.

PaperBench Results and Frontier Model Performance

Current results reveal both the promise and limitations of autonomous research agents. This section breaks down performance benchmarks, interpretation thresholds, and specific capability gaps you need to understand for deployment planning.

Current Performance Benchmarks

Claude 3.5 Sonnet with open-source scaffolding reaches 21.0% average replication score, representing the current state-of-the-art. For comparison, top ML PhD students achieve 41.4% success (best of 3 attempts after 48 hours.

Current best-performing AI agents achieve approximately half of human expert capability, a ~2x performance gap. When tested on a 3-paper subset, o1 demonstrated 26.6% performance and 43.4% on PaperBench Code-Dev, a code-only variant measuring implementation capability independent of full experimental execution.

What Is a Good PaperBench Score?

What separates state-of-the-art from breakthrough performance? These thresholds provide strategic context for vendor evaluation and help you set realistic expectations for AI research automation investments. Understanding where current systems fall on this scale enables better resource allocation and deployment planning decisions.

Below 20%: Severe limitations in autonomous research capabilities requiring extensive human intervention

20-30%: State-of-the-art AI performance (Claude 3.5 Sonnet at 21%, o1 subset at 26.6%)

30-45%: Strong performance approaching human-level capability suitable for semi-autonomous workflows

Above 45%: Breakthrough-level capability exceeding typical human expert performance

When evaluating vendors, contextualize their claims against these benchmarks. A system claiming 25% performance operates within expected state-of-the-art bounds, while claims above 35% warrant additional scrutiny and independent verification. Use these thresholds to calibrate investment decisions and set appropriate expectations with stakeholders about what autonomous research agents can realistically deliver today.

Where Agents Succeed and Fail

Code generation shows strong performance; full experimental execution reveals critical limitations. The o1-high model achieves 43.4% success on the PaperBench Code-Dev variant, more than doubling the full replication performance. This disparity highlights a fundamental capability asymmetry that shapes practical deployment strategies.

Performance collapses when agents must progress beyond code generation. The drop from 43.4% to 21.0% exposes the critical bottleneck: deploying experimental environments, debugging complex failures, and validating numerical results. Agents struggle with multi-step reasoning chains, environment configuration, dependency management, and iterative debugging cycles that human researchers handle intuitively.

Design workflows where agents handle high-volume code development while human experts focus on experimental design and complex debugging. Include checkpoint-based validation with human review at critical decision points. This hybrid approach leverages agent strengths in translation and implementation while compensating for weaknesses in execution and validation. Structure your teams to maximize handoff efficiency between automated code generation and human-led experimental workflows.

Why PaperBench Differs from Traditional AI Benchmarks

Understanding how PaperBench compares to established benchmarks helps you interpret vendor claims and build comprehensive evaluation strategies. This section covers benchmark comparisons, governance applications, and current limitations.

PaperBench vs. MMLU, HumanEval, and SWE-Bench

This benchmark represents a fundamental departure from traditional AI benchmarks by evaluating end-to-end autonomous research replication rather than isolated capabilities.

Traditional benchmarks test narrow skills: MMLU measures knowledge recall across academic subjects, HumanEval assesses function generation from docstrings, and SWE-Bench evaluates repository modifications for existing codebases. Each serves valuable purposes but captures only fragments of real-world research capability.

PaperBench requires replicating complete research implementations over hours to days, integrating paper comprehension, codebase development, experimental execution, and result validation. This holistic evaluation reveals capability gaps invisible when testing components in isolation.

When vendors report benchmark scores, understand what they measure: MMLU indicates knowledge breadth, HumanEval demonstrates function-level code generation, SWE-Bench reflects existing codebase modification, and PaperBench indicates autonomous research capability.

Build evaluation portfolios combining multiple benchmarks rather than relying on single metrics. Strong MMLU performance doesn't guarantee research replication success, just as high HumanEval scores don't predict experimental debugging capability.

Governance and Safety Applications

This benchmark provides critical infrastructure for measuring autonomous AI research capabilities, essential for evidence-based AI safety policy. NIST's framework requires continuous risk management, explainability requirements, and accountability mechanisms. PaperBench's hierarchical rubrics directly support these requirements by providing granular, auditable capability assessments that inform policy decisions with concrete data rather than speculation.

The benchmark enables governance functions through quantifiable capability measurement:

Threshold-based governance: Specific replication performance levels trigger enhanced safety protocols

Trend analysis: Track how quickly AI research capabilities advance over time

Comparative risk assessment: Benchmark different AI systems against standardized tasks

Your governance framework can use PaperBench scores for evidence-based policy decisions. Current 21% replication capability indicates agents operate at approximately 50% of human expert level.

Maintain audit trails for autonomous research agent decisions similar to PaperBench's rubric validation approach. Establish internal policies defining which capability thresholds require additional human oversight, creating proportionate governance that scales with demonstrated agent competence.

Current Limitations and Constraints

PaperBench faces several constraints limiting generalizability that you should factor into evaluation strategies. The benchmark includes only 20 ICML 2024 papers, creating selection bias toward specific research methodologies and problem types.

The single-conference snapshot cannot capture evolving research methodologies or temporal variations in AI research trends. Papers from other venues or time periods may present fundamentally different challenges.

The automated judge achieves an F1 score of 0.83 against human expert evaluation, representing approximately 17% grading variance. For high-stakes decisions, supplement automated evaluation with human expert review.

High computational costs create practical barriers to widespread adoption, requiring multi-GPU infrastructure and extended evaluation windows. The benchmark design deliberately focuses on empirical result replication while excluding assessment of theoretical contributions and novelty judgment.

Agents might replicate results mechanically without understanding underlying principles. Consider these limitations when extrapolating PaperBench performance to broader research automation capabilities within your organization.

How Can You Apply PaperBench Insights to Enterprise AI Strategy?

Understanding benchmark performance is only valuable when translated into concrete business decisions. Here's how to leverage PaperBench findings across roadmap planning, organizational design, and vendor evaluation to maximize your AI investment returns.

Translate Benchmark Scores into Strategic Roadmap Decisions

State-of-the-art performance at 21% means AI research automation remains in early maturity, suitable for pilot programs and human-augmented workflows, not fully autonomous deployment.

When you evaluate AI platforms, demand performance data across multiple benchmarks rather than relying on single-metric assessments. This multi-dimensional evaluation approach prevents over-reliance on inflated single metrics that mask critical capability gaps.

Assume approximately 50% capability versus expert humans when planning resource allocation. However, performance varies significantly by task type: code-only tasks reach 43.4% capability, while full experimental execution and validation drops to 21%.

Design workflows where agents handle high-volume code development and routine implementation tasks while human experts focus on experimental design, complex debugging, result validation, and long-horizon research planning. Structure your implementation timeline around these capability tiers, deploying agents first in areas where they perform best.

Restructure R&D Teams Around AI-Human Collaboration

The 43.4% success rate on code-only tasks versus 21.0% on full replication reveals where agents provide immediate productivity gains. Your research engineers shouldn't treat AI as a replacement; think code generation accelerator instead.

Agents can effectively translate research specifications into functional implementations, reducing implementation time for standard algorithms and data preprocessing pipelines. This allows your senior researchers to focus on higher-value activities like novel experimental design and cross-domain innovation.

However, the 22-point performance drop from code-only tasks to full research replication means you need strong experimental design and debugging capabilities within your organization.

Create dedicated roles focused on agent orchestration, validation frameworks, and quality assurance for AI-generated research code. Consider establishing an "AI Research Operations" function that bridges technical implementation and quality control, ensuring consistent output standards while maximizing agent utilization across projects.

Strengthen Procurement Processes with Performance-Based Vendor Evaluation

Your procurement process should require specific PaperBench performance data as part of due diligence, not just marketing claims about research automation. Demand verified replication scores and detailed performance breakdowns across the three core evaluation dimensions: code development, experiment execution, and result validation.

Granular performance data reveals critical capability gaps that aggregate scores often obscure. Request independent verification of claimed benchmark results whenever possible, as self-reported metrics frequently overstate production performance.

Vendor assessment extends beyond technical capabilities to governance maturity and operational support. Total cost of ownership extends beyond licensing fees to infrastructure requirements for multi-GPU environments, integration complexity with existing research workflows, and quality assurance costs for validating AI-generated research outputs.

Demand production deployment case studies with measured outcomes rather than theoretical capabilities. Evaluate vendor responsiveness, update frequency, and commitment to benchmark transparency as indicators of long-term partnership viability.

How Can You Build Internal PaperBench-Style Evaluations?

External benchmarks provide useful reference points, but your organization's unique research workflows require custom evaluation frameworks. Here's how to design, implement, and operationalize internal benchmarks that mirror PaperBench's rigorous methodology.

Identifying Your Benchmark Candidates

Which research tasks deserve evaluation infrastructure investment? Target those affecting competitive positioning. The highest-value benchmark candidates typically involve novel algorithm implementation for proprietary methods, experimental validation workflows specific to your domain, or methodology replication that directly supports product development.

Quality matters more than quantity when selecting papers to include. PaperBench uses only ICML Spotlight and Oral presentations; you should restrict your internal benchmark to the highest-quality research your team handles, whether peer-reviewed publications, validated internal research, or rigorously vetted vendor methodologies.

Domain experts who authored or deeply understand these research contributions should directly participate in rubric development, ensuring evaluation criteria reflect genuine technical standards rather than superficial checkboxes.

Designing Rubrics and Grading Systems

Binary pass/fail scoring misses partial progress. Hierarchical rubric structures give you granular assessment. Your leaf nodes need binary pass/fail criteria assessing verifiable requirements: correct algorithm implementation, proper data preprocessing, valid experimental configuration, and numerical accuracy within tolerance thresholds.

However, weight these evaluation criteria by business importance rather than treating all requirements equally—a critical production algorithm deserves higher weight than ancillary preprocessing steps.

Align your evaluation metrics with business objectives to ensure they reflect actual value creation. The hierarchical tree-structured model validated by PaperBench's methodology provides the proven framework.

Domain experts should co-develop rubrics with you to ensure evaluation criteria reflect genuine capability standards. Before deployment, validate automated graders against human expert assessment, accounting for measurement uncertainty in critical decisions.

Instrumentation and Governance Integration

Without a comprehensive logging infrastructure, you lose visibility into agent reasoning throughout evaluation workflows. Effective evaluation platforms should support a hierarchical trace-and-span architecture with structured logging patterns to capture agent decision-making.

Real-time monitoring with threshold-based alerting, aligned with PaperBench's hierarchical rubric measurement approach, allows rapid intervention when agents deviate from expected performance ranges.

Transform benchmarks from isolated tools into strategic assets by integrating with broader governance frameworks. Rather than treating evaluation as a one-time vendor selection exercise, design systems that allow research teams to autonomously conduct evaluations through intuitive interfaces and comprehensive documentation.

Establishing Evaluation Excellence for Autonomous Research

Current AI systems operate at approximately half of human expert performance. Position AI agents as code generation accelerators (43% success) while maintaining human expertise for experimental design and validation, where performance drops to 21%.

Build an evaluation infrastructure providing visibility into agent decision-making and allowing rapid iteration. Establish governance frameworks scaling with capability advancement rather than retrofitting oversight after deployment failures.

Explore Galileo's evaluation framework to build PaperBench-style assessments for your research automation strategy:

Hierarchical logging infrastructure: Capture agent decision-making across complex evaluation workflows

Custom metrics frameworks: Define domain-specific evaluation criteria aligned with research validation requirements

Real-time monitoring dashboards: Track autonomous agent performance with threshold-based alerting

Agent workflow orchestration: Design human-in-the-loop validation checkpoints at critical stages

Audit trail infrastructure: Maintain immutable records of agent decisions for governance compliance

Book a demo to see how Galileo's agent evaluation capabilities can help you build reliable AI agents with end-to-end observability, production guardrails, and research-backed metrics.

FAQs

What is PaperBench and why does it matter for enterprise AI?

PaperBench is OpenAI's benchmark measuring AI agents' ability to autonomously replicate cutting-edge machine learning research from 20 ICML 2024 papers. It matters because it provides quantifiable metrics for research automation capabilities beyond marketing claims, revealing that current best agents achieve only 21% success versus 41% for human experts.

How do you interpret PaperBench scores for procurement decisions?

Evaluate scores against current performance benchmarks: Below 20% indicates severe limitations requiring significant human oversight. Performance at 20-25% aligns with best-performing AI agents, representing approximately 50% of human expert capability. Demand detailed performance breakdowns across code development (43.4% success), execution (21.0% success), and result validation.

How do you design internal evaluation systems similar to PaperBench?

Start with business-critical research tasks as benchmark candidates, co-develop hierarchical rubrics with domain experts defining success criteria with binary leaf nodes and weighted parent aggregation, and implement scoring reflecting business value. Validate automated evaluation systems against human expert evaluation for your specific use cases.

When should you prioritize PaperBench versus other AI benchmarks?

Prioritize PaperBench when evaluating autonomous research capabilities and long-horizon task execution. Use MMLU for knowledge breadth assessment, HumanEval for basic coding capability, and SWE-Bench for software maintenance skills. Evaluate AI systems across multiple benchmark types to understand capability profiles.

What are the cost implications of deploying PaperBench-level research automation?

PaperBench's multi-GPU computational requirements and 48-hour evaluation windows create significant infrastructure costs. Account for GPU cluster expenses, compute costs for automated evaluation at scale, and quality assurance overhead for validating AI-generated outputs. Current 21% success rates mean you'll need human oversight for approximately 79% of research replication attempts.

Your AI agent generates clean code for a novel algorithm. Hours later, it's stuck debugging environment errors. The gap between code generation and full execution represents the frontier challenge for autonomous AI.

PaperBench, OpenAI's benchmark released in April 2025, provides the first standardized measurement of this critical capability gap. Unlike traditional benchmarks testing isolated skills, this benchmark evaluates end-to-end research replication. It requires AI agents to understand cutting-edge papers, build complete codebases from scratch, and reproduce empirical results without human intervention.

TLDR

PaperBench measures AI agents' ability to replicate 20 ICML 2024 Spotlight papers autonomously

Best AI agents achieve 21% success versus 41% for human PhD researchers

Code-only tasks succeed at 43.4%; full research replication drops to 21%

Hierarchical rubrics across 8,316 tasks enable precise capability gap identification

Performance reveals agents operate at approximately 50% of human expert capability

What Is PaperBench?

PaperBench is OpenAI's benchmark that measures AI agents' ability to autonomously replicate complete machine learning research papers from scratch. Released in April 2025, it requires agents to replicate 20 ICML 2024 Spotlight and Oral papers. The benchmark includes understanding paper contributions, developing complete codebases, and successfully executing experiments without access to the original authors' code.

For your team, this capability translates directly to R&D productivity: agents that can replicate research can accelerate internal innovation cycles. The benchmark tests whether agents can parse mathematical formulations from PDFs, translate algorithmic descriptions into working implementations, and validate numerical results against published figures.

End-to-end evaluation reveals capability gaps invisible in traditional benchmarks, giving you concrete data for resource allocation decisions.

Most benchmarks test thousands of simple tasks. PaperBench takes the opposite approach: 20 research papers from ICML 2024, decomposed into 8,316 individually gradable tasks across 12 AI research domains. The papers represent the top ~3.5% of conference submissions, ensuring evaluation against cutting-edge, peer-reviewed research.

The 12 research domains span modern machine learning:

Deep reinforcement learning

Robustness and adversarial methods

Probabilistic methods and uncertainty quantification

Large language models and transformers

Optimization, representation learning, and generative models

This breadth ensures agents face diverse challenges rather than narrow, domain-specific tasks. For procurement decisions, domain diversity means vendor performance claims must hold across your specific research areas.

Task Structure and Execution

Binary pass/fail grading misses partial progress and obscures where your investment delivers value. PaperBench solves this with a hierarchical rubric system: leaf nodes represent atomic pass/fail criteria for specific implementation components, while parent nodes aggregate child scores using weighted averages. This multi-level approach captures nuanced performance differences that simple binary metrics cannot detect.

This structure delivers strategic benefits for your team:

Granular capability mapping: Identify exactly which research tasks agents handle versus which require human expertise

Resource optimization: Direct engineering time toward high-failure task categories

Vendor comparison: Compare platforms on specific capability dimensions, not aggregate scores

Rubrics are co-developed with original paper authors, providing ground-truth validation that ensures evaluation criteria match actual research requirements. The critical deliverable is a runnable reproduce.sh script encapsulating the complete replication pipeline, enabling standardized execution across different agent implementations.

Rubrics and Automated Grading

Hierarchical rubric structures give you granular partial credit assessment that binary scoring completely misses. Parent nodes compute weighted averages of children, supporting measurement of partial progress: replicating some experiments but not others yields a score reflecting the weighted sum of successful subtasks. This approach rewards incremental capability improvements that traditional benchmarks would overlook entirely.

From a governance perspective, this granularity matters significantly. You can establish threshold policies at specific rubric levels rather than relying on opaque aggregate scores. Automated evaluation shows strong reliability, with F1 scores of 0.83 against human expert baseline, demonstrating that machine grading aligns closely with expert judgment.

However, for highest-stakes capability assessments, validate key findings with human expert review to account for variance in judgment outcomes. This hybrid approach balances evaluation efficiency with accuracy requirements.

PaperBench Results and Frontier Model Performance

Current results reveal both the promise and limitations of autonomous research agents. This section breaks down performance benchmarks, interpretation thresholds, and specific capability gaps you need to understand for deployment planning.

Current Performance Benchmarks

Claude 3.5 Sonnet with open-source scaffolding reaches 21.0% average replication score, representing the current state-of-the-art. For comparison, top ML PhD students achieve 41.4% success (best of 3 attempts after 48 hours.

Current best-performing AI agents achieve approximately half of human expert capability, a ~2x performance gap. When tested on a 3-paper subset, o1 demonstrated 26.6% performance and 43.4% on PaperBench Code-Dev, a code-only variant measuring implementation capability independent of full experimental execution.

What Is a Good PaperBench Score?

What separates state-of-the-art from breakthrough performance? These thresholds provide strategic context for vendor evaluation and help you set realistic expectations for AI research automation investments. Understanding where current systems fall on this scale enables better resource allocation and deployment planning decisions.

Below 20%: Severe limitations in autonomous research capabilities requiring extensive human intervention

20-30%: State-of-the-art AI performance (Claude 3.5 Sonnet at 21%, o1 subset at 26.6%)

30-45%: Strong performance approaching human-level capability suitable for semi-autonomous workflows

Above 45%: Breakthrough-level capability exceeding typical human expert performance

When evaluating vendors, contextualize their claims against these benchmarks. A system claiming 25% performance operates within expected state-of-the-art bounds, while claims above 35% warrant additional scrutiny and independent verification. Use these thresholds to calibrate investment decisions and set appropriate expectations with stakeholders about what autonomous research agents can realistically deliver today.

Where Agents Succeed and Fail

Code generation shows strong performance; full experimental execution reveals critical limitations. The o1-high model achieves 43.4% success on the PaperBench Code-Dev variant, more than doubling the full replication performance. This disparity highlights a fundamental capability asymmetry that shapes practical deployment strategies.

Performance collapses when agents must progress beyond code generation. The drop from 43.4% to 21.0% exposes the critical bottleneck: deploying experimental environments, debugging complex failures, and validating numerical results. Agents struggle with multi-step reasoning chains, environment configuration, dependency management, and iterative debugging cycles that human researchers handle intuitively.

Design workflows where agents handle high-volume code development while human experts focus on experimental design and complex debugging. Include checkpoint-based validation with human review at critical decision points. This hybrid approach leverages agent strengths in translation and implementation while compensating for weaknesses in execution and validation. Structure your teams to maximize handoff efficiency between automated code generation and human-led experimental workflows.

Why PaperBench Differs from Traditional AI Benchmarks

Understanding how PaperBench compares to established benchmarks helps you interpret vendor claims and build comprehensive evaluation strategies. This section covers benchmark comparisons, governance applications, and current limitations.

PaperBench vs. MMLU, HumanEval, and SWE-Bench

This benchmark represents a fundamental departure from traditional AI benchmarks by evaluating end-to-end autonomous research replication rather than isolated capabilities.

Traditional benchmarks test narrow skills: MMLU measures knowledge recall across academic subjects, HumanEval assesses function generation from docstrings, and SWE-Bench evaluates repository modifications for existing codebases. Each serves valuable purposes but captures only fragments of real-world research capability.

PaperBench requires replicating complete research implementations over hours to days, integrating paper comprehension, codebase development, experimental execution, and result validation. This holistic evaluation reveals capability gaps invisible when testing components in isolation.

When vendors report benchmark scores, understand what they measure: MMLU indicates knowledge breadth, HumanEval demonstrates function-level code generation, SWE-Bench reflects existing codebase modification, and PaperBench indicates autonomous research capability.

Build evaluation portfolios combining multiple benchmarks rather than relying on single metrics. Strong MMLU performance doesn't guarantee research replication success, just as high HumanEval scores don't predict experimental debugging capability.

Governance and Safety Applications

This benchmark provides critical infrastructure for measuring autonomous AI research capabilities, essential for evidence-based AI safety policy. NIST's framework requires continuous risk management, explainability requirements, and accountability mechanisms. PaperBench's hierarchical rubrics directly support these requirements by providing granular, auditable capability assessments that inform policy decisions with concrete data rather than speculation.

The benchmark enables governance functions through quantifiable capability measurement:

Threshold-based governance: Specific replication performance levels trigger enhanced safety protocols

Trend analysis: Track how quickly AI research capabilities advance over time

Comparative risk assessment: Benchmark different AI systems against standardized tasks

Your governance framework can use PaperBench scores for evidence-based policy decisions. Current 21% replication capability indicates agents operate at approximately 50% of human expert level.

Maintain audit trails for autonomous research agent decisions similar to PaperBench's rubric validation approach. Establish internal policies defining which capability thresholds require additional human oversight, creating proportionate governance that scales with demonstrated agent competence.

Current Limitations and Constraints

PaperBench faces several constraints limiting generalizability that you should factor into evaluation strategies. The benchmark includes only 20 ICML 2024 papers, creating selection bias toward specific research methodologies and problem types.

The single-conference snapshot cannot capture evolving research methodologies or temporal variations in AI research trends. Papers from other venues or time periods may present fundamentally different challenges.

The automated judge achieves an F1 score of 0.83 against human expert evaluation, representing approximately 17% grading variance. For high-stakes decisions, supplement automated evaluation with human expert review.

High computational costs create practical barriers to widespread adoption, requiring multi-GPU infrastructure and extended evaluation windows. The benchmark design deliberately focuses on empirical result replication while excluding assessment of theoretical contributions and novelty judgment.

Agents might replicate results mechanically without understanding underlying principles. Consider these limitations when extrapolating PaperBench performance to broader research automation capabilities within your organization.

How Can You Apply PaperBench Insights to Enterprise AI Strategy?

Understanding benchmark performance is only valuable when translated into concrete business decisions. Here's how to leverage PaperBench findings across roadmap planning, organizational design, and vendor evaluation to maximize your AI investment returns.

Translate Benchmark Scores into Strategic Roadmap Decisions

State-of-the-art performance at 21% means AI research automation remains in early maturity, suitable for pilot programs and human-augmented workflows, not fully autonomous deployment.

When you evaluate AI platforms, demand performance data across multiple benchmarks rather than relying on single-metric assessments. This multi-dimensional evaluation approach prevents over-reliance on inflated single metrics that mask critical capability gaps.

Assume approximately 50% capability versus expert humans when planning resource allocation. However, performance varies significantly by task type: code-only tasks reach 43.4% capability, while full experimental execution and validation drops to 21%.

Design workflows where agents handle high-volume code development and routine implementation tasks while human experts focus on experimental design, complex debugging, result validation, and long-horizon research planning. Structure your implementation timeline around these capability tiers, deploying agents first in areas where they perform best.

Restructure R&D Teams Around AI-Human Collaboration

The 43.4% success rate on code-only tasks versus 21.0% on full replication reveals where agents provide immediate productivity gains. Your research engineers shouldn't treat AI as a replacement; think code generation accelerator instead.

Agents can effectively translate research specifications into functional implementations, reducing implementation time for standard algorithms and data preprocessing pipelines. This allows your senior researchers to focus on higher-value activities like novel experimental design and cross-domain innovation.

However, the 22-point performance drop from code-only tasks to full research replication means you need strong experimental design and debugging capabilities within your organization.

Create dedicated roles focused on agent orchestration, validation frameworks, and quality assurance for AI-generated research code. Consider establishing an "AI Research Operations" function that bridges technical implementation and quality control, ensuring consistent output standards while maximizing agent utilization across projects.

Strengthen Procurement Processes with Performance-Based Vendor Evaluation

Your procurement process should require specific PaperBench performance data as part of due diligence, not just marketing claims about research automation. Demand verified replication scores and detailed performance breakdowns across the three core evaluation dimensions: code development, experiment execution, and result validation.

Granular performance data reveals critical capability gaps that aggregate scores often obscure. Request independent verification of claimed benchmark results whenever possible, as self-reported metrics frequently overstate production performance.

Vendor assessment extends beyond technical capabilities to governance maturity and operational support. Total cost of ownership extends beyond licensing fees to infrastructure requirements for multi-GPU environments, integration complexity with existing research workflows, and quality assurance costs for validating AI-generated research outputs.

Demand production deployment case studies with measured outcomes rather than theoretical capabilities. Evaluate vendor responsiveness, update frequency, and commitment to benchmark transparency as indicators of long-term partnership viability.

How Can You Build Internal PaperBench-Style Evaluations?

External benchmarks provide useful reference points, but your organization's unique research workflows require custom evaluation frameworks. Here's how to design, implement, and operationalize internal benchmarks that mirror PaperBench's rigorous methodology.

Identifying Your Benchmark Candidates

Which research tasks deserve evaluation infrastructure investment? Target those affecting competitive positioning. The highest-value benchmark candidates typically involve novel algorithm implementation for proprietary methods, experimental validation workflows specific to your domain, or methodology replication that directly supports product development.

Quality matters more than quantity when selecting papers to include. PaperBench uses only ICML Spotlight and Oral presentations; you should restrict your internal benchmark to the highest-quality research your team handles, whether peer-reviewed publications, validated internal research, or rigorously vetted vendor methodologies.

Domain experts who authored or deeply understand these research contributions should directly participate in rubric development, ensuring evaluation criteria reflect genuine technical standards rather than superficial checkboxes.

Designing Rubrics and Grading Systems

Binary pass/fail scoring misses partial progress. Hierarchical rubric structures give you granular assessment. Your leaf nodes need binary pass/fail criteria assessing verifiable requirements: correct algorithm implementation, proper data preprocessing, valid experimental configuration, and numerical accuracy within tolerance thresholds.

However, weight these evaluation criteria by business importance rather than treating all requirements equally—a critical production algorithm deserves higher weight than ancillary preprocessing steps.

Align your evaluation metrics with business objectives to ensure they reflect actual value creation. The hierarchical tree-structured model validated by PaperBench's methodology provides the proven framework.

Domain experts should co-develop rubrics with you to ensure evaluation criteria reflect genuine capability standards. Before deployment, validate automated graders against human expert assessment, accounting for measurement uncertainty in critical decisions.

Instrumentation and Governance Integration

Without a comprehensive logging infrastructure, you lose visibility into agent reasoning throughout evaluation workflows. Effective evaluation platforms should support a hierarchical trace-and-span architecture with structured logging patterns to capture agent decision-making.

Real-time monitoring with threshold-based alerting, aligned with PaperBench's hierarchical rubric measurement approach, allows rapid intervention when agents deviate from expected performance ranges.

Transform benchmarks from isolated tools into strategic assets by integrating with broader governance frameworks. Rather than treating evaluation as a one-time vendor selection exercise, design systems that allow research teams to autonomously conduct evaluations through intuitive interfaces and comprehensive documentation.

Establishing Evaluation Excellence for Autonomous Research

Current AI systems operate at approximately half of human expert performance. Position AI agents as code generation accelerators (43% success) while maintaining human expertise for experimental design and validation, where performance drops to 21%.

Build an evaluation infrastructure providing visibility into agent decision-making and allowing rapid iteration. Establish governance frameworks scaling with capability advancement rather than retrofitting oversight after deployment failures.

Explore Galileo's evaluation framework to build PaperBench-style assessments for your research automation strategy:

Hierarchical logging infrastructure: Capture agent decision-making across complex evaluation workflows

Custom metrics frameworks: Define domain-specific evaluation criteria aligned with research validation requirements

Real-time monitoring dashboards: Track autonomous agent performance with threshold-based alerting

Agent workflow orchestration: Design human-in-the-loop validation checkpoints at critical stages

Audit trail infrastructure: Maintain immutable records of agent decisions for governance compliance

Book a demo to see how Galileo's agent evaluation capabilities can help you build reliable AI agents with end-to-end observability, production guardrails, and research-backed metrics.

FAQs

What is PaperBench and why does it matter for enterprise AI?

PaperBench is OpenAI's benchmark measuring AI agents' ability to autonomously replicate cutting-edge machine learning research from 20 ICML 2024 papers. It matters because it provides quantifiable metrics for research automation capabilities beyond marketing claims, revealing that current best agents achieve only 21% success versus 41% for human experts.

How do you interpret PaperBench scores for procurement decisions?

Evaluate scores against current performance benchmarks: Below 20% indicates severe limitations requiring significant human oversight. Performance at 20-25% aligns with best-performing AI agents, representing approximately 50% of human expert capability. Demand detailed performance breakdowns across code development (43.4% success), execution (21.0% success), and result validation.

How do you design internal evaluation systems similar to PaperBench?

Start with business-critical research tasks as benchmark candidates, co-develop hierarchical rubrics with domain experts defining success criteria with binary leaf nodes and weighted parent aggregation, and implement scoring reflecting business value. Validate automated evaluation systems against human expert evaluation for your specific use cases.

When should you prioritize PaperBench versus other AI benchmarks?

Prioritize PaperBench when evaluating autonomous research capabilities and long-horizon task execution. Use MMLU for knowledge breadth assessment, HumanEval for basic coding capability, and SWE-Bench for software maintenance skills. Evaluate AI systems across multiple benchmark types to understand capability profiles.

What are the cost implications of deploying PaperBench-level research automation?

PaperBench's multi-GPU computational requirements and 48-hour evaluation windows create significant infrastructure costs. Account for GPU cluster expenses, compute costs for automated evaluation at scale, and quality assurance overhead for validating AI-generated outputs. Current 21% success rates mean you'll need human oversight for approximately 79% of research replication attempts.

Your AI agent generates clean code for a novel algorithm. Hours later, it's stuck debugging environment errors. The gap between code generation and full execution represents the frontier challenge for autonomous AI.

PaperBench, OpenAI's benchmark released in April 2025, provides the first standardized measurement of this critical capability gap. Unlike traditional benchmarks testing isolated skills, this benchmark evaluates end-to-end research replication. It requires AI agents to understand cutting-edge papers, build complete codebases from scratch, and reproduce empirical results without human intervention.

TLDR

PaperBench measures AI agents' ability to replicate 20 ICML 2024 Spotlight papers autonomously

Best AI agents achieve 21% success versus 41% for human PhD researchers

Code-only tasks succeed at 43.4%; full research replication drops to 21%

Hierarchical rubrics across 8,316 tasks enable precise capability gap identification

Performance reveals agents operate at approximately 50% of human expert capability

What Is PaperBench?

PaperBench is OpenAI's benchmark that measures AI agents' ability to autonomously replicate complete machine learning research papers from scratch. Released in April 2025, it requires agents to replicate 20 ICML 2024 Spotlight and Oral papers. The benchmark includes understanding paper contributions, developing complete codebases, and successfully executing experiments without access to the original authors' code.

For your team, this capability translates directly to R&D productivity: agents that can replicate research can accelerate internal innovation cycles. The benchmark tests whether agents can parse mathematical formulations from PDFs, translate algorithmic descriptions into working implementations, and validate numerical results against published figures.

End-to-end evaluation reveals capability gaps invisible in traditional benchmarks, giving you concrete data for resource allocation decisions.

Most benchmarks test thousands of simple tasks. PaperBench takes the opposite approach: 20 research papers from ICML 2024, decomposed into 8,316 individually gradable tasks across 12 AI research domains. The papers represent the top ~3.5% of conference submissions, ensuring evaluation against cutting-edge, peer-reviewed research.

The 12 research domains span modern machine learning:

Deep reinforcement learning

Robustness and adversarial methods

Probabilistic methods and uncertainty quantification

Large language models and transformers

Optimization, representation learning, and generative models

This breadth ensures agents face diverse challenges rather than narrow, domain-specific tasks. For procurement decisions, domain diversity means vendor performance claims must hold across your specific research areas.

Task Structure and Execution

Binary pass/fail grading misses partial progress and obscures where your investment delivers value. PaperBench solves this with a hierarchical rubric system: leaf nodes represent atomic pass/fail criteria for specific implementation components, while parent nodes aggregate child scores using weighted averages. This multi-level approach captures nuanced performance differences that simple binary metrics cannot detect.

This structure delivers strategic benefits for your team:

Granular capability mapping: Identify exactly which research tasks agents handle versus which require human expertise

Resource optimization: Direct engineering time toward high-failure task categories

Vendor comparison: Compare platforms on specific capability dimensions, not aggregate scores

Rubrics are co-developed with original paper authors, providing ground-truth validation that ensures evaluation criteria match actual research requirements. The critical deliverable is a runnable reproduce.sh script encapsulating the complete replication pipeline, enabling standardized execution across different agent implementations.

Rubrics and Automated Grading

Hierarchical rubric structures give you granular partial credit assessment that binary scoring completely misses. Parent nodes compute weighted averages of children, supporting measurement of partial progress: replicating some experiments but not others yields a score reflecting the weighted sum of successful subtasks. This approach rewards incremental capability improvements that traditional benchmarks would overlook entirely.

From a governance perspective, this granularity matters significantly. You can establish threshold policies at specific rubric levels rather than relying on opaque aggregate scores. Automated evaluation shows strong reliability, with F1 scores of 0.83 against human expert baseline, demonstrating that machine grading aligns closely with expert judgment.

However, for highest-stakes capability assessments, validate key findings with human expert review to account for variance in judgment outcomes. This hybrid approach balances evaluation efficiency with accuracy requirements.

PaperBench Results and Frontier Model Performance

Current results reveal both the promise and limitations of autonomous research agents. This section breaks down performance benchmarks, interpretation thresholds, and specific capability gaps you need to understand for deployment planning.

Current Performance Benchmarks

Claude 3.5 Sonnet with open-source scaffolding reaches 21.0% average replication score, representing the current state-of-the-art. For comparison, top ML PhD students achieve 41.4% success (best of 3 attempts after 48 hours.

Current best-performing AI agents achieve approximately half of human expert capability, a ~2x performance gap. When tested on a 3-paper subset, o1 demonstrated 26.6% performance and 43.4% on PaperBench Code-Dev, a code-only variant measuring implementation capability independent of full experimental execution.

What Is a Good PaperBench Score?

What separates state-of-the-art from breakthrough performance? These thresholds provide strategic context for vendor evaluation and help you set realistic expectations for AI research automation investments. Understanding where current systems fall on this scale enables better resource allocation and deployment planning decisions.

Below 20%: Severe limitations in autonomous research capabilities requiring extensive human intervention

20-30%: State-of-the-art AI performance (Claude 3.5 Sonnet at 21%, o1 subset at 26.6%)

30-45%: Strong performance approaching human-level capability suitable for semi-autonomous workflows

Above 45%: Breakthrough-level capability exceeding typical human expert performance

When evaluating vendors, contextualize their claims against these benchmarks. A system claiming 25% performance operates within expected state-of-the-art bounds, while claims above 35% warrant additional scrutiny and independent verification. Use these thresholds to calibrate investment decisions and set appropriate expectations with stakeholders about what autonomous research agents can realistically deliver today.

Where Agents Succeed and Fail

Code generation shows strong performance; full experimental execution reveals critical limitations. The o1-high model achieves 43.4% success on the PaperBench Code-Dev variant, more than doubling the full replication performance. This disparity highlights a fundamental capability asymmetry that shapes practical deployment strategies.

Performance collapses when agents must progress beyond code generation. The drop from 43.4% to 21.0% exposes the critical bottleneck: deploying experimental environments, debugging complex failures, and validating numerical results. Agents struggle with multi-step reasoning chains, environment configuration, dependency management, and iterative debugging cycles that human researchers handle intuitively.

Design workflows where agents handle high-volume code development while human experts focus on experimental design and complex debugging. Include checkpoint-based validation with human review at critical decision points. This hybrid approach leverages agent strengths in translation and implementation while compensating for weaknesses in execution and validation. Structure your teams to maximize handoff efficiency between automated code generation and human-led experimental workflows.

Why PaperBench Differs from Traditional AI Benchmarks

Understanding how PaperBench compares to established benchmarks helps you interpret vendor claims and build comprehensive evaluation strategies. This section covers benchmark comparisons, governance applications, and current limitations.

PaperBench vs. MMLU, HumanEval, and SWE-Bench

This benchmark represents a fundamental departure from traditional AI benchmarks by evaluating end-to-end autonomous research replication rather than isolated capabilities.

Traditional benchmarks test narrow skills: MMLU measures knowledge recall across academic subjects, HumanEval assesses function generation from docstrings, and SWE-Bench evaluates repository modifications for existing codebases. Each serves valuable purposes but captures only fragments of real-world research capability.

PaperBench requires replicating complete research implementations over hours to days, integrating paper comprehension, codebase development, experimental execution, and result validation. This holistic evaluation reveals capability gaps invisible when testing components in isolation.

When vendors report benchmark scores, understand what they measure: MMLU indicates knowledge breadth, HumanEval demonstrates function-level code generation, SWE-Bench reflects existing codebase modification, and PaperBench indicates autonomous research capability.

Build evaluation portfolios combining multiple benchmarks rather than relying on single metrics. Strong MMLU performance doesn't guarantee research replication success, just as high HumanEval scores don't predict experimental debugging capability.

Governance and Safety Applications

This benchmark provides critical infrastructure for measuring autonomous AI research capabilities, essential for evidence-based AI safety policy. NIST's framework requires continuous risk management, explainability requirements, and accountability mechanisms. PaperBench's hierarchical rubrics directly support these requirements by providing granular, auditable capability assessments that inform policy decisions with concrete data rather than speculation.

The benchmark enables governance functions through quantifiable capability measurement:

Threshold-based governance: Specific replication performance levels trigger enhanced safety protocols

Trend analysis: Track how quickly AI research capabilities advance over time

Comparative risk assessment: Benchmark different AI systems against standardized tasks

Your governance framework can use PaperBench scores for evidence-based policy decisions. Current 21% replication capability indicates agents operate at approximately 50% of human expert level.

Maintain audit trails for autonomous research agent decisions similar to PaperBench's rubric validation approach. Establish internal policies defining which capability thresholds require additional human oversight, creating proportionate governance that scales with demonstrated agent competence.

Current Limitations and Constraints

PaperBench faces several constraints limiting generalizability that you should factor into evaluation strategies. The benchmark includes only 20 ICML 2024 papers, creating selection bias toward specific research methodologies and problem types.

The single-conference snapshot cannot capture evolving research methodologies or temporal variations in AI research trends. Papers from other venues or time periods may present fundamentally different challenges.

The automated judge achieves an F1 score of 0.83 against human expert evaluation, representing approximately 17% grading variance. For high-stakes decisions, supplement automated evaluation with human expert review.

High computational costs create practical barriers to widespread adoption, requiring multi-GPU infrastructure and extended evaluation windows. The benchmark design deliberately focuses on empirical result replication while excluding assessment of theoretical contributions and novelty judgment.

Agents might replicate results mechanically without understanding underlying principles. Consider these limitations when extrapolating PaperBench performance to broader research automation capabilities within your organization.

How Can You Apply PaperBench Insights to Enterprise AI Strategy?

Understanding benchmark performance is only valuable when translated into concrete business decisions. Here's how to leverage PaperBench findings across roadmap planning, organizational design, and vendor evaluation to maximize your AI investment returns.

Translate Benchmark Scores into Strategic Roadmap Decisions

State-of-the-art performance at 21% means AI research automation remains in early maturity, suitable for pilot programs and human-augmented workflows, not fully autonomous deployment.

When you evaluate AI platforms, demand performance data across multiple benchmarks rather than relying on single-metric assessments. This multi-dimensional evaluation approach prevents over-reliance on inflated single metrics that mask critical capability gaps.

Assume approximately 50% capability versus expert humans when planning resource allocation. However, performance varies significantly by task type: code-only tasks reach 43.4% capability, while full experimental execution and validation drops to 21%.

Design workflows where agents handle high-volume code development and routine implementation tasks while human experts focus on experimental design, complex debugging, result validation, and long-horizon research planning. Structure your implementation timeline around these capability tiers, deploying agents first in areas where they perform best.

Restructure R&D Teams Around AI-Human Collaboration

The 43.4% success rate on code-only tasks versus 21.0% on full replication reveals where agents provide immediate productivity gains. Your research engineers shouldn't treat AI as a replacement; think code generation accelerator instead.

Agents can effectively translate research specifications into functional implementations, reducing implementation time for standard algorithms and data preprocessing pipelines. This allows your senior researchers to focus on higher-value activities like novel experimental design and cross-domain innovation.

However, the 22-point performance drop from code-only tasks to full research replication means you need strong experimental design and debugging capabilities within your organization.

Create dedicated roles focused on agent orchestration, validation frameworks, and quality assurance for AI-generated research code. Consider establishing an "AI Research Operations" function that bridges technical implementation and quality control, ensuring consistent output standards while maximizing agent utilization across projects.

Strengthen Procurement Processes with Performance-Based Vendor Evaluation

Your procurement process should require specific PaperBench performance data as part of due diligence, not just marketing claims about research automation. Demand verified replication scores and detailed performance breakdowns across the three core evaluation dimensions: code development, experiment execution, and result validation.

Granular performance data reveals critical capability gaps that aggregate scores often obscure. Request independent verification of claimed benchmark results whenever possible, as self-reported metrics frequently overstate production performance.

Vendor assessment extends beyond technical capabilities to governance maturity and operational support. Total cost of ownership extends beyond licensing fees to infrastructure requirements for multi-GPU environments, integration complexity with existing research workflows, and quality assurance costs for validating AI-generated research outputs.

Demand production deployment case studies with measured outcomes rather than theoretical capabilities. Evaluate vendor responsiveness, update frequency, and commitment to benchmark transparency as indicators of long-term partnership viability.

How Can You Build Internal PaperBench-Style Evaluations?

External benchmarks provide useful reference points, but your organization's unique research workflows require custom evaluation frameworks. Here's how to design, implement, and operationalize internal benchmarks that mirror PaperBench's rigorous methodology.

Identifying Your Benchmark Candidates

Which research tasks deserve evaluation infrastructure investment? Target those affecting competitive positioning. The highest-value benchmark candidates typically involve novel algorithm implementation for proprietary methods, experimental validation workflows specific to your domain, or methodology replication that directly supports product development.

Quality matters more than quantity when selecting papers to include. PaperBench uses only ICML Spotlight and Oral presentations; you should restrict your internal benchmark to the highest-quality research your team handles, whether peer-reviewed publications, validated internal research, or rigorously vetted vendor methodologies.

Domain experts who authored or deeply understand these research contributions should directly participate in rubric development, ensuring evaluation criteria reflect genuine technical standards rather than superficial checkboxes.

Designing Rubrics and Grading Systems

Binary pass/fail scoring misses partial progress. Hierarchical rubric structures give you granular assessment. Your leaf nodes need binary pass/fail criteria assessing verifiable requirements: correct algorithm implementation, proper data preprocessing, valid experimental configuration, and numerical accuracy within tolerance thresholds.

However, weight these evaluation criteria by business importance rather than treating all requirements equally—a critical production algorithm deserves higher weight than ancillary preprocessing steps.

Align your evaluation metrics with business objectives to ensure they reflect actual value creation. The hierarchical tree-structured model validated by PaperBench's methodology provides the proven framework.

Domain experts should co-develop rubrics with you to ensure evaluation criteria reflect genuine capability standards. Before deployment, validate automated graders against human expert assessment, accounting for measurement uncertainty in critical decisions.

Instrumentation and Governance Integration

Without a comprehensive logging infrastructure, you lose visibility into agent reasoning throughout evaluation workflows. Effective evaluation platforms should support a hierarchical trace-and-span architecture with structured logging patterns to capture agent decision-making.

Real-time monitoring with threshold-based alerting, aligned with PaperBench's hierarchical rubric measurement approach, allows rapid intervention when agents deviate from expected performance ranges.

Transform benchmarks from isolated tools into strategic assets by integrating with broader governance frameworks. Rather than treating evaluation as a one-time vendor selection exercise, design systems that allow research teams to autonomously conduct evaluations through intuitive interfaces and comprehensive documentation.

Establishing Evaluation Excellence for Autonomous Research

Current AI systems operate at approximately half of human expert performance. Position AI agents as code generation accelerators (43% success) while maintaining human expertise for experimental design and validation, where performance drops to 21%.

Build an evaluation infrastructure providing visibility into agent decision-making and allowing rapid iteration. Establish governance frameworks scaling with capability advancement rather than retrofitting oversight after deployment failures.

Explore Galileo's evaluation framework to build PaperBench-style assessments for your research automation strategy:

Hierarchical logging infrastructure: Capture agent decision-making across complex evaluation workflows

Custom metrics frameworks: Define domain-specific evaluation criteria aligned with research validation requirements

Real-time monitoring dashboards: Track autonomous agent performance with threshold-based alerting

Agent workflow orchestration: Design human-in-the-loop validation checkpoints at critical stages

Audit trail infrastructure: Maintain immutable records of agent decisions for governance compliance

Book a demo to see how Galileo's agent evaluation capabilities can help you build reliable AI agents with end-to-end observability, production guardrails, and research-backed metrics.

FAQs

What is PaperBench and why does it matter for enterprise AI?

PaperBench is OpenAI's benchmark measuring AI agents' ability to autonomously replicate cutting-edge machine learning research from 20 ICML 2024 papers. It matters because it provides quantifiable metrics for research automation capabilities beyond marketing claims, revealing that current best agents achieve only 21% success versus 41% for human experts.

How do you interpret PaperBench scores for procurement decisions?

Evaluate scores against current performance benchmarks: Below 20% indicates severe limitations requiring significant human oversight. Performance at 20-25% aligns with best-performing AI agents, representing approximately 50% of human expert capability. Demand detailed performance breakdowns across code development (43.4% success), execution (21.0% success), and result validation.

How do you design internal evaluation systems similar to PaperBench?

Start with business-critical research tasks as benchmark candidates, co-develop hierarchical rubrics with domain experts defining success criteria with binary leaf nodes and weighted parent aggregation, and implement scoring reflecting business value. Validate automated evaluation systems against human expert evaluation for your specific use cases.

When should you prioritize PaperBench versus other AI benchmarks?

Prioritize PaperBench when evaluating autonomous research capabilities and long-horizon task execution. Use MMLU for knowledge breadth assessment, HumanEval for basic coding capability, and SWE-Bench for software maintenance skills. Evaluate AI systems across multiple benchmark types to understand capability profiles.

What are the cost implications of deploying PaperBench-level research automation?

PaperBench's multi-GPU computational requirements and 48-hour evaluation windows create significant infrastructure costs. Account for GPU cluster expenses, compute costs for automated evaluation at scale, and quality assurance overhead for validating AI-generated outputs. Current 21% success rates mean you'll need human oversight for approximately 79% of research replication attempts.

Your AI agent generates clean code for a novel algorithm. Hours later, it's stuck debugging environment errors. The gap between code generation and full execution represents the frontier challenge for autonomous AI.

PaperBench, OpenAI's benchmark released in April 2025, provides the first standardized measurement of this critical capability gap. Unlike traditional benchmarks testing isolated skills, this benchmark evaluates end-to-end research replication. It requires AI agents to understand cutting-edge papers, build complete codebases from scratch, and reproduce empirical results without human intervention.

TLDR

PaperBench measures AI agents' ability to replicate 20 ICML 2024 Spotlight papers autonomously

Best AI agents achieve 21% success versus 41% for human PhD researchers

Code-only tasks succeed at 43.4%; full research replication drops to 21%

Hierarchical rubrics across 8,316 tasks enable precise capability gap identification

Performance reveals agents operate at approximately 50% of human expert capability

What Is PaperBench?

PaperBench is OpenAI's benchmark that measures AI agents' ability to autonomously replicate complete machine learning research papers from scratch. Released in April 2025, it requires agents to replicate 20 ICML 2024 Spotlight and Oral papers. The benchmark includes understanding paper contributions, developing complete codebases, and successfully executing experiments without access to the original authors' code.

For your team, this capability translates directly to R&D productivity: agents that can replicate research can accelerate internal innovation cycles. The benchmark tests whether agents can parse mathematical formulations from PDFs, translate algorithmic descriptions into working implementations, and validate numerical results against published figures.

End-to-end evaluation reveals capability gaps invisible in traditional benchmarks, giving you concrete data for resource allocation decisions.

Most benchmarks test thousands of simple tasks. PaperBench takes the opposite approach: 20 research papers from ICML 2024, decomposed into 8,316 individually gradable tasks across 12 AI research domains. The papers represent the top ~3.5% of conference submissions, ensuring evaluation against cutting-edge, peer-reviewed research.

The 12 research domains span modern machine learning:

Deep reinforcement learning

Robustness and adversarial methods

Probabilistic methods and uncertainty quantification

Large language models and transformers

Optimization, representation learning, and generative models

This breadth ensures agents face diverse challenges rather than narrow, domain-specific tasks. For procurement decisions, domain diversity means vendor performance claims must hold across your specific research areas.

Task Structure and Execution

Binary pass/fail grading misses partial progress and obscures where your investment delivers value. PaperBench solves this with a hierarchical rubric system: leaf nodes represent atomic pass/fail criteria for specific implementation components, while parent nodes aggregate child scores using weighted averages. This multi-level approach captures nuanced performance differences that simple binary metrics cannot detect.

This structure delivers strategic benefits for your team:

Granular capability mapping: Identify exactly which research tasks agents handle versus which require human expertise

Resource optimization: Direct engineering time toward high-failure task categories

Vendor comparison: Compare platforms on specific capability dimensions, not aggregate scores

Rubrics are co-developed with original paper authors, providing ground-truth validation that ensures evaluation criteria match actual research requirements. The critical deliverable is a runnable reproduce.sh script encapsulating the complete replication pipeline, enabling standardized execution across different agent implementations.

Rubrics and Automated Grading

Hierarchical rubric structures give you granular partial credit assessment that binary scoring completely misses. Parent nodes compute weighted averages of children, supporting measurement of partial progress: replicating some experiments but not others yields a score reflecting the weighted sum of successful subtasks. This approach rewards incremental capability improvements that traditional benchmarks would overlook entirely.

From a governance perspective, this granularity matters significantly. You can establish threshold policies at specific rubric levels rather than relying on opaque aggregate scores. Automated evaluation shows strong reliability, with F1 scores of 0.83 against human expert baseline, demonstrating that machine grading aligns closely with expert judgment.

However, for highest-stakes capability assessments, validate key findings with human expert review to account for variance in judgment outcomes. This hybrid approach balances evaluation efficiency with accuracy requirements.

PaperBench Results and Frontier Model Performance

Current results reveal both the promise and limitations of autonomous research agents. This section breaks down performance benchmarks, interpretation thresholds, and specific capability gaps you need to understand for deployment planning.

Current Performance Benchmarks

Claude 3.5 Sonnet with open-source scaffolding reaches 21.0% average replication score, representing the current state-of-the-art. For comparison, top ML PhD students achieve 41.4% success (best of 3 attempts after 48 hours.

Current best-performing AI agents achieve approximately half of human expert capability, a ~2x performance gap. When tested on a 3-paper subset, o1 demonstrated 26.6% performance and 43.4% on PaperBench Code-Dev, a code-only variant measuring implementation capability independent of full experimental execution.

What Is a Good PaperBench Score?

What separates state-of-the-art from breakthrough performance? These thresholds provide strategic context for vendor evaluation and help you set realistic expectations for AI research automation investments. Understanding where current systems fall on this scale enables better resource allocation and deployment planning decisions.

Below 20%: Severe limitations in autonomous research capabilities requiring extensive human intervention

20-30%: State-of-the-art AI performance (Claude 3.5 Sonnet at 21%, o1 subset at 26.6%)

30-45%: Strong performance approaching human-level capability suitable for semi-autonomous workflows

Above 45%: Breakthrough-level capability exceeding typical human expert performance

When evaluating vendors, contextualize their claims against these benchmarks. A system claiming 25% performance operates within expected state-of-the-art bounds, while claims above 35% warrant additional scrutiny and independent verification. Use these thresholds to calibrate investment decisions and set appropriate expectations with stakeholders about what autonomous research agents can realistically deliver today.

Where Agents Succeed and Fail

Code generation shows strong performance; full experimental execution reveals critical limitations. The o1-high model achieves 43.4% success on the PaperBench Code-Dev variant, more than doubling the full replication performance. This disparity highlights a fundamental capability asymmetry that shapes practical deployment strategies.

Performance collapses when agents must progress beyond code generation. The drop from 43.4% to 21.0% exposes the critical bottleneck: deploying experimental environments, debugging complex failures, and validating numerical results. Agents struggle with multi-step reasoning chains, environment configuration, dependency management, and iterative debugging cycles that human researchers handle intuitively.

Design workflows where agents handle high-volume code development while human experts focus on experimental design and complex debugging. Include checkpoint-based validation with human review at critical decision points. This hybrid approach leverages agent strengths in translation and implementation while compensating for weaknesses in execution and validation. Structure your teams to maximize handoff efficiency between automated code generation and human-led experimental workflows.

Why PaperBench Differs from Traditional AI Benchmarks

Understanding how PaperBench compares to established benchmarks helps you interpret vendor claims and build comprehensive evaluation strategies. This section covers benchmark comparisons, governance applications, and current limitations.

PaperBench vs. MMLU, HumanEval, and SWE-Bench